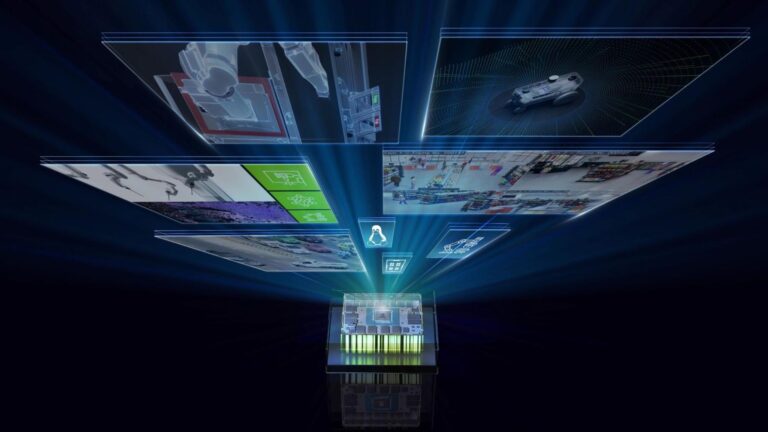

We’re excited to announce support for the Meta Llama 3 family of models in NVIDIA TensorRT-LLM, accelerating and optimizing your LLM inference performance. You…

We’re excited to announce support for the Meta Llama 3 family of models in NVIDIA TensorRT-LLM, accelerating and optimizing your LLM inference performance. You can immediately try Llama 3 8B and Llama 3 70B—the first models in the series—through a browser user interface. Or, through API endpoints running on a fully accelerated NVIDIA stack from the NVIDIA API catalog, where Llama 3 is packaged as…