Large language models (LLMs) provide a wide range of powerful enhancements to nearly any application that processes text. And yet they also introduce new risks, including:

Prompt injection, which may enable attackers to control the output of the LLM or LLM-enabled application.

Information leaks, which occur when private data used to train the LLM or used at runtime can be inferred or extracted by an attacker.

LLM reliability, which is a threat when LLMs occasionally produce incorrect information simply by chance.

This post walks through these security vulnerabilities in detail and outlines best practices for designing or evaluating a secure LLM-enabled application.

Prompt injection

Prompt injection is the most common and well-known LLM attack. It enables attackers to control the output of the LLM, potentially affecting the behavior of downstream queries and plug-ins connected to the LLM. This can have further consequences for the application and for the responses that future users receive. Prompt injection attacks can be either direct or indirect.

Direct prompt injection

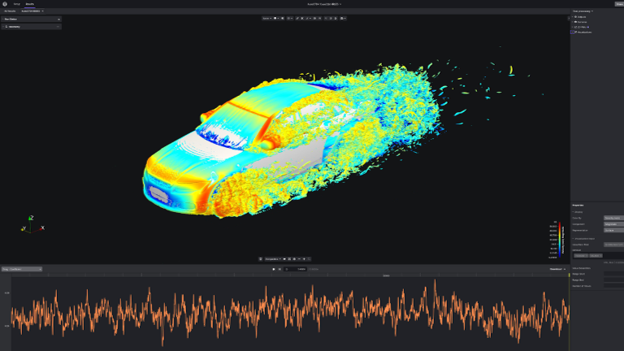

In the case of direct prompt injection attacks, the attacker interacts with the LLM directly, attempting to make the LLM produce a specific response. An example of a direct prompt injection leading to remote code execution is shown in Figure 1. For more details about direct prompt injection, see Securing LLM Systems Against Prompt Injection.

Figure 1. An example of a direct prompt injection attack in which an LLM-powered application is made to execute attacker code

Indirect prompt injection

Indirect prompt injection relies on the LLM having access to an external data source that it uses when constructing queries to the system. An attacker can insert malicious content into these external data sources. That content is then ingested by the LLM and inserted into the prompt to produce the response desired by the attacker. For more information about indirect prompt injection, see Mitigating Stored Prompt Injection Attacks Against LLM Applications.

Trust boundaries

With both direct and indirect prompt injection, once the attacker is able to successfully introduce their input into the LLM context, they have significant influence (if not outright control) over the output of the LLM. Because the external sources that LLMs may use can be so difficult to control, and LLM users themselves may be malicious, it’s important to treat any LLM responses as potentially untrustworthy.

A trust boundary must be established between those responses and any systems that process them. Some practical steps to enforce this separation are listed below.

Parameterize plug-ins. Strictly limit the number of actions that a given plug-in can perform. For example, a plug-in that operates on a user’s email might require a message ID and a specific operation such as ‘reply’ or ‘forward’, and accept free-form text only for insertion into the body of an email.

Sanitize inputs to the plug-in before use. Email body text might have any HTML elements forcibly removed before inserting, for example. Or a forward email operation might require that the recipient be present in the user’s address book.

Request explicit authorization from the user when a plug-in operates on a sensitive system. Any such operation should trigger an immediate request for the user to re-authorize the action, accompanied by a summary of the action that is about to be performed.

Require specific authorization from the user when multiple plug-ins are called in sequence. This pattern—allowing the output of one plug-in to be fed to another plug-in—can quite rapidly lead to unexpected and even dangerous behavior. Allowing the user to check and verify which plug-ins are being called and what action they will take can help mitigate the issue.

Manage plug-in authorization carefully. Separate any service account from the LLM service account. If user authorization is required for a plug-in’s action, then that authorization should be delegated to the plug-in using a secure method such as OAuth2.
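The first three steps above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: `EmailAction`, `build_email_action`, `execute_with_confirmation`, the message ID format, and the regex-based HTML stripping are all hypothetical names and simplifications chosen for the example. A production system would likely use a vetted HTML sanitizer and a real mail API.

```python
import html
import re
from dataclasses import dataclass
from typing import Callable, Optional, Set

# Hypothetical allowlist: the only operations this plug-in will ever perform.
ALLOWED_OPERATIONS = {"reply", "forward"}
# Assumed message ID format, for illustration only.
MESSAGE_ID_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,64}")
TAG_PATTERN = re.compile(r"<[^>]*>")


@dataclass(frozen=True)
class EmailAction:
    message_id: str
    operation: str
    body: str
    recipient: Optional[str] = None


def strip_html(text: str) -> str:
    """Remove anything that looks like an HTML tag, then escape what is left."""
    return html.escape(TAG_PATTERN.sub("", text))


def build_email_action(llm_args: dict, address_book: Set[str]) -> EmailAction:
    """Validate arguments proposed by the LLM; raise ValueError on anything unexpected."""
    operation = llm_args.get("operation")
    if not isinstance(operation, str) or operation not in ALLOWED_OPERATIONS:
        raise ValueError(f"operation not allowed: {operation!r}")
    message_id = str(llm_args.get("message_id", ""))
    if not MESSAGE_ID_PATTERN.fullmatch(message_id):
        raise ValueError("malformed message_id")
    # A recipient is only meaningful for 'forward'; ignore it otherwise.
    recipient = llm_args.get("recipient") if operation == "forward" else None
    if operation == "forward" and (
        not isinstance(recipient, str) or recipient not in address_book
    ):
        raise ValueError("recipient is not in the user's address book")
    body = strip_html(str(llm_args.get("body", "")))
    return EmailAction(message_id, operation, body, recipient)


def execute_with_confirmation(action: EmailAction, confirm: Callable[[str], bool]) -> bool:
    """Show the user a summary and act only if they explicitly approve."""
    summary = f"{action.operation} message {action.message_id}"
    if action.recipient:
        summary += f" to {action.recipient}"
    if not confirm(summary):
        return False
    # A real plug-in would call the mail service here, using credentials
    # delegated by the user rather than the LLM service account.
    return True
```

The LLM never chooses which code runs. It can only fill in a small set of validated fields, and the user sees a summary before anything is sent.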

Information leaks

Information leaks from the LLM and LLM-enabled applications create confidentiality risk. If an LLM is either trained or customized on private data, a skilled attacker can perform model inversion or training data extraction attacks to access data that application developers considered private.

Logging of both prompts and completions can accidentally leak data across permission boundaries by violating service-side role-based access controls for data at rest. If the LLM itself is provided access rights to information, or stores logs, it can often be induced into revealing this data.

Leaks from the LLM itself

The LLM itself can leak information to an attacker in several ways. With prompt extraction attacks, an attacker can use prompt injection techniques to induce the LLM to reveal information contained in its prompt template, such as model instructions, model persona information, or even secrets such as passwords.

With model inversion attacks, an attacker can recover some of the data used to train the model. Depending on the details of the attack, these records might be recovered at random, or the attacker may be able to bias the search toward particular records they suspect might be present. For instance, they might be able to extract examples of personally identifiable information (PII) used in training the LLM. To learn more, see Algorithms that Remember: Model Inversion Attacks and Data Protection Law.

Finally, training data membership inference attacks enable an attacker to determine whether a particular bit of information already known to them was likely contained within the training data of the model. For instance, they might be able to determine whether their PII in particular was used to train the LLM.

Fortunately, mitigation for these attacks is relatively straightforward.

To avoid the risk of prompt extraction attacks, do not share any information that the current LLM user is not authorized to see in the system prompt template. This may include information retrieved from a retrieval augmented generation (RAG) architecture. Assume that anything included in a prompt template is visible to a sufficiently motivated attacker. In particular, passwords, access tokens, or API keys should never be placed in the prompt, or anywhere else directly accessible to the LLM. Strict isolation of information is the best defense.

To reduce the risk of sensitive training data being extracted from the model, the best approach is simply to not train on it. Given enough queries, it is inevitable that the LLM will eventually incorporate some element of that sensitive data into its response. If the model must be able to use or answer questions about sensitive information, a RAG architecture may be a more secure approach.

In such an architecture, the LLM is not trained on sensitive documents, but is given access to a document store that is capable of 1) identifying and returning relevant sensitive documents to the LLM to assist in generation, and 2) verifying the authorization of the current user to access those documents.

While this avoids the need to train the LLM on sensitive data to produce acceptable results, it does introduce additional complexity to the application with respect to conveying authorization and tracking document permissions. This must be carefully handled to prevent other confidentiality violations.
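A minimal sketch of the two document-store responsibilities described above, assuming a toy in-memory store with keyword matching. `Document`, `AuthorizedDocumentStore`, and `build_prompt` are hypothetical names, and a real system would use a vector index and an external permission service.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_users: FrozenSet[str]


class AuthorizedDocumentStore:
    """Toy document store that filters by permission before matching."""

    def __init__(self, documents: Iterable[Document]):
        self._documents = list(documents)

    def search(self, query: str, user_id: str) -> List[Document]:
        # user_id must come from the authenticated session, never from the prompt.
        terms = query.lower().split()
        return [
            doc
            for doc in self._documents
            if user_id in doc.allowed_users
            and any(term in doc.text.lower() for term in terms)
        ]


def build_prompt(question: str, user_id: str, store: AuthorizedDocumentStore) -> str:
    """Assemble a prompt that contains only documents the user may read."""
    docs = store.search(question, user_id)
    context = "\n".join(f"[{doc.doc_id}] {doc.text}" for doc in docs)
    return f"Context:\n{context}\n\nQuestion: {question}"
```

Because the permission check happens inside the store, a document the user cannot read never reaches the prompt, so no prompt injection can make the LLM reveal it.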

If the sensitive data has already been trained into the model, then the risk can still be somewhat mitigated by rate-limiting queries, not providing detailed information about probabilities of the LLM completions back to the user, and adding logging and alerting to the application.

Restricting the query budget to the minimum that is consistent with the functionality of the LLM-enabled application, and not providing any detailed probability information to the final user, make both the inversion and inference attacks extremely difficult and time-consuming to execute.
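Both mitigations can be sketched briefly. The example assumes a sliding-window budget per user and a completion represented as a dictionary with a `text` field plus optional probability details. `QueryBudget`, `public_view`, and that dictionary shape are hypothetical, and appropriate limits depend on the application.

```python
import time
from collections import defaultdict, deque
from typing import Callable


class QueryBudget:
    """Sliding-window limit on the number of queries each user may issue."""

    def __init__(self, max_queries: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._max = max_queries
        self._window = window_seconds
        self._clock = clock
        self._history = defaultdict(deque)

    def allow(self, user_id: str) -> bool:
        now = self._clock()
        history = self._history[user_id]
        # Drop timestamps that have aged out of the window.
        while history and now - history[0] >= self._window:
            history.popleft()
        if len(history) >= self._max:
            return False  # A real service would also log and alert here.
        history.append(now)
        return True


def public_view(completion: dict) -> dict:
    """Return only the generated text, dropping token probabilities and other details."""
    return {"text": completion.get("text", "")}
```

Returning an explicit allowlist of fields, rather than deleting known-sensitive ones, means that any new detail the model backend starts emitting stays hidden by default.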

Working with an AI Red Team to evaluate data leakage may be helpful in quantifying risk, setting appropriate rate limits for a particular application, and identifying queries or patterns of queries within user sessions that might indicate an attempt to extract training data and should therefore trigger an alert.

Application-related leaks

In addition to LLM-specific attacks, the novelty of LLMs can lead to more basic errors in constructing an LLM-enabled application. Logging of prompts and responses can often lead to service-side information leaks. Either a user who has not been properly educated introduces proprietary or sensitive information into the application, or the LLM provides a response based on sensitive information which is logged without the appropriate access controls.

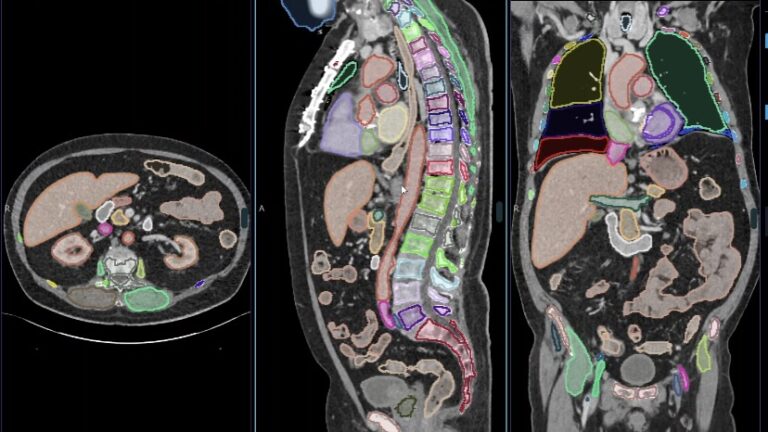

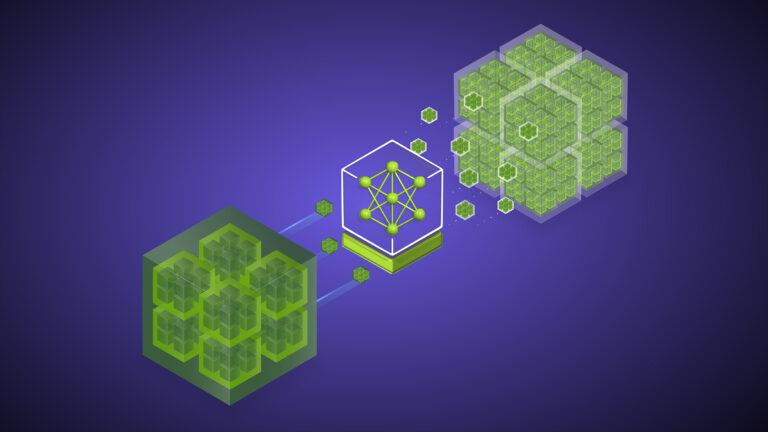

In Figure 2, a user makes a request to a RAG system, which, acting on the user’s behalf, retrieves documents that only the user is authorized to see in order to fulfill the request. Unfortunately, the request and response, which contain information related to the privileged document, are logged in a system with a different access level, thus leaking information.

Figure 2. An example of data leakage through logging

If RAG is being used to improve LLM responses, it’s important to track user authorization with respect to the documents being retrieved, and where the responses are being logged. The LLM should only be able to access documents that the current user is authorized to access. The completions (which by design incorporate some of the information contained in those access-controlled documents) should be logged in a manner such that unauthorized users cannot see the summaries of the sensitive documents.
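One way to keep logs inside the same permission boundary is to attach an access list to every log record, derived from the documents that fed the completion. The sketch below assumes each retrieved document carries a set of authorized user IDs. `LogRecord`, `make_log_record`, and `can_read` are hypothetical names, and real deployments often need additional roles such as auditors.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class LogRecord:
    user_id: str
    prompt: str
    completion: str
    readers: FrozenSet[str]  # who may view this record


def make_log_record(user_id: str, prompt: str, completion: str,
                    source_acls: Iterable[Iterable[str]]) -> LogRecord:
    """Restrict the record to users authorized for every source document."""
    acls = [frozenset(acl) for acl in source_acls]
    if any(user_id not in acl for acl in acls):
        # Retrieval should never have returned this document: fail closed.
        raise PermissionError("completion used a document the user cannot access")
    readers = frozenset.intersection(*acls) if acls else frozenset({user_id})
    return LogRecord(user_id, prompt, completion, readers)


def can_read(record: LogRecord, viewer_id: str) -> bool:
    return viewer_id in record.readers
```

Taking the intersection is the conservative choice: a completion may summarize any of its source documents, so a log reader needs access to all of them.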

It is therefore extremely important that authentication and authorization mechanisms are executed outside the context of the LLM. If relying on transmitting user context as part of the prompt, a sufficiently skilled attacker can use prompt injection to impersonate other users.

Finally, the behavior of any plug-ins should be scrutinized to ensure that they do not maintain any state that could lead to cross-user information leakage. For instance, if a search plug-in happens to cache queries, then the speed with which it returns information might allow an attacker to infer what topics other users of the application query most often.
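For the caching example, one possible fix is to scope cache entries to the user who created them, so that one user's queries never speed up another's. This is a sketch under that assumption. `PerUserCache` is a hypothetical name, and the cache is unbounded for brevity.

```python
from typing import Any, Callable, Dict, Tuple


class PerUserCache:
    """Cache keyed by (user, query), so hits never cross user boundaries."""

    def __init__(self) -> None:
        self._entries: Dict[Tuple[str, str], Any] = {}

    def get_or_compute(self, user_id: str, query: str,
                       compute: Callable[[str], Any]) -> Any:
        key = (user_id, query)
        if key not in self._entries:
            self._entries[key] = compute(query)
        return self._entries[key]
```

The trade-off is a lower hit rate. A shared cache is only safe when the cached data, and the fact that someone asked for it, are both public.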

LLM reliability

Despite significant improvements in the reliability and accuracy of LLM generations, they are still subject to some amount of random error. Because each word is sampled randomly from the set of possible next words, LLMs gain “creativity,” but the same randomness also increases the chance that they will produce incorrect results.
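The effect of random sampling can be illustrated with temperature-scaled softmax sampling, one common scheme. This post does not name a specific sampling method, so treat the choice as an assumption. The function names and the toy scores are hypothetical.

```python
import math
import random
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Convert raw scores to probabilities; higher temperature flattens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens: Sequence[str], logits: Sequence[float],
                 temperature: float, rng: random.Random) -> str:
    """Draw one token at random according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(list(tokens), weights=probs, k=1)[0]
```

With toy scores such as `[4.0, 2.0, 1.0]`, raising the temperature moves probability mass from the top-scoring token to the others. The output becomes more varied, and a lower-scoring and possibly incorrect token becomes more likely to be chosen.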

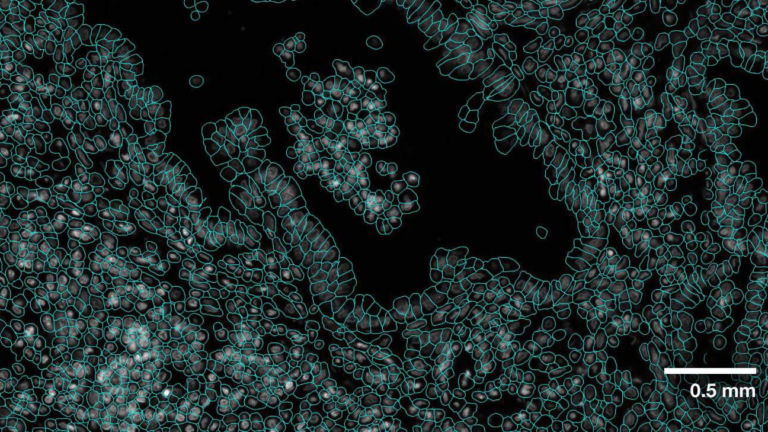

This has the potential to impact both users, who may act on inaccurate information, and downstream processes, plug-ins, or other computations that may fail or produce additional inaccurate results based on the inaccurate input (Figure 3).

Figure 3. An example of an LLM failing to complete a task and correctly answer a related question

Downstream processes and plug-ins must be designed with the potential for LLM errors in mind. As with prompt injection, good security design up front helps: parameterize plug-ins, sanitize inputs, handle errors robustly, and explicitly request user authorization before performing a sensitive operation. All of these approaches help mitigate the risks associated with LLMs.

In addition, ensure that any LLM orchestration layer can terminate early and inform the user in the event of an invalid request or LLM generation. This helps avoid compounding errors if a sequence of plug-ins is called. Compounding errors across LLM and plug-in calls is the most common way exploitation vectors are built for these systems. The standard practice of failing closed when bad data is identified should be used here.
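A minimal sketch of an orchestration loop that fails closed, assuming the LLM emits each plug-in call as a JSON object with `plugin` and `args` keys. That wire format, `InvalidGeneration`, `parse_plugin_call`, and `run_chain` are all hypothetical choices made for this example.

```python
import json
from typing import Any, Callable, Dict, List


class InvalidGeneration(Exception):
    """Raised when an LLM generation does not match the expected structure."""


def parse_plugin_call(raw: str, plugins: Dict[str, Callable[..., Any]]) -> dict:
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise InvalidGeneration("generation is not valid JSON") from exc
    if not isinstance(call, dict) or set(call) != {"plugin", "args"}:
        raise InvalidGeneration("generation has an unexpected structure")
    if not isinstance(call["plugin"], str) or call["plugin"] not in plugins:
        raise InvalidGeneration("unknown plug-in requested")
    if not isinstance(call["args"], dict):
        raise InvalidGeneration("plug-in arguments must be an object")
    return call


def run_chain(generations: List[str], plugins: Dict[str, Callable[..., Any]]) -> dict:
    """Run plug-in calls in order; stop at the first invalid step and report it."""
    completed: List[Any] = []
    for raw in generations:
        try:
            call = parse_plugin_call(raw, plugins)
            result = plugins[call["plugin"]](**call["args"])
        except (InvalidGeneration, TypeError, ValueError) as exc:
            # Fail closed: do not attempt later steps with bad data.
            return {"status": "stopped", "reason": str(exc), "completed": completed}
        completed.append(result)
    return {"status": "ok", "completed": completed}
```

The returned `reason` gives the application something concrete to show the user, and no later plug-in ever runs on the output of a step that failed validation.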

User education around the scope, reliability, and applicability of the LLM powering the application is important. Users should be reminded that the LLM-enabled application is intended to supplement—not replace—their skills, knowledge, and creativity. The final responsibility for the use of any result, LLM-derived or not, rests with the user.

Conclusion

LLMs can provide significant value to both users and the organizations that deploy them. However, as with any new technology, new security risks come along with them. Prompt injection techniques are the best known, and any application that includes an LLM should be designed with that risk in mind.

Less familiar security risks include the various forms of information leaks that LLMs can create, which require careful tracing of data flows and management of authorization. The occasionally unreliable nature of LLMs must also be considered, both from a user standpoint and from an application standpoint.

Making your application robust to both natural and malicious errors can increase its security. By considering the risks outlined in this post, and applying the mitigation strategies and best practices described, you can reduce your exposure to these risks and help ensure a successful deployment.

To learn more about attacking and defending machine learning models, check out the NVIDIA training at Black Hat Europe 2023.

Register for LLM Developer Day, a free virtual event on November 17, and join us for the session, Reinventing the Complete Cybersecurity Stack with AI Language Models.