NVIDIA Isaac Transport for ROS (NITROS) is the implementation of two hardware-acceleration features introduced with ROS 2 Humble: type adaptation and type negotiation.

Type adaptation enables ROS nodes to work in a data format optimized for a specific hardware accelerator. Processing graphs use the adapted type to eliminate memory copies between CPU memory and the accelerator's memory.
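The idea behind type adaptation can be sketched with a toy model. This is not the rclcpp or NITROS API: `RosImage`, `GpuImage`, `GpuImageAdapter`, and `deliver` are hypothetical names, and the "device memory" is just a Python dictionary. The sketch only shows that a conversion (and therefore a copy) happens when the receiving side cannot accept the adapted type.

```python
from dataclasses import dataclass


@dataclass
class RosImage:
    """Stand-in for a standard ROS message: pixel data held in CPU memory."""
    data: bytes


@dataclass
class GpuImage:
    """Hypothetical adapted type: a handle to a buffer that stays on the GPU."""
    device_ptr: int
    size: int


class GpuImageAdapter:
    """Toy adapter that counts how often data is converted between the two types."""

    def __init__(self):
        self.copies = 0
        self._device_memory = {}  # fake device memory: pointer -> bytes

    def upload(self, data: bytes) -> GpuImage:
        # Initial placement of data on the fake device (not counted as a conversion).
        ptr = len(self._device_memory) + 1
        self._device_memory[ptr] = data
        return GpuImage(device_ptr=ptr, size=len(data))

    def convert_to_ros_message(self, image: GpuImage) -> RosImage:
        self.copies += 1  # models a device-to-host copy
        return RosImage(data=self._device_memory[image.device_ptr])

    def convert_to_custom(self, msg: RosImage) -> GpuImage:
        self.copies += 1  # models a host-to-device copy
        return self.upload(msg.data)


def deliver(adapter, image, subscriber_accepts_adapted_type):
    """Hand over the adapted type directly when possible, otherwise convert."""
    if subscriber_accepts_adapted_type:
        return image
    return adapter.convert_to_ros_message(image)


adapter = GpuImageAdapter()
frame = adapter.upload(b"\x00" * 16)
same = deliver(adapter, frame, subscriber_accepts_adapted_type=True)
legacy = deliver(adapter, frame, subscriber_accepts_adapted_type=False)
print(adapter.copies)  # 1: only the legacy delivery needed a conversion
```

In this model the subscriber that accepts the adapted type receives the very same handle, so nothing is copied on that path.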

Through type negotiation, the ROS nodes in a processing graph advertise the types they support, and the ROS framework chooses the data formats that give the best performance.
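As a rough illustration, negotiation can be thought of as picking the most preferred type that both sides support. The sketch below is a deliberate simplification and not the actual ROS 2 negotiation protocol: the `negotiate` function, the ordering-by-preference scheme, and the type names in the lists are assumptions made for the example.

```python
def negotiate(publisher_types, subscriber_types, fallback="sensor_msgs/msg/Image"):
    """Return the publisher's most preferred type that the subscriber also supports.

    Both arguments are lists ordered from most to least preferred. If there is
    no common type, the (assumed) standard message type is used as a fallback.
    """
    supported = set(subscriber_types)
    for type_name in publisher_types:
        if type_name in supported:
            return type_name
    return fallback


# Illustrative type lists; the accelerated format is listed first.
nitros_node = ["nitros_image_rgb8", "sensor_msgs/msg/Image"]
legacy_node = ["sensor_msgs/msg/Image"]

print(negotiate(nitros_node, nitros_node))  # nitros_image_rgb8
print(negotiate(nitros_node, legacy_node))  # sensor_msgs/msg/Image
```

Two accelerated nodes settle on the accelerated format, while a pairing with a node that only knows the standard message settles on that standard message.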

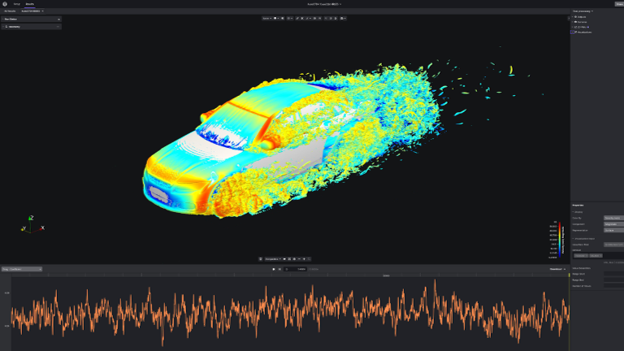

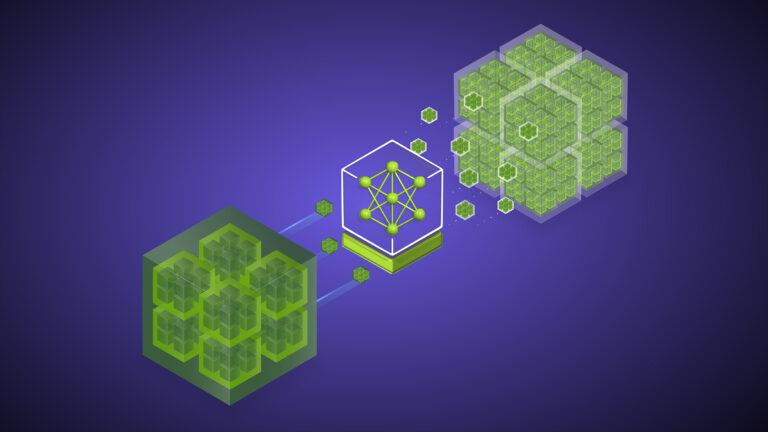

Figure 1. NITROS enables efficient acceleration by reducing memory copies between CPU and GPU

When two NITROS-capable ROS nodes are adjacent in a graph, they can discover each other through type negotiation and then use type adaptation to share data. Together, type adaptation and type negotiation significantly improve the performance of AI and computer vision tasks in ROS-based applications by removing unnecessary memory copies.

This reduces CPU overhead and optimizes performance on the underlying hardware. Figure 1 shows efficient hardware acceleration using NITROS: data stays accessible in GPU memory instead of being copied frequently through CPU memory.

You can use a combination of NITROS-based Isaac ROS nodes and other ROS nodes in your processing graphs, as the ROS framework maintains compatibility with legacy nodes that don’t support negotiation. A NITROS-capable node functions like a typical ROS 2 node while communicating with a non-NITROS node. Most Isaac ROS GEMs are NITROS-accelerated.

Learn more about NITROS and its system assumptions in the NVIDIA NITROS documentation.

NVIDIA CUDA with NITROS

NVIDIA CUDA is a parallel computing programming model that can drastically speed up functions in robotic systems with GPUs. Your custom ROS 2 nodes can use CUDA with NITROS through the Managed NITROS Publisher and Managed NITROS Subscriber.

CUDA code in a ROS node can share its output buffers in GPU memory with NITROS-capable Isaac ROS nodes using the Managed NITROS Publisher. This removes expensive CPU memory copies, improving performance as a result. NITROS also maintains compatibility with non-NITROS nodes, by publishing the same data as a normal ROS 2 message.

On the Subscriber side, CUDA code in a ROS node can receive input in GPU memory using the Managed NITROS Subscriber. Input can come from either a NITROS-capable Isaac ROS node or another CUDA-enabled ROS node using a Managed NITROS Publisher. Just like the Managed NITROS Publisher, this improves performance by letting the GPU and CPU work in parallel.

To understand this better, let’s consider an example graph performing DNN-based point cloud segmentation. At a high level, these are the three main components using CUDA with NITROS:

Encoder node with Managed NITROS Publisher to convert a sensor_msgs/PointCloud2 message into a NitrosTensorList

Isaac ROS TensorRT node to perform DNN inference, taking in an input NitrosTensorList and producing an output NitrosTensorList

Decoder node with Managed NITROS Subscriber to convert the output NitrosTensorList into a segmented sensor_msgs/PointCloud2 message

The Managed NITROS Publisher and Subscriber offer a familiar interface, comparable to the standard rclcpp::Publisher and rclcpp::Subscriber APIs, making integration with existing ROS 2 nodes intuitive. CUDA with NITROS also enables a more modular software design. With Managed NITROS Publishers and Subscribers, CUDA nodes can be used anywhere in a graph with Isaac ROS nodes and other CUDA nodes to get the advantages of accelerated computing in each node.

Digging a little deeper, NITROS is based on the NVIDIA Graph eXecution Framework (GXF), an extensible framework for building high-performance compute graphs. NITROS leverages GXF to achieve efficient ROS application graphs. CUDA with NITROS removes the need for developers to understand the inner workings of GXF before making their nodes NITROS-capable. The GXF layer is abstracted away, so you can write ROS 2 nodes as you usually do and enable NITROS with a few straightforward tweaks.

Learn more about the core concepts of CUDA with NITROS.

Currently, the Managed NITROS Publisher and Subscriber are only compatible with the Isaac ROS NitrosTensorList message type. Visit isaac_ros_nitros_type for a complete list of NITROS data types.

Object detection using CUDA with NITROS and YOLOv8

Isaac ROS provides a YOLOv8 sample showing how to use Managed NITROS utilities with your custom ROS decoders to take advantage of NITROS. This sample uses packages from Isaac ROS DNN Inference to perform TensorRT accelerated object detection using YOLOv8. The Managed NITROS Publisher and Subscriber use NITROS-typed messages and currently are only compatible with the Isaac ROS NitrosTensorList message type. This message type is used to share tensors between your nodes and Isaac ROS DNN Inference nodes.

Let’s say you want to use a custom object detection model with Isaac ROS DNN Inference and CUDA NITROS acceleration. There are three main steps involved in the detection pipeline: input image encoding, DNN inference, and output decoding. Isaac ROS DNN Inference has implementations for the first two steps.

In the decoding step, relevant information must be extracted from inferred results, which are tensors. For a task like 2D object detection, relevant information includes bounding boxes and class scores for each detected output in the image.
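To make the decoding step concrete, here is a minimal CPU-side sketch of extracting boxes and class scores from a raw output tensor. It assumes a channel-major layout of (4 + number of classes) rows by number of candidates, with the first four rows holding center x, center y, width, and height. That layout is an assumption about how a YOLOv8-style detection head is commonly exported, so check your own model's output shape. The function name `decode_candidates` is hypothetical.

```python
def decode_candidates(tensor, num_classes, num_candidates):
    """Turn a flat, channel-major tensor into (box, class_id, score) tuples.

    Assumed layout: (4 + num_classes) rows of num_candidates values each, where
    rows 0-3 hold center x, center y, width, height and the rest hold class scores.
    """
    def at(row, col):
        return tensor[row * num_candidates + col]

    detections = []
    for i in range(num_candidates):
        cx, cy, w, h = (at(r, i) for r in range(4))
        scores = [at(4 + c, i) for c in range(num_classes)]
        class_id = max(range(num_classes), key=scores.__getitem__)
        # Convert center/size to corner coordinates (x1, y1, x2, y2).
        box = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
        detections.append((box, class_id, scores[class_id]))
    return detections


# Two candidates, two classes; each row lists the value for candidate 0, then 1.
flat = [
    50.0, 20.0,  # center x
    40.0, 10.0,  # center y
    20.0, 4.0,   # width
    10.0, 6.0,   # height
    0.9, 0.1,    # class 0 scores
    0.2, 0.7,    # class 1 scores
]
decoded = decode_candidates(flat, num_classes=2, num_candidates=2)
for det in decoded:
    print(det)
```

A GPU decoder would perform the same indexing in a CUDA kernel directly on the buffer, rather than looping over a Python list.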

Let’s look into each step in some more detail.

Step 1: Encoding

On the input side, Isaac ROS provides a NITROS-accelerated DNN image encoder. This preprocesses input images and converts them into tensors, which are communicated through the isaac_ros_tensor_list type to the TensorRT or Triton nodes for inference.

You can specify parameters like image size and the input size your network expects, for various preprocessing functions like resizing. Note that you’ll need different encoders based on the task. For instance, you can’t use this image encoder with language models because the networks expect different input encodings.
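The kind of preprocessing described here can be sketched in plain Python. This is only an illustration of resize, normalize, and layout conversion on the CPU, not the Isaac ROS encoder itself: the `preprocess` function, nearest-neighbor resizing, and the `mean` and `std` parameters are assumptions chosen to keep the example short.

```python
def preprocess(image, out_h, out_w, mean=(0.0, 0.0, 0.0), std=(1.0, 1.0, 1.0)):
    """Resize an HWC uint8 image (nested lists) and return a CHW float tensor."""
    in_h, in_w = len(image), len(image[0])
    channels = len(image[0][0])
    tensor = []
    for c in range(channels):
        plane = []
        for y in range(out_h):
            src_y = y * in_h // out_h  # nearest-neighbor row lookup
            row = []
            for x in range(out_w):
                src_x = x * in_w // out_w  # nearest-neighbor column lookup
                value = image[src_y][src_x][c] / 255.0  # scale to [0, 1]
                row.append((value - mean[c]) / std[c])
            plane.append(row)
        tensor.append(plane)
    return tensor


# A 2x2 RGB image upscaled to the 4x4 input size a (tiny, made-up) network expects.
image = [
    [[255, 0, 0], [0, 255, 0]],
    [[0, 0, 255], [255, 255, 255]],
]
tensor = preprocess(image, out_h=4, out_w=4)
print(len(tensor), len(tensor[0]), len(tensor[0][0]))  # 3 4 4
```

The output is channel-first, which is the layout many vision networks expect, and its spatial size matches the network input rather than the camera resolution.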

Step 2: Inference

Isaac ROS provides two ROS nodes for DNN inference: the TensorRT node and the Triton node. Of these, the YOLOv8 sample currently uses the TensorRT node. You provide your trained model to the TensorRT node, which performs inference and outputs a tensor list containing the detection results.

This output tensor list is passed on to the decoder node. You can specify parameters like the dimensions and tensor names expected by the network. This information is easy to find in the ONNX model using tools like Netron.

Step 3: Decoding

The inferred output tensor from the TensorRT or Triton node must be parsed into the desired bounding box and class information. Let’s say you’ve written your model’s decoder as a ROS 2 node (not NITROS-capable yet). The decoder node doesn’t support NITROS-typed messages and expects a typical ROS 2 message from the inference node. This still works because NITROS maintains compatibility with non-NITROS nodes.

However, in this case, the output NITROS-typed message from the inference node (in GPU memory) is converted to a ROS 2 message and brought over to the CPU memory for the decoder to consume. This introduces some overhead as the data now lives in CPU memory, resulting in CPU memory copies while working with downstream ROS nodes.

Now let’s say you want to upgrade your decoder to communicate with the inference node (and other NITROS-accelerated nodes) through NITROS, instead of incurring the CPU memory copying cost. All the data stays in GPU memory in this case.

This is made easy by using the Managed NITROS Subscriber in your decoder node. It subscribes to the NITROS-typed output message from the inference node and uses NITROS Views to obtain the CUDA buffer containing the detection output. You can then apply your decoding logic to this data and publish the results through an appropriate ROS message type.

The YOLOv8 decoder can be configured with parameters such as the NMS threshold and the confidence threshold to filter candidate detections. A simple visualization node can subscribe to the resulting ROS message and draw bounding boxes on the input image. Note that Managed NITROS can only be integrated with C++ ROS 2 nodes.
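To show how the two thresholds interact, here is a minimal sketch of confidence filtering followed by greedy non-maximum suppression (NMS). It is a generic, class-agnostic version written for illustration, not the code used by the Isaac ROS YOLOv8 decoder. The function names and the threshold values in the usage example are assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, confidence_threshold, nms_threshold):
    """Keep confident boxes, then greedily suppress overlapping lower-scored ones."""
    candidates = sorted(
        (d for d in detections if d[1] >= confidence_threshold),
        key=lambda d: d[1],
        reverse=True,
    )
    kept = []
    for box, score in candidates:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(box, k[0]) <= nms_threshold for k in kept):
            kept.append((box, score))
    return kept


detections = [
    ((10, 10, 50, 50), 0.90),
    ((12, 12, 52, 52), 0.80),      # heavy overlap with the first box
    ((100, 100, 140, 140), 0.75),
    ((200, 200, 220, 220), 0.10),  # below the confidence threshold
]
kept = filter_detections(detections, confidence_threshold=0.25, nms_threshold=0.45)
print(kept)
```

Raising the confidence threshold drops more weak candidates before NMS runs, while lowering the NMS threshold makes suppression of overlapping boxes more aggressive.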

Isaac ROS NITROS bridge

If your robotics applications are currently based on ROS 1, you can still get the benefits of accelerated computing using the newly released Isaac ROS NITROS bridge. This is also helpful for developers using ROS 2 versions where type adaptation and negotiation aren’t available (pre-Humble versions).

To highlight the speedups achievable, the NITROS bridge moves 1080p images between ROS 1 Noetic and NITROS packages up to 2.5x faster than the ROS 1 bridge.

The ROS bridge includes a CPU-based memory copy cost, which the Isaac ROS NITROS bridge eliminates by moving data from CPU to GPU. This data can be used in place in GPU memory.
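A quick back-of-the-envelope calculation gives a feel for why these copies matter at 1080p. The pixel format (8-bit RGB) and the 30 frames-per-second rate are assumptions for the sake of the arithmetic, not figures from the benchmark.

```python
width, height, channels = 1920, 1080, 3  # 1080p, assumed 8-bit RGB
frames_per_second = 30                   # assumed camera rate

bytes_per_frame = width * height * channels
bytes_per_second = bytes_per_frame * frames_per_second

print(f"{bytes_per_frame / 1e6:.2f} MB per frame")   # 6.22 MB per frame
print(f"{bytes_per_second / 1e6:.1f} MB/s per copy")  # 186.6 MB/s per copy
```

Under these assumptions, every additional CPU copy along the path moves roughly 187 MB of data per second for a single image stream.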

The NITROS bridge consists of two converter nodes. One is used on the ROS 1 (for example, Noetic) side and the other on the ROS 2 (for example, Humble) side. Using the ROS bridge without NITROS converters results in images being sent from Noetic to Humble and back through copies across ROS processes in CPU memory, which increases latency. This problem is especially apparent between nodes sending large amounts of data, like segmented point clouds.

The NITROS bridge is designed to reduce end-to-end latency across ROS versions. Consider the same example, this time using NITROS converters. The converter on the Noetic side (Figure 10) moves the image to GPU memory, avoiding CPU memory copies over the bridge. The converter on the Humble side (Figure 10) converts the image in GPU memory to a NITROS image type that other NITROS-accelerated nodes can consume.

Things work similarly in the reverse direction: the image data is sent as a NITROS image from Humble, passes through the converter on each side, and arrives as an image in CPU-accessible memory in Noetic.

For more information about performance gains, visit Isaac ROS Benchmark for NITROS bridge and ros1_bridge. Note that the Isaac ROS NITROS bridge doesn’t support NVIDIA Jetson platforms yet.

Benefits of integrating ROS 2 nodes with NITROS

The following summarizes the many benefits of integrating your ROS 2 nodes with NITROS:

Improved performance by reducing CPU memory copies.

Compatibility with other non-NITROS ROS nodes such as RViz.

Easy integration of custom ROS 2 nodes with hardware-accelerated Isaac ROS nodes through Managed NITROS Publisher and Subscriber.

Modular software design using CUDA with NITROS.

Improved performance of applications based on earlier ROS versions using NITROS bridge.

Try accelerating your own ROS nodes using Isaac ROS NITROS and our YOLOv8 object detection sample!

Visit the NVIDIA Isaac ROS documentation page to learn more about our hardware-accelerated packages. Check out the Developer Forum for the latest information on Isaac ROS.