This week’s release features the NVIDIA-optimized Mamba-Chat model, which you can experience directly from your browser.

This post is part of Model Mondays, a program focused on enabling easy access to state-of-the-art community and NVIDIA-built models. These models are optimized by NVIDIA using TensorRT-LLM and offered as .nemo files for easy customization and deployment.

NVIDIA AI Foundation Models and Endpoints provides access to a curated set of community and NVIDIA-built generative AI models to experience, customize, and deploy in enterprise applications. If you haven’t already, try the leading models like Nemotron-3, Mixtral 8X7B, Llama 70B, and Stable Diffusion in the NVIDIA AI playground.

Mamba-Chat

The Mamba-Chat generative AI model, published by Haven, is a state-of-the-art language model built on the state-space model architecture, which distinguishes it from the transformer-based models that have dominated the field. This approach lets Mamba-Chat process long sequences more efficiently, avoiding a computational cost that grows quadratically with input length.

Instead, its architecture scales linearly with sequence length and incorporates a selection mechanism that lets the model keep or discard information depending on the input. This improves its ability to handle long, complex inputs efficiently.
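To make the scaling argument concrete, here is a minimal sketch of a one-dimensional linear state-space recurrence. It is an illustration only, not Mamba's actual implementation: the function names and the scalar parameters `a`, `b`, and `c` are assumptions chosen for readability, and the input-dependent selection mechanism is not modeled.

```python
from typing import List


def ssm_scan(xs: List[float], a: float, b: float, c: float) -> List[float]:
    """Run a scalar state-space recurrence over a sequence.

    h_t = a * h_{t-1} + b * x_t   (state update)
    y_t = c * h_t                 (readout)

    Each input is visited exactly once, so the work grows linearly
    with the sequence length.
    """
    h = 0.0  # hidden state, carried from one step to the next
    ys = []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys


def recurrent_updates(n: int) -> int:
    """Number of state updates the scan performs for n inputs."""
    return n


def attention_pairs(n: int) -> int:
    """Number of token pairs full (non-causal) self-attention compares."""
    return n * n


outputs = ssm_scan([1.0, 0.0, 0.0], a=0.5, b=1.0, c=2.0)
print(outputs)
print(recurrent_updates(1000), attention_pairs(1000))
```

The fixed-size state `h` is what lets the recurrence summarize everything seen so far without revisiting earlier tokens. In a selective state-space model, parameters such as `a` and `b` would depend on the current input rather than stay constant.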

The 2.8B-parameter model has demonstrated impressive performance across various tasks. It can also be fine-tuned for specific applications, such as cybersecurity, which shows its adaptability to specialized knowledge domains.

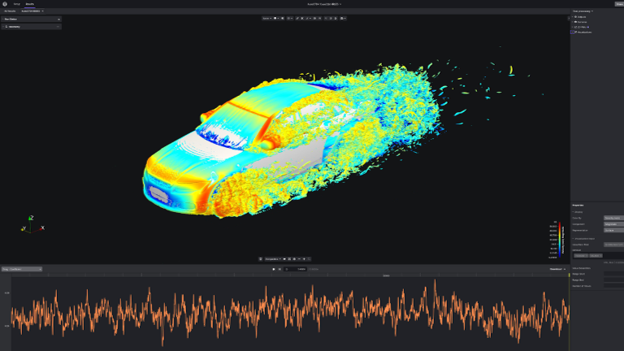

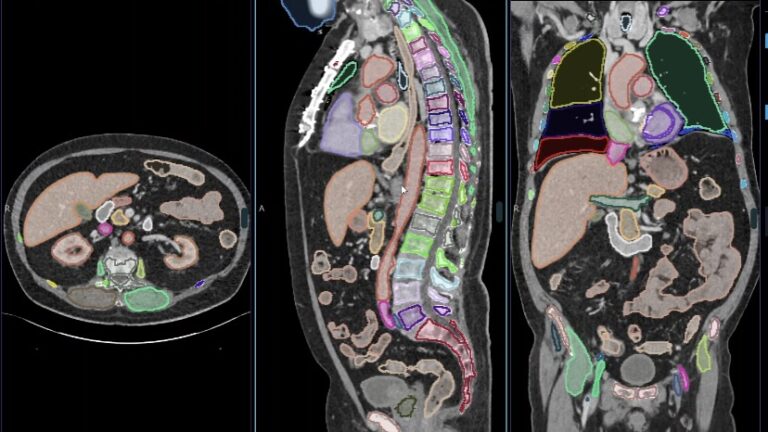

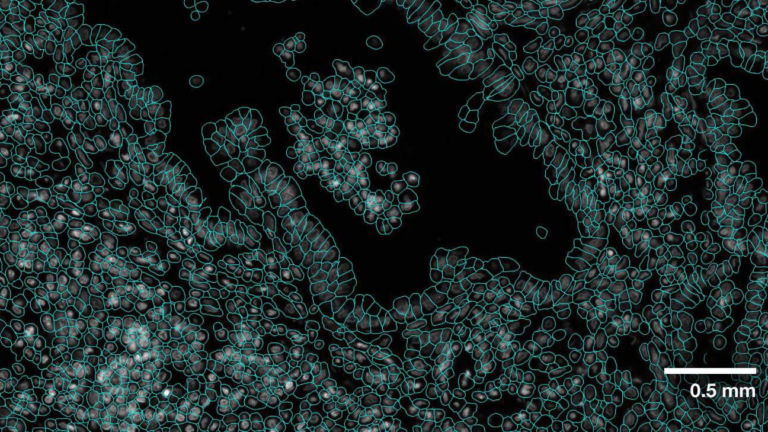

The model’s efficiency makes it suitable for a wide range of applications, from chatbot interactions to the analysis of genomic and time-series data.

Experience the model

NVIDIA has optimized Mamba-Chat, and you can now experience it directly from your browser through a simple user interface on the NGC catalog. In the Mamba-Chat playground, enter your prompts and see the results generated by the model running on a fully accelerated stack.

You can also use the API to test the model. Sign in to the NGC catalog, then access NVIDIA cloud credits to experience the model at scale by connecting your application to the API endpoint.
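As a rough sketch of what connecting an application to the endpoint might look like, the snippet below builds an authenticated HTTP request without sending it. Everything specific here is an assumption: `ENDPOINT_URL` is a hypothetical placeholder, the `messages` payload shape is a guess at a typical chat schema, and `build_request` is an illustrative helper. Use the URL, key, and request format shown in the NGC catalog for the real call.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the endpoint URL shown in the NGC catalog.
ENDPOINT_URL = "https://example.invalid/mamba-chat"


def build_request(prompt: str, api_key: str, url: str = ENDPOINT_URL) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request carrying one user prompt."""
    # Assumed payload shape; the actual schema is defined by the API documentation.
    payload = {"messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_request("What is a state-space model?", api_key="YOUR_API_KEY")
print(req.get_method(), req.full_url)
# Once the URL and key are real, the request could be sent with:
#   with urllib.request.urlopen(req) as resp:
#       print(resp.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it easy to inspect headers and payload before spending any cloud credits.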

Get started

NVIDIA AI Enterprise provides the security, support, stability, and manageability to improve the productivity of AI teams, reduce the total cost of AI infrastructure, and ensure a smooth transition from POC to production. Security, reliability, and enterprise support are critical when AI models are ready to deploy for business operations.

Try Mamba-Chat through the user interface or the API from the NGC catalog.