Data scientists are combining generative AI and predictive analytics to build the next generation of AI applications. In financial services, AI modeling and inference can be used for solutions such as alternative data for investment analysis, intelligent document automation, and fraud detection in trading, banking, and payments.

H2O.ai and NVIDIA are working together to provide an end-to-end workflow for generative AI and data science, using the NVIDIA AI Enterprise platform and H2O.ai’s LLM Studio and Driverless AI AutoML. H2O.ai also uses NVIDIA AI Enterprise to deploy next-generation AI inference, including large language models (LLMs) for safe and trusted enterprise-grade FinanceGPTs and custom applications at scale.

This integration aims to help organizations develop and deploy their own LLMs and customized models for various applications, beyond natural language processing (NLP), including image generation. These models enable the use of multiple modalities of content—such as text, audio, video, images, and code—to generate new content for broader applications.

There’s a pressing need for financial services industry (FSI) firms to use generative AI to accelerate the innovation that leads to new product opportunities and lower operational costs. Both regulated (banks, broker-dealers, asset managers, and insurers) and unregulated (hedge funds and proprietary traders) financial institutions are working on big-picture solutions that converge generative AI and data science applications.

This post provides an overview of data science use cases and the tools for building integrated applications in the following areas as financial institutions adopt the latest AI models:

New generative AI and LLM use cases

Accelerated inference for trading and risk

Intelligent automation and chatbot experiences

Data science and accelerated machine learning analytics on NVIDIA Triton Inference Server

Converged generative AI and predictive analytics

Generative AI and LLMs with accelerated inference for trading and risk

The holy grail of investing is to stay ahead of markets with real-time calculations and to tap into alternative data sources, which are typically unstructured. According to an IDC report on unstructured data, 70% or more of organizational information is unstructured, and organizations do not currently tap it.

Today, capital markets typically rely on traditional sources of tabular data called market data, which is stored in columns and rows. Newer, alternative data sources must be tapped to gain an information edge.

Alternative data sources

Alternative data is a category of unstructured information obtained from non-traditional sources, offering context unavailable through traditional market data. It can provide deeper insights, extend fundamental analysis (which is a qualitative process), and capture behavioral finance elements. Sources of alternative data can include any of the following:

Earnings call transcripts

Federal Reserve meeting notes

Social media

News

Satellite imagery

Financial filings

Alternative data uses this information to provide a deeper understanding of the financial health of a company or counterparty (Figure 2), for example by analyzing financial filings, and these insights can inform trading and risk management decisions. Investment leaders must analyze this unstructured alternative data to make timely, informed decisions and stay ahead of the market.

Impact on trading and risk

With generative AI and predictive analytics converging, financial firms are using NVIDIA and H2O.ai integrations to build their own generative AI NLP models and LLMs for alternative data, serving both internal needs and external customers. As a result, they are helping their customers outperform markets and fulfill their fiduciary duty to generate excess returns (alpha) while minimizing risk.

One key use case for generative AI NLP models and LLMs has been isolating signals from noisy, unstructured data and then indexing them to structured data, such as a universe of ticker symbols. These signals drive insights through sentiment analysis, question answering, and summarization (for example, your FirmGPT). This process can provide structured signals, similar to those from fundamental analysis, that downstream models can use to meet risk and return goals.
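To make the idea of indexing unstructured text to tickers concrete, here is a minimal Python sketch under stated assumptions. The `score_sentiment` function is a hypothetical placeholder for an LLM call (it uses a tiny keyword lexicon so the example runs offline), and `NewsItem` and `build_signals` are illustrative names, not part of any H2O.ai or NVIDIA API. The output is a per-ticker table of structured signals that a downstream model could consume.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

# Hypothetical lexicon standing in for an LLM sentiment model.
POSITIVE = {"beat", "upgrade", "growth", "record"}
NEGATIVE = {"miss", "downgrade", "lawsuit", "default"}


@dataclass
class NewsItem:
    ticker: str  # entity the text has been linked (indexed) to
    text: str    # raw, unstructured content


def score_sentiment(text: str) -> float:
    """Placeholder for an LLM call; returns a score in [-1, 1]."""
    words = text.lower().split()
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total


def build_signals(items: list[NewsItem]) -> dict[str, dict[str, float]]:
    """Aggregate per-item scores into one structured row per ticker."""
    scores: dict[str, list[float]] = defaultdict(list)
    for item in items:
        scores[item.ticker].append(score_sentiment(item.text))
    return {
        ticker: {"mean_sentiment": mean(vals), "n_items": float(len(vals))}
        for ticker, vals in scores.items()
    }


news = [
    NewsItem("AAA", "Earnings beat estimates on record growth"),
    NewsItem("AAA", "Analyst downgrade after weak guidance"),
    NewsItem("BBB", "Lawsuit filed over loan default"),
]
print(build_signals(news))
```

In a real pipeline, `score_sentiment` would be replaced by a call to a deployed LLM endpoint, and the signal table would typically be timestamped so that downstream models only see information available at decision time.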

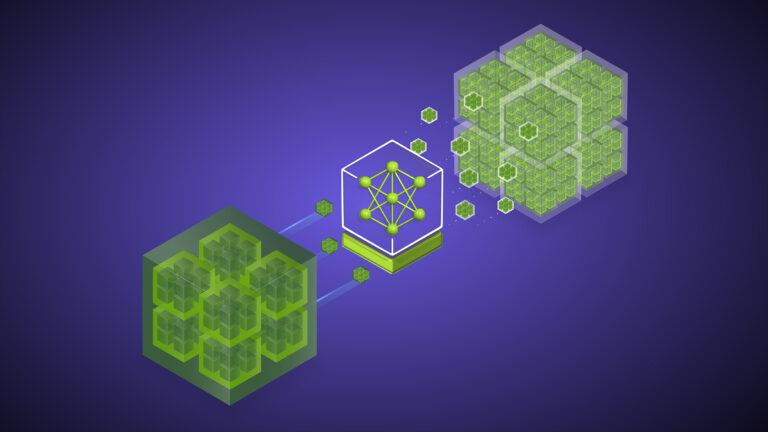

H2O.ai’s h2oGPT LLM, integrated with NVIDIA Triton Inference Server (part of the NVIDIA AI Enterprise platform), gives data scientists fast generative AI LLMOps capabilities to train and productionize applications at a lower operating cost, because customers can train and deploy multiple models within their own enterprises.

Organizations can apply foundation LLMs to unstructured sources of information, such as financial news, along with customization techniques that help the models better understand the financial domain.

End users can interface with customized models to source and validate ground truth responses by using retrieval-augmented generation (RAG).
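The retrieval step in RAG can be sketched as follows. This is an illustrative assumption, not the h2oGPT or NeMo Retriever implementation: it ranks documents by bag-of-words cosine similarity, whereas production systems typically use dense embeddings and a vector database. The retrieved passages are placed into the prompt so the model's answer can be checked against its sources.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Ground the question in retrieved passages so answers can be verified."""
    context = "\n".join(f"[{i + 1}] {d}" for i, d in enumerate(retrieve(query, docs, k)))
    return (
        "Answer using only the numbered context and cite the passages you use.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


docs = [
    "Q3 revenue rose 12 percent on strong card volume",
    "The bank opened a new branch in Sydney",
    "Q3 net interest margin narrowed",
]
print(build_prompt("What happened to Q3 revenue?", docs))
```

Numbering the passages lets an end user trace each claim in the answer back to a specific source document, which is the validation step the paragraph above describes.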

H2O.ai’s LLM solutions running on NVIDIA Triton Inference Server showed accelerated inference, with higher token throughput and lower latency.

Figure 3 shows the reduced total cost of ownership (TCO) with increased return on investment (ROI) for deploying and operating multiple LLMs. For more information, see NVIDIA TensorRT-LLM Enhancements Deliver Massive Large Language Model Speedups on NVIDIA H200.
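Per-token cost is one simple way to reason about TCO for LLM serving: cost per million tokens = hourly cost / (tokens per second × 3,600) × 1,000,000. The sketch below assumes a fixed hourly cost for the serving hardware and a sustained output throughput; both inputs are hypothetical parameters, not figures from the benchmark referenced above.

```python
def cost_per_million_tokens(hourly_cost: float, tokens_per_second: float) -> float:
    """Serving cost per 1M generated tokens at a sustained throughput.

    hourly_cost: cost of the serving hardware per hour (hypothetical input).
    tokens_per_second: sustained output throughput on that hardware.
    """
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost / tokens_per_hour * 1_000_000


# Hypothetical inputs: same hardware cost, throughput doubled by a faster runtime.
baseline = cost_per_million_tokens(hourly_cost=3.6, tokens_per_second=1_000)
optimized = cost_per_million_tokens(hourly_cost=3.6, tokens_per_second=2_000)
print(f"baseline: {baseline:.3f}, optimized: {optimized:.3f}")
```

Because the hardware cost is fixed per hour, doubling throughput on the same hardware halves the cost per token, which is the basic mechanism by which faster inference lowers TCO.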

Generative AI for intelligent automation and chatbot experiences

Generative AI LLMs also power chatbots for internal employees and external customers. Chatbots improve organizational productivity and operations, generating cost savings, increasing profit margins, enhancing the customer experience, and reducing customer churn.

In customer experiences through generative AI, the goal is to enable hyper-personalization and satisfaction (Figure 4), increasing the net promoter score (NPS) and customer satisfaction score (CSAT) for your organization.

For internal employee experiences, AI tools enhance productivity by helping to relay information to management for decision-making insights. Externally, AI tools can be used to cater to customers through agent assistants.

Customer spotlight: Commonwealth Bank of Australia

You can use H2O.ai guardrails and NVIDIA NeMo Guardrails with tools such as LangChain to develop more powerful custom GPT models with maximum flexibility. H2O.ai also offers model governance, guardrails, and explainability tools that help explain model behavior, all designed for customers in regulated environments.
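As a rough illustration of what guardrails do, the following sketch wraps a model call with an input check and an output filter. It is a hypothetical, rule-based example and does not use the NeMo Guardrails, LangChain, or H2O.ai APIs; the blocked topics, the account-number pattern, and the `fake_llm` stand-in are assumptions chosen only for demonstration.

```python
import re
from typing import Callable

# Hypothetical policy for illustration only.
BLOCKED_TOPICS = ("insider information", "guaranteed returns")
ACCOUNT_NUMBER = re.compile(r"\b\d{8,16}\b")  # assumed account-number format
REFUSAL = "Sorry, I can't help with that request."


def input_rail(prompt: str) -> bool:
    """Return True if the prompt is allowed to reach the model."""
    lowered = prompt.lower()
    return not any(topic in lowered for topic in BLOCKED_TOPICS)


def output_rail(answer: str) -> str:
    """Redact strings that look like account numbers before returning them."""
    return ACCOUNT_NUMBER.sub("[REDACTED]", answer)


def guarded_chat(prompt: str, llm: Callable[[str], str]) -> str:
    """Run the input rail, call the model, then run the output rail."""
    if not input_rail(prompt):
        return REFUSAL
    return output_rail(llm(prompt))


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model endpoint.
    return "Your account 12345678 is active."


print(guarded_chat("Is my account active?", fake_llm))
print(guarded_chat("Share insider information on AAA", fake_llm))
```

Dedicated guardrail frameworks typically express such policies declaratively and can use models, not just patterns, to classify inputs and outputs; the wrapping structure (check, call, filter) is the part this sketch is meant to show.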

H2O.ai has democratized generative AI so that enterprises can safely build their own state-of-the-art LLMs. Commonwealth Bank of Australia has used this to build its own GPT, tailored to what its customers need.

Both NVIDIA and H2O.ai believe every organization needs to own its LLM and GPT, just as it owns its brand, data, algorithms, and models. Open-source generative AI is democratizing the value of AI while preserving ownership of data, code, and content.

“We have a shared vision with H2O.ai for democratizing AI responsibly and, through our partnership, we are now able to create ground-up generative AI solutions that allow us to have true control of the way we use data, techniques, and training. Responsible AI is not only a question of bias and explainability. It is also about the accountability you take: for the way you develop it and the outcomes you deliver,” said Dan Jermyn, chief decision scientist at the Commonwealth Bank of Australia.

Data science and accelerated machine learning analytics on NVIDIA Triton Inference Server

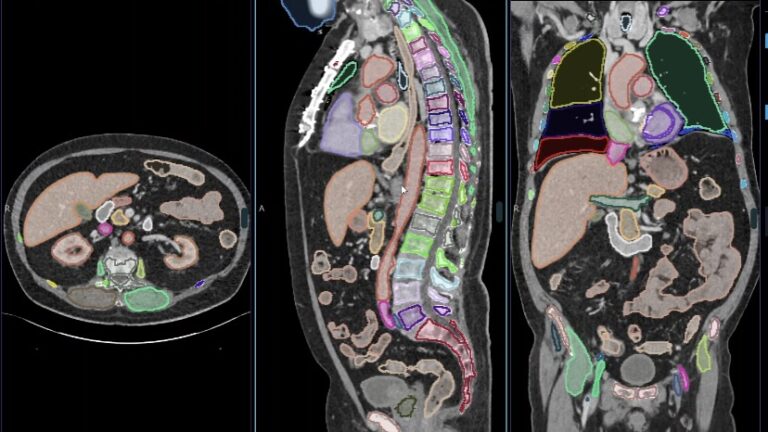

AI is revolutionizing risk assessment for credit, fraud modeling, and risk management using predictive techniques and algorithms such as XGBoost. Accelerated machine learning is applied in financial services for use cases such as predicting limit order book prices, underwriting credit products, and detecting fraud in financial transactions.

A fraud prediction pipeline typically contains not just one model but a series of models tied together, which provides richer insight into prospective customers. Many fraud models are based on behavioral attributes for various segments (Figure 5):

Customer’s geographic location vs. where the transaction happened

Transaction type (card present vs. card not present)

Average transaction size

Average transaction frequency over varied time windows and time aggregations (for example, 30/60/90 days vs. weekly/monthly/quarterly/annually)
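The rolling-window attributes in the list above can be computed with a short Python sketch. The `Txn` record, its field names, and the choice of windows that cover the N days ending on a reference date are assumptions for illustration; calendar aggregations (weekly, monthly, quarterly) would group transactions by period instead of looking back from a reference date.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Txn:
    customer_id: str
    day: date
    amount: float
    card_present: bool


def window_features(
    txns: list[Txn], customer_id: str, as_of: date, windows: tuple[int, ...] = (30, 60, 90)
) -> dict[str, float]:
    """Count, average amount, and card-not-present share over trailing windows.

    Each window covers the N days ending on `as_of` (inclusive).
    """
    history = [t for t in txns if t.customer_id == customer_id and t.day <= as_of]
    features: dict[str, float] = {}
    for days in windows:
        start = as_of - timedelta(days=days)
        recent = [t for t in history if t.day > start]
        n = len(recent)
        features[f"txn_count_{days}d"] = float(n)
        features[f"avg_amount_{days}d"] = sum(t.amount for t in recent) / n if n else 0.0
        features[f"cnp_share_{days}d"] = sum(not t.card_present for t in recent) / n if n else 0.0
    return features


txns = [
    Txn("C1", date(2024, 3, 25), 100.0, True),
    Txn("C1", date(2024, 2, 15), 300.0, False),
    Txn("C1", date(2024, 1, 10), 50.0, True),
    Txn("C2", date(2024, 3, 30), 999.0, False),
]
print(window_features(txns, "C1", as_of=date(2024, 3, 31)))
```

Comparing the same attribute across windows (for example, a 30-day average well above the 90-day average) is one way such features can surface a change in behavior.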

These attributes are based on business rules or predictive feature characteristics. For example, if the same merchant runs increasingly large transactions over a short period, the model can flag the transaction as potential fraud.
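The escalating-merchant example can be expressed as a simple velocity rule. This sketch flags a merchant when at least `min_run` consecutive transactions increase strictly in amount within a time window. The window length, run length, and `MerchantTxn` record are hypothetical defaults, and a production system would more likely feed such a rule's output into a model as a feature than use it as the sole decision.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class MerchantTxn:
    merchant_id: str
    ts: datetime
    amount: float


def flag_escalating(
    txns: list[MerchantTxn], window: timedelta = timedelta(hours=1), min_run: int = 3
) -> set[str]:
    """Flag merchants with >= min_run consecutive, strictly increasing amounts inside `window`."""
    if min_run < 2:
        raise ValueError("min_run must be at least 2")
    by_merchant: dict[str, list[MerchantTxn]] = {}
    for t in txns:
        by_merchant.setdefault(t.merchant_id, []).append(t)

    flagged: set[str] = set()
    for merchant, items in by_merchant.items():
        items.sort(key=lambda t: t.ts)
        run_start = 0  # index of the first transaction in the current increasing run
        for i in range(1, len(items)):
            if items[i].amount <= items[i - 1].amount:
                run_start = i  # escalation broken; start a new run here
            # Drop transactions from the front of the run that fall outside the window.
            while items[i].ts - items[run_start].ts > window:
                run_start += 1
            if i - run_start + 1 >= min_run:
                flagged.add(merchant)
                break
    return flagged


t0 = datetime(2024, 1, 1, 12, 0)
txns = [
    # M1: three rising amounts within 20 minutes
    MerchantTxn("M1", t0, 10.0),
    MerchantTxn("M1", t0 + timedelta(minutes=10), 50.0),
    MerchantTxn("M1", t0 + timedelta(minutes=20), 200.0),
    # M2: the same amounts, spread over four hours
    MerchantTxn("M2", t0, 10.0),
    MerchantTxn("M2", t0 + timedelta(hours=2), 50.0),
    MerchantTxn("M2", t0 + timedelta(hours=4), 200.0),
]
print(flag_escalating(txns))
```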

In anti-money laundering (AML) and transaction fraud, the key is to know your customer (KYC) and know your customer’s customer (KYCC). You establish their identity and understand the behavioral attributes of customers and other agents, including fraud perpetrators.

Each set of models uses AI techniques, such as intelligent automation, to learn more about customers and other actors. This helps determine whether a customer’s identity has been compromised, so the transaction can be scored more accurately. More accurate scores improve the reliability of transactions and provide a safe, secure experience for customers. Transactions are stopped based on KYC identity verification, behavioral attributes, and whether a fraudster or the real customer is performing the transaction.

AML and fraud detection empower financial institutions to stay ahead of emerging threats. Multiple factors play a key role in preventing fraud in financial applications:

Intelligent document automation, in which generative AI models process more diverse information to establish identity

Behavioral attributes

Traditional data science
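One simple way to combine the factors above is a weighted blend of per-model scores with a hard override for suspected identity compromise. This is a hypothetical sketch, not H2O.ai's or NVIDIA's scoring logic. The weights, thresholds, and three-score structure are assumptions, and real pipelines often chain models or learn the combination (for example, by stacking) instead of hand-setting weights.

```python
from dataclasses import dataclass


@dataclass
class RiskScores:
    identity: float    # from document/identity verification, in [0, 1]
    behavioral: float  # from a behavioral-attribute model, in [0, 1]
    tabular: float     # from a traditional tabular model, in [0, 1]


# Hypothetical weights and thresholds; in practice these would be tuned and governed.
WEIGHTS = {"identity": 0.4, "behavioral": 0.3, "tabular": 0.3}
REVIEW_THRESHOLD = 0.5
BLOCK_THRESHOLD = 0.8


def combined_score(s: RiskScores) -> float:
    """Weighted blend of the three model scores."""
    return (
        WEIGHTS["identity"] * s.identity
        + WEIGHTS["behavioral"] * s.behavioral
        + WEIGHTS["tabular"] * s.tabular
    )


def decide(s: RiskScores) -> str:
    """Map scores to an action: approve, review, or block."""
    if s.identity >= BLOCK_THRESHOLD:
        return "block"  # a likely identity breach overrides the blended score
    score = combined_score(s)
    if score >= BLOCK_THRESHOLD:
        return "block"
    if score >= REVIEW_THRESHOLD:
        return "review"
    return "approve"


print(decide(RiskScores(identity=0.5, behavioral=0.6, tabular=0.6)))
```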

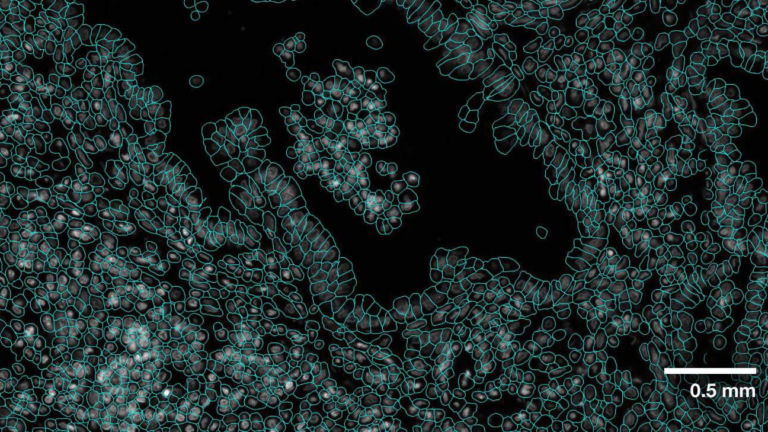

H2O.ai Driverless AI has integrated NVIDIA Triton Inference Server, RAPIDS cuML, and RAPIDS cuDF (Figure 6), enabling financial institutions to accelerate their fraud detection applications.

Converged generative AI and predictive analytics

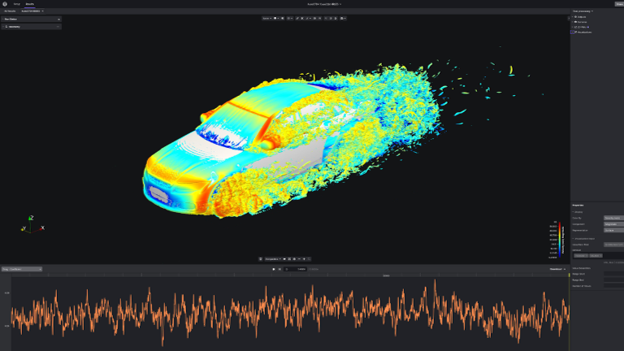

We are increasingly seeing end customers’ data science and AI requirements converge. AI applications based on LLMs, even those built on unstructured, multimodal data such as text, images, and audio (Figure 7), have to be tied to tabular data science solutions. End financial solutions combine data science techniques and accelerated predictive analytics, such as XGBoost, with emerging generative AI solutions.
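A minimal sketch of this convergence: a signal derived from unstructured data (for example, an LLM sentiment score per ticker) is appended to each entity's tabular feature vector before scoring. The feature names, the neutral default for tickers without LLM coverage, and the logistic `predict` function with hand-supplied weights are assumptions for illustration; in practice the tabular model might be a gradient-boosted model such as XGBoost. The sketch also assumes every entity has the same tabular feature names.

```python
import math


def merge_features(
    tabular: dict[str, dict[str, float]],
    llm_signals: dict[str, float],
    neutral: float = 0.0,
) -> dict[str, list[float]]:
    """Append an LLM-derived signal to each entity's tabular features.

    Features are ordered by name so every vector has the same layout;
    entities without LLM coverage get a neutral value.
    """
    return {
        key: [feats[name] for name in sorted(feats)] + [llm_signals.get(key, neutral)]
        for key, feats in tabular.items()
    }


def predict(vector: list[float], weights: list[float], bias: float) -> float:
    """Logistic score from a hypothetical, already-trained linear model."""
    if len(vector) != len(weights):
        raise ValueError("feature vector and weights must have the same length")
    z = bias + sum(w * x for w, x in zip(weights, vector))
    return 1.0 / (1.0 + math.exp(-z))


tabular = {
    "AAA": {"momentum": 0.2, "volatility": 0.1},
    "BBB": {"momentum": -0.1, "volatility": 0.3},
}
llm_sentiment = {"AAA": 0.5}  # e.g., mean news sentiment; BBB has no coverage
merged = merge_features(tabular, llm_sentiment)
weights = [1.0, -2.0, 0.8]  # hypothetical: momentum, volatility, sentiment
for ticker, vec in merged.items():
    print(ticker, vec, round(predict(vec, weights, bias=0.0), 3))
```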

There is a convergence of new generative AI greenfield solutions with legacy brownfield data science solutions to make end-user applications more productive and AI-savvy.

Customer spotlight: North American Bancard

This is reflected at North American Bancard (NAB), which is exploring opportunities to seamlessly integrate more of H2O.ai’s next-gen LLM-powered applications into its operations driven by a shared alignment on goals and vision. This partnership promises to yield significant benefits in optimizing NAB’s operations, streamlining its business processes, and automating internal workflows. Ultimately, this enables greater fulfillment and productivity.

“H2O.ai consistently demonstrates a vested interest in our continued success and is ready to explore new ways to leverage LLM-powered solutions to enable greater fulfillment and productivity,” said Jeffrey Vagg, chief data and analytics officer at NAB.

Vagg envisions that generative AI has the potential to elevate NAB’s performance, enhance merchant support, and bolster fraud detection capabilities, effectively identifying malicious actors attempting to exploit vulnerabilities within the payments industry.

“We believe every organization can safely create and own their own LLMs to bring transformation to their customers,” Vagg added. The depth of trust NAB places in H2O.ai is proving instrumental in navigating the intricacies of generative AI.

Working with H2O.ai, NVIDIA provides the key elements customers need to succeed, with aligned goals for creating and customizing their own LLMs.

Blocking issues and solutions

Financial firms tend to fall into two camps: regulated and unregulated. Regulated institutions are typically large financial institutions, such as banks, that deploy AI at scale across multiple business units. At the other end, unregulated institutions include hedge funds and proprietary trading firms, which tend to be more advanced in adopting technologies. Though regulated institutions initially lagged in technology adoption, the gap is narrowing.

Another blocker for AI adoption in financial services has been the ability to customize AI models to the financial domain and to financial applications to deliver better accuracy. Legacy data science applications have traditionally been based on tabular data, and banks are required to productionize these models in a compliant manner.

Both regulated and unregulated institutions want to be able to harness their data science resources, which are scarce and widely sought after. They want to build financial AI applications with maximum accuracy for the financial domain and make the best use of their time and productivity.

As the customer stories show, both customers have adopted LLMOps and MLOps solutions and need productivity tools to reach enterprise production use cases in financial services faster. This calls for development productivity accelerators that bring these diverse solutions together, integrating generative AI with data science and analytic models into a single application workflow.

H2O.ai and NVIDIA make it possible to develop integrated, financial domain-specific applications in less time, harnessing NVIDIA AI Enterprise together with H2O.ai LLM Studio and H2O.ai Driverless AI. NVIDIA AI Enterprise supports accelerated, high-performance inference with the following:

NVIDIA Triton Inference Server

Other NVIDIA AI software

H2O.ai’s LLM Studio is an open-source, no-code solution that can empower organizations to fine-tune and evaluate LLMs. Financial institutions can customize models to their domain with NVIDIA NeMo, a framework for fine-tuning models with various customization techniques, such as prompt learning, instruction tuning, and reinforcement learning from human feedback (RLHF), as shown in Figure 8.
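Instruction tuning starts from a dataset of prompt and response pairs. The sketch below cleans question-and-answer pairs (dropping empty pairs and repeated questions) and serializes them as JSON Lines. The `prompt` and `answer` field names and the optional instruction prefix are placeholders; H2O.ai LLM Studio and NVIDIA NeMo each document their own expected input schema, so check that before training.

```python
import json


def build_instruction_records(
    pairs: list[tuple[str, str]], instruction: str = ""
) -> list[dict[str, str]]:
    """Turn (question, answer) pairs into prompt/answer records for fine-tuning.

    Empty pairs and repeated questions are dropped so duplicates do not
    overweight a single example.
    """
    seen: set[str] = set()
    records: list[dict[str, str]] = []
    for question, answer in pairs:
        q, a = question.strip(), answer.strip()
        if not q or not a or q in seen:
            continue
        seen.add(q)
        prompt = f"{instruction}\n\n{q}" if instruction else q
        records.append({"prompt": prompt, "answer": a})  # placeholder field names
    return records


def to_jsonl(records: list[dict[str, str]]) -> str:
    """One JSON object per line (JSON Lines)."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


pairs = [
    ("What does KYC stand for?", "Know your customer."),
    ("What does KYC stand for?", "Duplicate question, dropped."),
    ("", "Missing question, dropped."),
    ("Define alpha.", "Return in excess of a benchmark."),
]
records = build_instruction_records(pairs, instruction="Answer as a financial analyst.")
print(to_jsonl(records))
```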

h2oGPT APIs are built sustainably on open source, the most responsible way forward for regulated industries like financial services.

Developers have total control and customization over their AI models. This level of customization and control is unmatched by anything in the market today.

Models and a customization framework can efficiently accomplish tasks for your domain, with your own intellectual property ready for distribution and monetization, at a fraction of the cost of customizing, running, and operating proprietary LLMs.

Customers can use h2oGPT built-in guardrails along with NeMo Guardrails to empower enterprise AI use cases.

NVIDIA NeMo Retriever

With H2O.ai’s built-in RAG, you can seamlessly integrate generative AI models with your existing data stores. H2O.ai, along with NVIDIA NeMo Retriever, can use NVIDIA-optimized RAG capabilities.

NeMo Retriever is a generative AI microservice that lets enterprises connect custom LLMs to enterprise data to deliver highly accurate responses for their AI applications. It is part of the NVIDIA AI Enterprise software platform.

Developers using the microservice can connect their AI applications to business data wherever it resides, bringing compute and software tooling to the data. This can help companies develop and run NVIDIA-accelerated inference applications in virtually any data center or cloud.

Summary

NVIDIA and H2O.ai together offer maximum flexibility and the tools to develop generative AI applications for end customers. This provides a quick path to develop and productionize converged AI and data science applications, solving a significant bottleneck for enterprises.

As a result, you can develop your own LLMs where the intellectual property and the ability to monetize and redistribute resides with you. You can turn existing investments, data, and resources into revenue centers, realizing returns out of AI investments and subsequently investing in further AI projects.

Together, this maximizes ROI, lowers TCO, and offers enhanced productivity with the flexibility and choice in tooling and framework to build converged applications, offering unprecedented value to our end customers.