Consider a large language model (LLM) application that is designed to help financial analysts answer questions about the performance of a company. With a well-designed retrieval-augmented generation (RAG) pipeline, analysts can answer questions like, “What was X corporation’s total revenue for FY 2022?” A seasoned analyst can easily extract this information from financial statements.

Now consider a question like, “What were the three takeaways from the Q2 earnings call from FY 23? Focus on the technological moats that the company is building.” Answering this clearly requires more than a simple lookup from an earnings call. It requires planning, tailored focus, memory, and breaking down a complex question into simpler sub-parts. This is also the type of question a financial analyst would want answered for their reports but would need to invest time to answer.

This is where a conversation about agents is needed.

In this post, I introduce LLM-powered agents and discuss what an agent is and some use cases for enterprise applications. For more information, see Building Your First Agent Application. In that post, I offer an ecosystem walkthrough, covering the available frameworks for building AI agents and a getting started guide for anyone experimenting with question-and-answer (Q&A) agents.

What is an AI agent?

While there isn’t a widely accepted definition for LLM-powered agents, an agent can be described as a system that uses an LLM to reason through a problem, create a plan to solve it, execute the plan, and re-evaluate the plan if issues arise during execution.

In short, an agent is a system with complex reasoning capabilities, memory, and the means to execute tasks.

This capability was first observed in projects like AutoGPT or BabyAGI, where complex problems were solved without much intervention. To describe agents a bit more, here’s the general architecture of an LLM-powered agent application (Figure 1).

An agent is made up of the following key components:

Agent core

Memory module

Tools

Planning module

Each component has some degree of complexity that is essential to grasp when considering the inner workings of an agent.

Agent core

The agent core is essentially an LLM that follows instructions:

General goals and instructions

Instructions for using tools for execution

Explanation for how to make use of the planning module

Directions for accessing memory

Persona design

Assigning a persona is an optional step for AI agents, used when needed to imbue a personality or general behavioral descriptions. For instance, to build an agent that can be used to generate code, you can give it the anthropomorphic persona of a software engineer, and ask it to try emulating the behavior and reasoning process of a typical software developer (Figure 2).

This persona description is typically used to either bias the model to prefer using certain types of tools or to imbue typical idiosyncrasies in the agent’s final response.

Persona imbuement doesn’t negate the need for a planning module. Rather, it just changes the semantics of the responses given by the AI agent.
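As a rough illustration of how the pieces of the agent core could be combined, here is a minimal sketch that assembles them into a single prompt string. The `AgentCoreConfig` class, the `build_core_prompt` function, and the section labels are hypothetical names chosen for this example, not part of any specific framework.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentCoreConfig:
    """Hypothetical container for the parts of an agent core prompt."""
    goals: str
    tool_instructions: dict = field(default_factory=dict)  # tool name -> usage note
    planning_instructions: str = ""
    memory_instructions: str = ""
    persona: Optional[str] = None  # optional, as described above


def build_core_prompt(config: AgentCoreConfig) -> str:
    """Join the non-empty sections into one system prompt."""
    sections = []
    if config.persona:
        sections.append(f"Persona:\n{config.persona}")
    sections.append(f"Goals:\n{config.goals}")
    if config.tool_instructions:
        tool_lines = "\n".join(
            f"- {name}: {usage}" for name, usage in config.tool_instructions.items()
        )
        sections.append(f"Tools:\n{tool_lines}")
    if config.planning_instructions:
        sections.append(f"Planning:\n{config.planning_instructions}")
    if config.memory_instructions:
        sections.append(f"Memory:\n{config.memory_instructions}")
    return "\n\n".join(sections)


# Example: a code-generation agent with a software engineer persona.
example_config = AgentCoreConfig(
    goals="Write and explain code that solves the user's task.",
    tool_instructions={"code_interpreter": "Run code to check it before answering."},
    persona="You are a careful software engineer who explains trade-offs.",
)
print(build_core_prompt(example_config))
```

Because the persona is just another section of the prompt, omitting it leaves the goals, tools, planning, and memory instructions unchanged.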

Memory module

Memory modules play a critical role in AI agents. A memory module can essentially be thought of as a store of logs of the agent’s thoughts and interactions with a user.

There are two types of memory modules:

Short-term memory: A ledger of actions and thoughts that an agent goes through to attempt to answer a single question from a user: the agent’s “train of thought.”

Long-term memory: A ledger of actions and thoughts about events that happen between the user and agent. It is a log book that contains a conversation history stretching across weeks or months.

Memory retrieval requires more than semantic similarity alone. Typically, stored records are ranked by a composite score that combines semantic similarity, importance, recency, and other application-specific metrics, and the highest-scoring records are retrieved.
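One way to picture such a composite score is as a weighted sum of its components. The sketch below is only an assumption about how this could be done: the equal default weights, the exponential recency decay with a 24-hour half-life, and the names `MemoryRecord`, `composite_score`, and `retrieve` are all illustrative choices, not a prescribed method.

```python
import math
from dataclasses import dataclass


@dataclass
class MemoryRecord:
    text: str
    embedding: list    # vector from some embedding model (assumed to exist)
    importance: float  # assumed to be pre-scored in [0, 1]
    age_hours: float   # hours since the record was created or last used


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def composite_score(query_embedding, record, w_sim=1.0, w_imp=1.0, w_rec=1.0,
                    half_life_hours=24.0):
    """Weighted sum of similarity, importance, and an exponentially decayed recency."""
    similarity = cosine_similarity(query_embedding, record.embedding)
    recency = 0.5 ** (record.age_hours / half_life_hours)
    return w_sim * similarity + w_imp * record.importance + w_rec * recency


def retrieve(query_embedding, records, k=3):
    """Return the k records with the highest composite score."""
    ranked = sorted(records, key=lambda r: composite_score(query_embedding, r),
                    reverse=True)
    return ranked[:k]
```

With these defaults, two records that are equally similar and equally important are ordered by recency, so the newer one is retrieved first. The weights would need tuning for a real application.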

Tools

Tools are well-defined executable workflows that agents can use to execute tasks. Oftentimes, they can be thought of as specialized third-party APIs:

RAG pipelines

A calculator

A code interpreter

APIs for Outlook

Search APIs

Weather APIs

Any other well-defined LLM pipeline

In some cases, even other agents can be tools.
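To make the idea of tools as well-defined, callable workflows more concrete, here is a minimal sketch of a tool registry. The `Tool` class, the `TOOLS` dictionary, and the `call_tool` and `describe_tools` helpers are hypothetical names for this example, and the toy calculator stands in for any real API.

```python
import operator
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str           # shown to the LLM so it knows when to use the tool
    run: Callable[[str], str]  # takes a text input, returns a text result


OPERATIONS = {"+": operator.add, "-": operator.sub,
              "*": operator.mul, "/": operator.truediv}


def calculator(expression: str) -> str:
    """Toy calculator that only handles 'a op b', for example '2 + 3'."""
    left, op, right = expression.split()
    return str(OPERATIONS[op](float(left), float(right)))


TOOLS = {
    tool.name: tool
    for tool in [Tool("calculator", "Evaluates 'a op b' arithmetic.", calculator)]
}


def describe_tools() -> str:
    """Render the tool list for inclusion in the agent core prompt."""
    return "\n".join(f"{t.name}: {t.description}" for t in TOOLS.values())


def call_tool(name: str, tool_input: str) -> str:
    """Dispatch a tool call chosen by the agent, with a readable error if unknown."""
    if name not in TOOLS:
        return f"Unknown tool: {name}"
    return TOOLS[name].run(tool_input)


print(call_tool("calculator", "2 + 3"))  # prints 5.0
```

Since every tool shares the same text-in, text-out interface in this sketch, a RAG pipeline or even another agent could be registered in the same way.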

Planning module

Complex problems, such as analyzing a set of financial reports to answer a layered business question, often require nuanced approaches. With an LLM-powered agent, this complexity can be dealt with by using a combination of two techniques:

Task and question decomposition

Reflection or critic

Task and question decomposition

Compound questions or inferred information require some form of decomposition. Take, for instance, the question, “What were the three takeaways from the last all-hands company meeting at NVIDIA?”

The information required to answer this question is not directly extractable from the transcript of a 2-hour long meeting. However, the problem can be broken down into multiple question topics:

“Which technological shifts were discussed the most?”

“Is there a change in hiring policy?”

“What is the general employee sentiment?”

Each of these questions can be further broken into subparts. That said, a specialized AI agent must guide this decomposition.

Reflection or critic

Techniques like ReAct, Reflexion, Chain of Thought, and Graph of Thoughts have served as critic- or evidence-based prompting frameworks. They have been widely used to improve the reasoning capabilities and responses of LLMs. These techniques can also be used to refine the execution plan generated by the agent.
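The refinement idea can be sketched as a generic draft, critique, and revise loop. This is not an implementation of any one of the named techniques. The `refine_with_critic` function and its `generate` and `critique` callables are hypothetical, and in practice both callables would wrap LLM calls rather than the simple stubs used here.

```python
from typing import Callable, Optional


def refine_with_critic(
    task: str,
    generate: Callable[[str, Optional[str]], str],
    critique: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> str:
    """Draft a plan, then revise it until the critic has no feedback or rounds run out.

    generate(task, feedback) returns a draft; feedback is None for the first draft.
    critique(task, draft) returns feedback text, or None if the draft is acceptable.
    """
    draft = generate(task, None)
    for _ in range(max_rounds):
        feedback = critique(task, draft)
        if feedback is None:
            break
        draft = generate(task, feedback)
    return draft


# Stub callables standing in for LLM calls (assumptions for illustration only).
def stub_generate(task, feedback):
    return "plan" if feedback is None else "plan with sources"


def stub_critique(task, draft):
    return None if "sources" in draft else "Cite the sources for each step."


print(refine_with_critic("Summarize the earnings call", stub_generate, stub_critique))
```

The `max_rounds` cap is a design choice that keeps the loop from running indefinitely when the critic is never satisfied.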

Agents for enterprise applications

While the full extent of possible applications for agents is extensive, the following applications show promise in enterprise settings:

Question-answering agent

Swarm of agents

Recommendation and experience design agents

Customized AI author agents

Multi-modal agents

This compilation is a result of mining community sentiment and extracting information from applications, along with new startups forming in the ecosystem.

Question-answering agent

“Talk to your data” isn’t a simple problem. There are a lot of challenges that a straightforward RAG pipeline can’t solve:

Semantic similarity of source documents

Complex data structures, like tables

Lack of apparent context (not every chunk contains markers for its source)

The complexity of the questions that users ask

…and more

For instance, go back to the earlier earnings call transcript example (Q3 2023 and Q1 2024). How do you answer the question, “How much did the data center revenue increase between Q3 of 2023 and Q1 of 2024?” To answer this question, you essentially must answer three questions individually:

What was the data center revenue in Q3 of 2023?

What was the data center revenue in Q1 of 2024?

What was the difference between the two?

In this case, you would need an agent that has access to a question-decomposition module to plan out the sub-questions, a RAG pipeline (used as a tool) to retrieve specific information, and memory modules to accurately handle the subquestions. In the LLM-Powered Agents: Building Your First Agent Application post, I go over this type of case in detail.
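The flow described above can be sketched end to end with stand-ins for each component. Everything here is an assumption for illustration: `decompose` returns a hard-coded plan where a real agent would ask an LLM, `rag_lookup` reads from a tiny dictionary instead of a RAG pipeline, and the revenue figures are placeholders, not real financial data.

```python
# Placeholder figures for illustration only; these are NOT real financial data.
PLACEHOLDER_STORE = {
    ("data center revenue", "Q3 2023"): 100.0,
    ("data center revenue", "Q1 2024"): 150.0,
}


def rag_lookup(metric: str, quarter: str) -> float:
    """Stand-in for a RAG pipeline used as a tool."""
    return PLACEHOLDER_STORE[(metric, quarter)]


def decompose(question: str) -> list:
    """Stand-in for a question-decomposition module (hard-coded plan)."""
    return [
        ("lookup", "data center revenue", "Q3 2023"),
        ("lookup", "data center revenue", "Q1 2024"),
        ("difference",),
    ]


def answer(question: str) -> float:
    """Run each sub-question in order, keeping intermediate results in memory."""
    short_term_memory = []  # results for this single question only
    for step in decompose(question):
        if step[0] == "lookup":
            _, metric, quarter = step
            short_term_memory.append(rag_lookup(metric, quarter))
        elif step[0] == "difference":
            earlier, later = short_term_memory[-2], short_term_memory[-1]
            short_term_memory.append(later - earlier)
    return short_term_memory[-1]


question = ("How much did the data center revenue increase "
            "between Q3 of 2023 and Q1 of 2024?")
print(answer(question))  # prints 50.0 with the placeholder figures
```

The third sub-question never touches the documents. It only combines the two earlier results, which is why the intermediate answers need to be held in memory.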

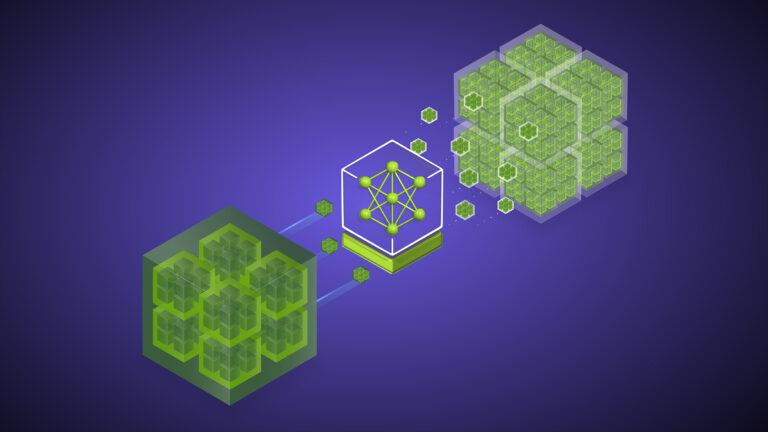

Swarm of agents

Multi-agent environments like Generative Agents and ChatDev have been extremely popular with the community (Figure 3). Why? Frameworks like ChatDev enable you to build a team of agents that play roles such as engineers, designers, product managers, and a CEO to build basic software at low cost. Simple games like Flappy Bird can be re-created for 50 cents!

With a swarm of agents, you can populate a digital company, neighborhood, or even a whole town for applications like behavioral simulations for economic studies, enterprise marketing campaigns, UX elements of physical infrastructure, and more.

These applications are currently not possible to simulate without LLMs and are extremely expensive to run in the real world.

Recommendation and experience design agents

The internet works off recommendations. Conversational recommendation systems powered by agents can be used to craft personalized experiences.

For example, consider an AI agent on an e-commerce website that helps you compare products and provides recommendations based on your general requests and selections. A full concierge-like experience can also be built, with multiple agents assisting an end user to navigate a digital store. Experiences like selecting which movie to watch or which hotel room to book can be crafted as conversations—and not just a series of decision-tree-style conversations!

Customized AI author agents

Another powerful tool is having a personal AI author that can help you with tasks such as co-authoring emails or preparing you for time-sensitive meetings and presentations. The problem with regular authoring tools is that different types of material must be tailored according to various audiences. For instance, an investor pitch must be worded differently than a team presentation.

Agents can harness your previous work. You can then have the agent mold a generated pitch to your personal style and customize it for your specific use case and needs. This process is often too nuanced for general LLM fine-tuning.

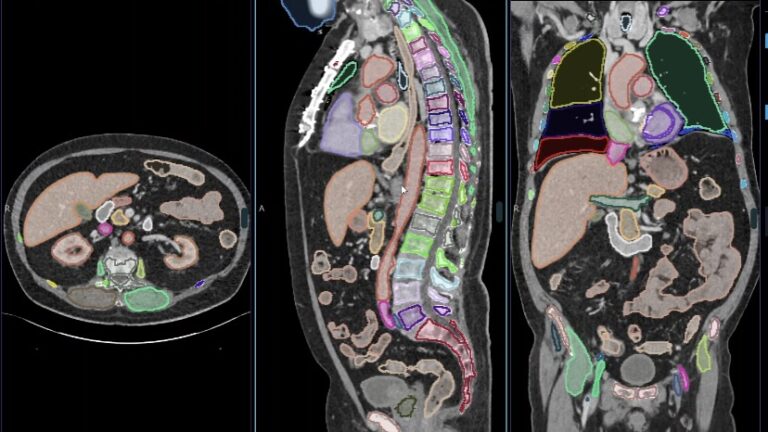

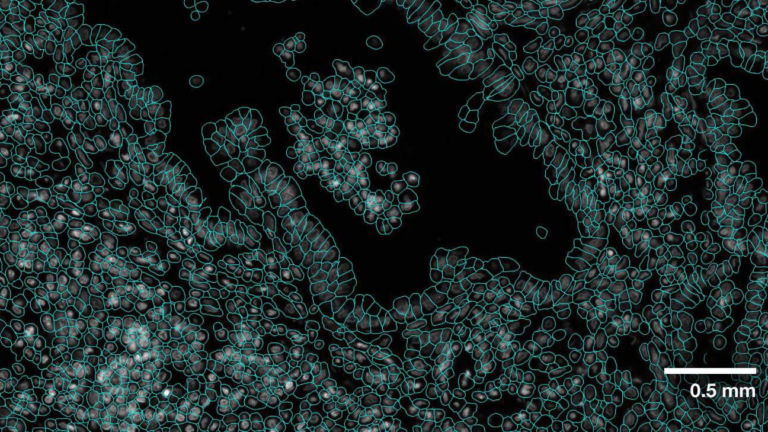

Multi-modal agents

With only text as an input, you cannot really “talk to your data.” All the mentioned use cases can be augmented by building multi-modal agents that can digest a variety of inputs, such as images and audio files.

These are just a few examples of directions that can be pursued to solve enterprise challenges. Agents for data curation, social graphs, and domain expertise are all active areas being pursued by the development community for enterprise applications.

What’s next?

LLM-powered agents differ from typical chatbot applications in that they have complex reasoning skills. Made up of an agent core, memory module, set of tools, and planning module, agents can generate highly personalized answers and content in a variety of enterprise settings—from data curation to advanced e-commerce recommendation systems.

For an overview of the technical ecosystem around agents, such as implementation frameworks, must-read papers, posts, and related topics, see Building Your First Agent Application. That post also walks through a no-framework implementation of a Q&A agent to help you better talk to your data.