As large language models (LLMs) become more powerful and techniques for reducing their computational requirements mature, two compelling questions emerge. First, what is the most advanced LLM that can be run and deployed at the edge? And second, how can real-world applications leverage these advancements?

Running a state-of-the-art open-source LLM like Llama 2 70B, even at reduced FP16 precision, requires more than 140 GB of GPU VRAM (70 billion parameters × 2 bytes = 140 GB in FP16, plus more for the KV cache). For most developers and smaller companies, this amount of VRAM is not easily accessible. Additionally, whether due to cost, bandwidth, latency, or data privacy issues, application-specific requirements may preclude the option of hosting an LLM using cloud computing resources.
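The arithmetic above can be reproduced in a few lines. The following is a minimal sketch rather than a sizing tool: the layer count, KV-head count, and head dimension are assumptions taken from the published Llama 2 70B configuration, the 4,096-token context is an assumed example, and the function names are hypothetical.

```python
# Rough memory estimate for serving an LLM, in decimal gigabytes (1 GB = 1e9 bytes).


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed to hold the model weights alone."""
    return num_params * bytes_per_param / 1e9


def kv_cache_memory_gb(num_layers: int, num_kv_heads: int, head_dim: int,
                       context_tokens: int, bytes_per_value: float = 2.0) -> float:
    """Memory for the cached keys and values of one sequence.

    Each token stores one key vector and one value vector per layer and KV head.
    """
    values_per_token = 2 * num_layers * num_kv_heads * head_dim
    return values_per_token * context_tokens * bytes_per_value / 1e9


# Assumed Llama 2 70B shape: 80 layers, 8 KV heads (grouped-query attention),
# head dimension 128. These values are not stated in this post.
weights_gb = weight_memory_gb(70e9, 2)  # FP16 weights, 2 bytes per parameter
kv_gb = kv_cache_memory_gb(80, 8, 128, context_tokens=4096)

print(f"weights:  {weights_gb:.1f} GB")
print(f"KV cache: {kv_gb:.2f} GB for one 4,096-token sequence")
print(f"total:    {weights_gb + kv_gb:.1f} GB")
```

Under these assumptions the weights alone account for 140 GB, and each concurrent 4,096-token sequence adds roughly 1.3 GB of KV cache on top.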

NVIDIA IGX Orin Developer Kit and NVIDIA Holoscan SDK address these challenges, bringing the power of LLMs to the edge. The NVIDIA IGX Orin Developer Kit coupled with a discrete NVIDIA RTX A6000 GPU delivers an industrial-grade edge AI platform tailored to the demands of industrial and medical environments. The platform includes NVIDIA Holoscan, an SDK that harmonizes data movement, accelerated computing, real-time visualization, and AI inferencing.

This platform enables developers to add open-source LLMs into edge AI streaming workflows and products, offering new possibilities in real-time AI-enabled sensor processing while ensuring that sensitive data remains within the secure boundaries of the IGX hardware.

Open-source LLMs for real-time streaming

The recent rapid development of open-source LLMs has changed what is considered possible in a real-time streaming application. Until recently, the prevailing view was that any application requiring human-like abilities depended on closed-source LLMs powered by enterprise-grade GPUs at data center scale. However, thanks to the recent surge in the performance of new open-source LLMs, models such as Falcon, MPT, and Llama 2 can now provide a viable alternative to closed-source black-box LLMs.

There are many possible applications that can make use of these open-source models on the edge, and many of them involve the distillation of streaming sensor data into natural language summaries. Such possibilities include real-time monitoring of surgical videos to keep families informed of progress, summarizing recent radar contacts for air traffic controllers, and even converting the play-by-play commentary of a soccer match into another language.

Access to powerful, open-source LLMs has also inspired a community devoted to refining the accuracy of these models, as well as reducing the computation required to run them. This vibrant community is active on the Hugging Face Open LLM Leaderboard, which is updated often with the latest top-performing models.

AI capabilities at the edge

The NVIDIA IGX Orin platform is uniquely positioned to leverage the surge in available open-source LLMs and supporting software. The NVIDIA RTX A6000 GPU provides an ample 48 GB of VRAM, enabling it to run some of the largest open-source models. For example, a version of Llama 2 70B whose model weights have been quantized to 4 bits of precision, rather than the usual 16 or 32 bits, can run entirely on the GPU at 14 tokens per second.

Diverse problems and use cases can be addressed by the robust Llama 2 model, bolstered by the security measures of the NVIDIA IGX Orin platform, and seamlessly incorporated into low-latency Holoscan SDK pipelines. This convergence not only marks a significant advancement in AI capabilities at the edge, but also unlocks the potential for transformative solutions across various domains.

One notable application could capitalize on the newly introduced Clinical Camel, a fine-tuned Llama 2 70B variant specialized in medical knowledge. A localized medical chatbot based on this model would ensure that sensitive patient data remains confined within the secure boundaries of the IGX hardware. The IGX platform really shines in applications where privacy, bandwidth concerns, or real-time feedback are critical.

Imagine inputting a patient’s medical records and querying the bot to discover similar cases, gaining fresh insights into hard-to-diagnose patients, or even equipping medical professionals with a short list of potential medications that circumvents interactions with existing prescriptions. All of this could be automated through a Holoscan application that converts real-time audio from physician-patient interactions into text and seamlessly feeds it into the Clinical Camel model.
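The data flow described above, in which transcribed speech is fed continuously into a model, can be sketched in plain Python. This is an illustrative outline only: it does not use the Holoscan API, the class and function names are hypothetical, and `fake_llm` is a stand-in for a real call to a locally hosted model such as Clinical Camel.

```python
from collections import deque
from typing import Callable, Iterable, List


class TranscriptWindow:
    """Keeps the most recent transcript segments and formats them into a prompt."""

    def __init__(self, max_segments: int = 3) -> None:
        # A deque with maxlen drops the oldest segment once the window is full.
        self._segments = deque(maxlen=max_segments)

    def add(self, text: str) -> None:
        text = text.strip()
        if text:  # ignore empty output, for example from silent audio
            self._segments.append(text)

    def build_prompt(self, question: str) -> str:
        transcript = "\n".join(self._segments)
        return f"Transcript:\n{transcript}\n\nQuestion: {question}\nAnswer:"


def answer_stream(segments: Iterable[str], question: str,
                  llm: Callable[[str], str], max_segments: int = 3) -> List[str]:
    """Adds each incoming segment to the window and queries the model after each one."""
    window = TranscriptWindow(max_segments)
    answers = []
    for segment in segments:
        window.add(segment)
        answers.append(llm(window.build_prompt(question)))
    return answers


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; reports the prompt length."""
    return f"(model reply to {len(prompt)} characters of prompt)"


replies = answer_stream(
    ["Patient reports headaches.", "  ", "Currently takes ibuprofen."],
    question="Which medications were mentioned?",
    llm=fake_llm,
)
print(replies[-1])
```

Bounding the window keeps the prompt within the model's context length as the conversation grows. A real application would replace the input list with a speech-to-text stage and `fake_llm` with the model of choice.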

The NVIDIA IGX platform also extends the capabilities of LLMs beyond text-only applications, thanks to its optimization for low-latency sensor input. While the medical chatbot presents a compelling use case, the real power of the IGX Orin Developer Kit lies in its capacity to integrate real-time data from various sensors.

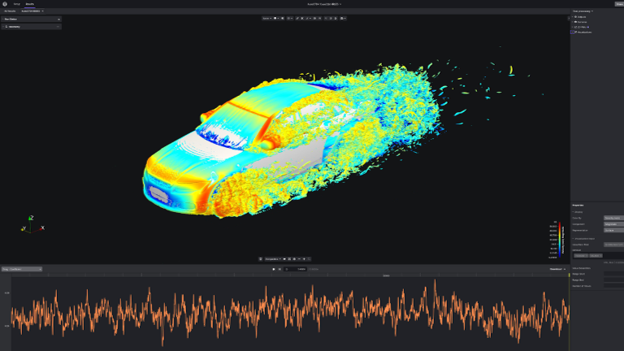

Designed for edge environments, the IGX Orin can process streaming information from cameras, lidar sensors, radio antennas, accelerometers, ultrasound probes, and more. This versatility empowers cutting-edge applications that seamlessly merge LLM prowess with real-time data streams.

Integrated into Holoscan operators, these LLMs have the potential to significantly enhance the capabilities and functionalities of AI-enabled sensor processing pipelines. Specific examples are detailed below.

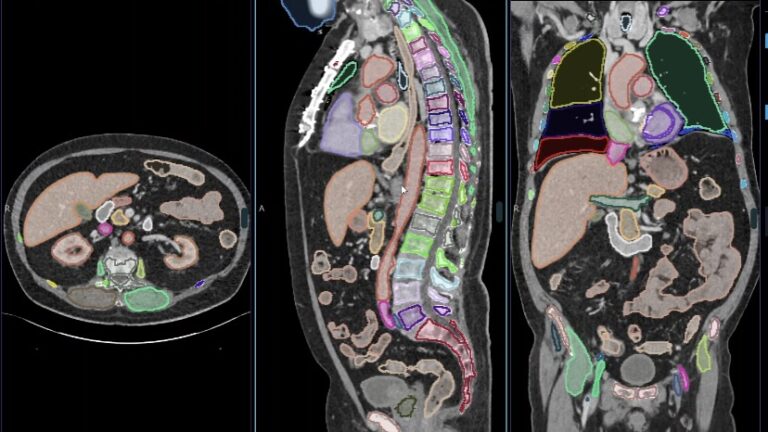

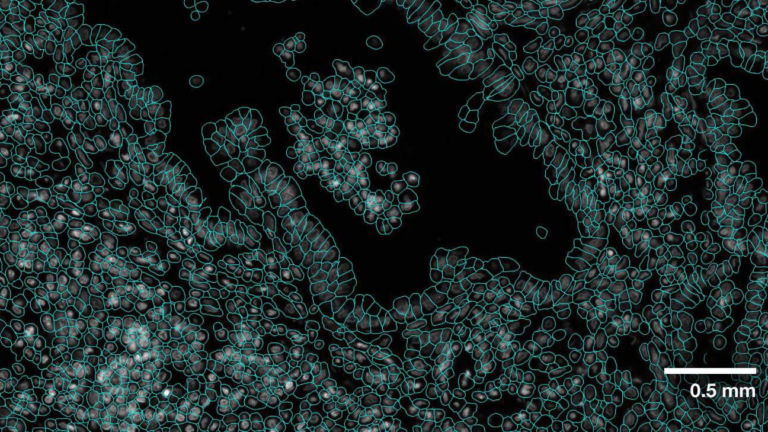

Multimodal medical assistants: Enhance LLMs with the capability to interpret not only text but also medical imaging, as demonstrated by projects like Med-Flamingo, which interprets MRIs, x-rays, and histology images.

Figure 2. LLMs can interpret text and extract relevant insights from medical images

Signals Intelligence (SIGINT): Derive natural language summaries from real-time electronic signals captured by communication systems and radars, providing insights that bridge technical data and human understanding.

Surgical case note generation: Channel endoscopy video, audio, system data, and patient records to multimodal LLMs to generate comprehensive surgical case notes that are automatically uploaded to a patient’s electronic medical records.

Smart agriculture: Tap into soil sensors monitoring pH, moisture, and nutrient levels, enabling LLMs to offer actionable insights for optimized planting, irrigation, and pest control strategies.

Software development copilots: Agents for education, troubleshooting, or productivity enhancement are another novel use case for LLMs. These models help developers write more efficient code and more thorough documentation.

The Holoscan team recently released HoloChat, an AI-driven chatbot serving as a developer’s copilot in Holoscan development. It generates human-like responses to questions about Holoscan and writing code. For details, visit nvidia-holoscan/holohub on GitHub.

The HoloChat local hosting approach is designed to provide developers with the same benefits as popular closed-source chatbots while eliminating the privacy and security concerns associated with sending data to third-party remote servers for processing.

Model quantization for optimal accuracy and memory usage

With the influx of open-source models being released under Apache 2.0, MIT, and other commercially viable licenses, anyone can download and use these model weights. However, just because this is possible does not mean that it is feasible for the vast majority of developers.

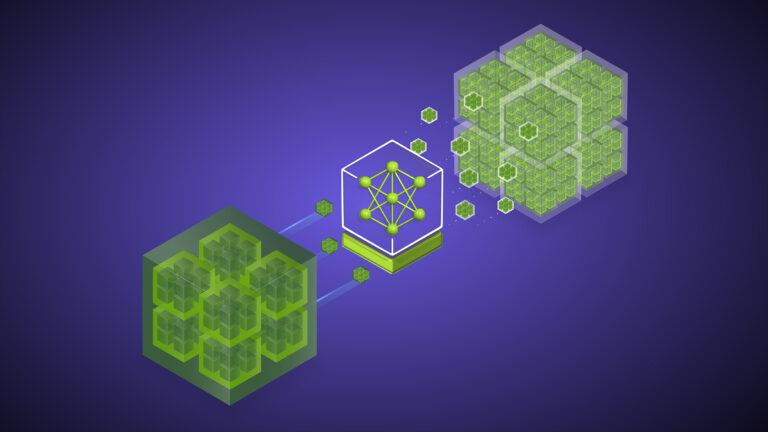

Model quantization provides one solution. Model quantization reduces the computational and memory costs of running inference by representing the weights and activations as low-precision data types (int8 and int4) instead of higher-precision data types (FP16 and FP32).
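To make the idea concrete, here is a minimal sketch of one simple scheme: symmetric "absmax" quantization with a single shared scale and round-to-nearest. It is an assumption chosen for illustration, not the method used by any particular library. Production tools typically quantize in small groups and use more sophisticated rounding. The function names and sample weights are hypothetical.

```python
def quantize_absmax(weights, bits):
    """Maps floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for int8, 7 for int4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the representable range.
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recovers approximate float values from the integer levels."""
    return [q * scale for q in quantized]


def max_error(weights, bits):
    """Largest absolute difference between original and round-tripped weights."""
    quantized, scale = quantize_absmax(weights, bits)
    restored = dequantize(quantized, scale)
    return max(abs(w - r) for w, r in zip(weights, restored))


weights = [0.12, -0.5, 0.33, 0.9, -0.77]  # made-up sample values
for bits in (8, 4):
    print(f"int{bits}: max round-trip error = {max_error(weights, bits):.4f}")
```

Each integer needs only `bits` bits of storage plus one shared scale, which is where the memory saving comes from. The round-trip error grows as the bit width shrinks, because fewer integer levels are available to represent the same range of values.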

However, removing this precision from the model does result in some degradation in the accuracy of the model. Yet research indicates that, given a memory budget, the best LLM performance is achieved by using the largest possible model that will fit into memory when the parameters are stored in 4-bit precision. For more details, see The Case for 4-bit Precision: k-Bit Inference Scaling Laws.

As such, the Llama 2 70B model achieves its optimal balance of accuracy and memory usage when it is implemented in 4-bit quantization, which reduces the memory required for the weights to only about 35 GB (70 billion parameters × 0.5 bytes). This memory requirement is within reach for smaller development teams or even individuals. It is easily met with the single NVIDIA RTX A6000 48 GB GPU that is optionally included with the IGX Orin.
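The "largest model that fits" rule can be expressed as a short selection routine. This is a sketch under stated assumptions: the candidate list uses the three published Llama 2 parameter counts, the 4 GB of headroom for the KV cache and activations is an arbitrary illustrative value, and the function names are hypothetical.

```python
# Candidate models and their parameter counts.
LLAMA_2_SIZES = {"Llama 2 7B": 7e9, "Llama 2 13B": 13e9, "Llama 2 70B": 70e9}


def weight_gb(num_params, bits):
    """Weight memory in decimal gigabytes at the given bits per parameter."""
    return num_params * bits / 8 / 1e9


def largest_model_that_fits(budget_gb, bits, headroom_gb=4.0, models=LLAMA_2_SIZES):
    """Returns the name of the largest model whose weights plus headroom fit, or None."""
    fitting = {
        name: params
        for name, params in models.items()
        if weight_gb(params, bits) + headroom_gb <= budget_gb
    }
    if not fitting:
        return None
    return max(fitting, key=fitting.get)


for bits in (16, 4):
    print(f"{bits}-bit on a 48 GB GPU: {largest_model_that_fits(48, bits)}")
```

With a 48 GB budget, 16-bit weights limit the choice to the 13B model, while 4-bit weights allow the 70B model to fit with room to spare.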

Open-source LLMs open new development opportunities

With the ability to run state-of-the-art LLMs on commodity hardware, the open-source community has exploded with new libraries that support running locally and offer tools that extend the capabilities of these models beyond just predicting a sentence’s next word.

Libraries such as llama.cpp, ExLlama, and AutoGPTQ enable you to quantize your own models and run fast inference on your local GPUs. Quantizing your own models is entirely optional, however, as the Hugging Face model repository is full of quantized models ready for use. This is thanks in large part to power users like /TheBloke, who upload newly quantized models daily.

While these models on their own offer exciting development opportunities, augmenting them with additional tools from a host of newly created libraries makes them all the more powerful. Examples include:

LangChain, a library with 58,000 GitHub stars that provides everything from vector database integration to enable document Q&A, to multi-step agent frameworks that enable LLMs to browse the web.

Haystack, which enables scalable semantic search.

Magentic, offering easy integration of LLMs into your Python code.

Oobabooga, a web UI for running quantized LLMs locally.

If you have an LLM use case, an open-source library is likely available that will provide most of what you need.

Get started deploying LLMs at the edge

Deploying cutting-edge LLMs at the edge with NVIDIA IGX Orin Developer Kit opens untapped development opportunities. To get started, check out the comprehensive tutorial detailing the creation of a simple chatbot application on IGX Orin, Deploying Llama 2 70B Model on the Edge with IGX Orin.

This tutorial illustrates the seamless integration of Llama 2 on IGX Orin, and guides you through developing a Python application using Gradio. This is the initial step toward harnessing any of the exceptional LLM libraries mentioned in this post. IGX Orin delivers resilience, unparalleled performance, and end-to-end security, empowering developers to forge innovative Holoscan-optimized applications around state-of-the-art LLMs operating at the edge.

On Friday, November 17, join the NVIDIA Deep Learning Institute for LLM Developer Day, a free virtual event delving into cutting-edge techniques in LLM application development. Register to access the event live or on demand.