AI technologies are having a massive impact across industries, including media and entertainment, automotive, customer service and more. For game developers, these advances are paving the way for creating more realistic and immersive in-game experiences.

From creating lifelike characters that convey emotions to transforming simple text into captivating imagery, foundation models are becoming essential in accelerating developer workflows while reducing overall costs. These powerful AI models have unlocked a realm of possibilities, empowering designers and game developers to build higher-quality gaming experiences.

What Are Foundation Models?

A foundation model is a neural network that's trained on massive amounts of data and then adapted to tackle a wide variety of tasks. Foundation models can handle a range of general tasks, such as text, image and audio generation. Over the last year, the popularity and use of foundation models have rapidly increased, with hundreds now available.

For example, GPT-4 is a large multimodal model developed by OpenAI that can generate human-like text based on context and past conversations. Another, DALL-E 3, can create realistic images and artwork from a description written in natural language.

Platforms like NVIDIA NeMo and NVIDIA Picasso, with its Edify foundation models, make it easy for companies and developers to inject AI into their existing workflows. For example, using the NVIDIA NeMo framework, organizations can quickly train, customize and deploy generative AI models at scale. And using NVIDIA Picasso, teams can fine-tune pretrained Edify models with their own enterprise data to build custom products and services for generating images, videos, 3D assets, texture materials and 360-degree HDRi.

How Are Foundation Models Built?

Foundation models can be used as a base for AI systems that perform multiple tasks. Organizations can use large amounts of unlabeled data to create their own foundation models.

The first step is collecting a dataset, which should be as large and diverse as possible. Too little data or poor-quality data can lead to inaccuracies, sometimes called hallucinations, or cause finer details to go missing in generated outputs.

Next, the dataset must be prepared. This includes cleaning the data, removing errors and formatting it so the model can understand it. Bias is a pervasive issue when preparing a dataset, so it's important to measure and reduce it, along with other inconsistencies and inaccuracies in the data.
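The cleaning step can be sketched in a few lines. This is a minimal example under stated assumptions: the corpus is a plain list of text strings, and the helper name `clean_corpus` and its `min_words` threshold are hypothetical. It covers only Unicode normalization, markup stripping, short-fragment filtering and exact deduplication; bias measurement and near-duplicate detection, which production pipelines also need, are out of scope here.

```python
import re
import unicodedata

def clean_corpus(docs, min_words=5):
    """Hypothetical helper: normalize, filter and exactly deduplicate text docs.

    Real pipelines typically add near-duplicate detection, language ID,
    quality scoring and bias audits on top of steps like these.
    """
    seen = set()
    cleaned = []
    for doc in docs:
        # Normalize Unicode so visually identical strings compare equal.
        text = unicodedata.normalize("NFKC", doc)
        # Strip leftover HTML tags, then collapse runs of whitespace.
        text = re.sub(r"<[^>]+>", " ", text)
        text = re.sub(r"\s+", " ", text).strip()
        # Drop fragments too short to carry useful training signal.
        if len(text.split()) < min_words:
            continue
        # Exact deduplication on a case-insensitive key.
        key = text.lower()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned

raw = [
    "<p>The  knight guards the northern gate.</p>",
    "The knight guards the northern gate.",
    "ok",
]
cleaned = clean_corpus(raw)
print(cleaned)  # ['The knight guards the northern gate.']
```

The markup-wrapped copy and the plain copy collapse to the same text, so only one survives, and the one-word fragment is dropped.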

Training a foundation model can be time-consuming, especially given the size of the model and the amount of data required. Hardware like NVIDIA A100 or H100 Tensor Core GPUs, along with high-performance data systems like the NVIDIA DGX SuperPOD, can accelerate training. For example, training the 175-billion-parameter GPT-3 model on just over 1,000 NVIDIA A100 GPUs has been estimated to take about 34 days.
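How model size, data size and GPU count drive training time can be made concrete with a common back-of-envelope estimate: time ≈ 8 × P × T / (n × X), for P parameters, T training tokens, n GPUs and sustained per-GPU throughput X. The sketch below is an approximation, not a scheduler. The 300-billion-token dataset and the 140 teraFLOP/s sustained per GPU are assumed inputs, chosen to show how an estimate of roughly 34 days for a GPT-3-scale run arises.

```python
SECONDS_PER_DAY = 86_400

def training_days(params, tokens, num_gpus, flops_per_gpu, flops_per_param_token=8):
    """Back-of-envelope end-to-end training time, in days.

    Total compute ~= flops_per_param_token * params * tokens. The default of 8
    counts 6 FLOPs per parameter per token for the forward and backward passes
    plus 2 for recomputing activations. flops_per_gpu should be the throughput
    actually sustained per GPU, not the datasheet peak.
    """
    total_flops = flops_per_param_token * params * tokens
    seconds = total_flops / (num_gpus * flops_per_gpu)
    return seconds / SECONDS_PER_DAY

# Assumed inputs for a GPT-3-scale run: 175B parameters, 300B training tokens,
# 1,024 A100 GPUs, each sustaining about 140 teraFLOP/s.
days = training_days(params=175e9, tokens=300e9, num_gpus=1024, flops_per_gpu=140e12)
print(f"{days:.1f} days")  # 33.9 days
```

In this model, doubling the GPU count halves the estimate. In practice, per-GPU throughput tends to drop somewhat at larger scale, so real speedups are usually a bit less than linear.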

Figure: The three requirements of a successful foundation model.

After training, the foundation model is evaluated on quality, diversity and speed. There are several methods for evaluating performance, for example:

Tools and frameworks that quantify how well the model predicts a sample of text

Metrics that compare generated outputs with one or more references and measure the similarities between them

Human evaluators who assess the quality of the generated output on various criteria
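The first two evaluation methods are often implemented as perplexity and reference-overlap scores such as BLEU. The sketch below computes simplified versions of both from scratch. Perplexity is derived from the natural-log probabilities a model assigned to each actual next token; how those are obtained depends on the model and is assumed here. The overlap score is BLEU's clipped n-gram precision on its own, without the brevity penalty or averaging across several n. Function names are illustrative.

```python
import math
from collections import Counter

def perplexity(token_log_probs):
    """exp of the mean negative log-likelihood per token; lower is better."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

def ngram_precision(candidate, references, n=1):
    """Clipped n-gram precision: share of candidate n-grams found in a reference,
    with each n-gram's count capped at its highest count in any single reference."""
    def ngrams(tokens):
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    cand = ngrams(candidate)
    if not cand:
        return 0.0
    max_ref = Counter()
    for ref in references:
        max_ref |= ngrams(ref)  # Counter union keeps the max count per n-gram
    overlap = sum(min(count, max_ref[gram]) for gram, count in cand.items())
    return overlap / sum(cand.values())

# A model that spreads probability evenly over 4 choices has perplexity 4.
print(perplexity([math.log(0.25)] * 4))  # approximately 4.0

# Clipping stops a degenerate output from scoring well: "the" is credited
# at most twice because the reference contains it only twice.
ref = "the cat is on the mat".split()
print(ngram_precision("the the the the the the the".split(), [ref]))  # 2/7, about 0.286
```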

Once the model passes the relevant tests and evaluations, it can then be deployed for production.

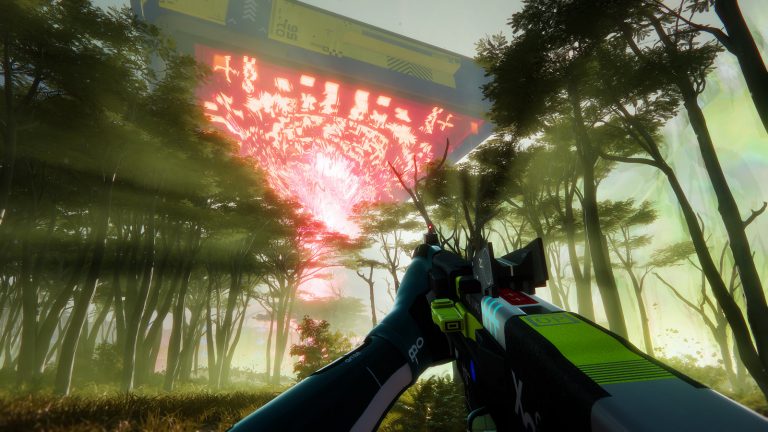

Exploring Foundation Models in Games

Pretrained foundation models can be leveraged by middleware, tools and game developers both during production and at run time. Training a base model requires resources, time and a certain level of expertise. Currently, many developers within the gaming industry are exploring off-the-shelf models but need custom solutions that fit their specific use cases. They need models that are trained on commercially safe data and optimized for real-time performance, without exorbitant deployment costs. The difficulty of meeting these requirements has slowed adoption of foundation models.

However, innovation within the generative AI space is swift, and once major hurdles are addressed, developers of all sizes, from startups to AAA studios, will use foundation models to gain new efficiencies in game development and accelerate content creation. Additionally, these models can help create completely new gameplay experiences.

The top industry use cases are centered around intelligent agents and AI-powered animation and asset creation. For example, many creators today are exploring models for creating intelligent non-player characters, or NPCs.

Custom LLMs fine-tuned with the lingo and lore of specific games can generate human-like text, understand context and respond to prompts in a coherent manner. They're designed to learn patterns and language structures and to understand game state changes, evolving and progressing alongside the player in the game.
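One common way to keep an NPC's replies in step with the player's progress is to assemble the game's lore and the current game state into the prompt on every turn. The sketch below assumes that pattern; it does not describe any specific product. The `GameState` fields, the `build_npc_prompt` helper and the sample names are all hypothetical. The resulting string would be passed to whatever fine-tuned LLM a studio uses.

```python
from dataclasses import dataclass, field

@dataclass
class GameState:
    # Hypothetical fields; a real game would expose whatever its quest system tracks.
    location: str
    completed_quests: list[str] = field(default_factory=list)
    player_reputation: int = 0

def build_npc_prompt(npc_name, lore, state, player_line):
    """Assemble a text prompt grounding the NPC in lore and the live game state."""
    quests = ", ".join(state.completed_quests) or "none"
    return (
        f"You are {npc_name}, a character in this world.\n"
        f"Lore: {lore}\n"
        f"Player location: {state.location}\n"
        f"Quests the player has completed: {quests}\n"
        f"Player reputation: {state.player_reputation}\n"
        "Stay in character and only reference events the player has experienced.\n"
        f"Player: {player_line}\n"
        f"{npc_name}:"
    )

state = GameState(location="Riverhold", completed_quests=["The Lost Ferry"], player_reputation=12)
prompt = build_npc_prompt(
    "Mara the Smith",
    "Riverhold is a trading town on the Ashen River.",
    state,
    "Any work for me?",
)
print(prompt)
```

Because the state is rebuilt into the prompt each turn, finishing a new quest changes what the NPC is told without retraining the model.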

As NPCs become increasingly dynamic, they will need real-time animation and audio that sync with their responses. Developers are using NVIDIA Riva to create expressive character voices using speech and translation AI. And designers are tapping NVIDIA Audio2Face for AI-powered facial animations.

Foundation models are also being used for asset and animation generation. Asset creation during the pre-production and production phases of game development can be time-consuming, tedious and expensive.

With state-of-the-art diffusion models, developers can iterate more quickly, freeing up time for the most important aspects of the content pipeline, such as developing higher-quality assets. The ability to fine-tune these models on a studio's own repository of data helps keep generated outputs consistent with the art styles and designs of its previous games.

Foundation models are readily available, and the gaming industry is only in the beginning phases of understanding their full capabilities. Various solutions have been built for real-time experiences, but the use cases are limited. Fortunately, developers can easily access models and microservices through cloud APIs today and explore how AI can affect their games and scale their solutions to more customers and devices than ever before.

The Future of Foundation Models in Gaming

Foundation models are poised to help developers realize the future of gaming. Diffusion models and large language models are becoming much more lightweight as developers look to run them natively on a range of hardware power profiles, including PCs, consoles and mobile devices.

The accuracy and quality of these models will only continue to improve as developers look to generate high-quality assets that need little to no touching up before being dropped into an AAA gaming experience.

Foundation models will also be used in areas that have been challenging for developers to overcome with traditional technology. For example, autonomous agents could explore and analyze game worlds during development to detect issues, accelerating quality assurance.

The rise of multimodal foundation models, which can ingest a mix of text, image, audio and other inputs simultaneously, will further enhance player interactions with intelligent NPCs and other game systems. Also, developers can use additional input types to improve creativity and enhance the quality of generated assets during production.

Multimodal models also show great promise in improving the animation of real-time characters, one of the most time-intensive and expensive processes of game development. They may be able to help make characters' locomotion closely match that of real-life actors, infuse style and feel from a range of inputs, and ease the rigging process.

Learn More About Foundation Models in Gaming

From enhancing dialogue and generating 3D content to creating interactive gameplay, foundation models have opened up new opportunities for developers to forge the future of gaming experiences.

Learn more about foundation models and other technologies powering game development workflows.