This is part of a series on Differentiable Slang. For more information about differential programming and automatic gradient computation in the Slang language, see Differentiable Slang: A Shading Language for Renderers That Learn.

Differentiable Slang easily integrates with existing codebases—from Python, PyTorch, and CUDA to HLSL—to aid multiple computer graphics tasks and enable novel data-driven and neural research. In this post, we introduce several code examples using differentiable Slang to demonstrate the potential use across different rendering applications and the ease of integration.

Example application: Appearance-based BRDF optimization

One of the basic building blocks in computer graphics is the BRDF texture map, which stores multiple material properties and describes how light interacts with the rendered surfaces. Artists author and preview textures, but rendering algorithms then transform them automatically, for instance by filtering, blending BRDF properties, or creating mipmaps.

Rendering is highly nonlinear, so linear operations on texture maps do not produce a correspondingly linear change in appearance. Various models have been proposed to preserve appearance in applications like mipmap chain creation. Those models are approximate and often designed for one specific BRDF, so new ones must be created whenever the rendering model changes.
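The nonlinearity is easy to see numerically. The sketch below is only an illustration: the GGX-style lobe, the four roughness values, and the `n_dot_h` sample are assumptions chosen for the demonstration, not the shading model or data used in the example repo. It compares shading an averaged roughness texel with averaging the shaded results of the original texels.

```python
import math

def ggx_ndf(n_dot_h, roughness):
    """GGX normal distribution term, with alpha = roughness^2."""
    alpha2 = roughness ** 4
    denom = n_dot_h * n_dot_h * (alpha2 - 1.0) + 1.0
    return alpha2 / (math.pi * denom * denom)

# Four hypothetical high-resolution roughness texels covered by one mip texel.
texels = [0.1, 0.2, 0.6, 0.9]
n_dot_h = 0.98  # hypothetical cosine between normal and half vector

# Reference appearance: shade every texel, then average the shaded results.
mean_of_shaded = sum(ggx_ndf(n_dot_h, r) for r in texels) / len(texels)

# Naive mipmap: average the roughness first, then shade once.
mean_roughness = sum(texels) / len(texels)
shaded_of_mean = ggx_ndf(n_dot_h, mean_roughness)

print(f"average of shaded texels:  {mean_of_shaded:.4f}")
print(f"shading of averaged texel: {shaded_of_mean:.4f}")
```

The two printed values differ substantially, which is the gap an appearance-preserving mipmap tries to close.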

Instead of refining those models, we propose to use differentiable rendering and a data-driven approach to build appearance-preserving mipmaps. For more information and code examples, see the /shader-slang GitHub repo.

In Figure 1, the left column shows the surface rendered with a naively downsampled material. The middle column shows the same surface rendered with a low-resolution material obtained from an optimization algorithm implemented using Slang’s automatic differentiation feature. The right column shows the rendering result using the reference material without downsampling. The rendering with the optimized material preserves more detail than the one with the naively downsampled material and matches the reference much more closely.

To demonstrate Slang’s flexibility and compatibility with multiple existing frameworks, we write the optimization loop in PyTorch in a Jupyter notebook, enabling easy visualization, interactive debugging, and Markdown documentation of the code. The shading code is written in Slang, which will look familiar to graphics programmers. Easy interoperability among Slang, Python, PyTorch, and Jupyter lets you choose the best combination of languages for data-driven graphics development.
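As a rough picture of what such an optimization loop does, here is a dependency-free sketch. It is not the notebook from the repo: the `shade` function is a simplified GGX-style lobe standing in for the Slang shading code, the gradient comes from a central finite difference where Slang's automatic differentiation would normally supply it, and the step-halving update replaces a PyTorch optimizer. All values and names are hypothetical.

```python
import math

def shade(n_dot_h, roughness):
    """Simplified GGX-style specular term; a stand-in for the Slang shading code."""
    alpha2 = roughness ** 4
    denom = n_dot_h * n_dot_h * (alpha2 - 1.0) + 1.0
    return alpha2 / (math.pi * denom * denom)

def loss(roughness, views, targets):
    """Mean squared error between one low-res texel and the reference appearance."""
    return sum((shade(v, roughness) - t) ** 2 for v, t in zip(views, targets)) / len(views)

def optimize_texel(texels, views, steps=200, lr=0.05, eps=1e-4):
    # Reference appearance: shade each high-res texel, then average per view.
    targets = [sum(shade(v, r) for r in texels) / len(texels) for v in views]
    rough = sum(texels) / len(texels)  # start from the naive downsample
    best = loss(rough, views, targets)
    for _ in range(steps):
        # Central finite difference; autodiff would supply this gradient instead.
        grad = (loss(rough + eps, views, targets) - loss(rough - eps, views, targets)) / (2 * eps)
        candidate = min(max(rough - lr * grad, 0.02), 1.0)
        candidate_loss = loss(candidate, views, targets)
        if candidate_loss < best:
            rough, best = candidate, candidate_loss  # accept the step
        else:
            lr *= 0.5  # the step overshot: shrink it and try again
    return rough, best, targets

texels = [0.1, 0.2, 0.6, 0.9]      # hypothetical high-res roughness values
views = [0.90, 0.94, 0.98, 0.995]  # hypothetical n.h samples
naive = sum(texels) / len(texels)
optimized, optimized_loss, targets = optimize_texel(texels, views)
naive_loss = loss(naive, views, targets)
print(f"naive roughness     {naive:.3f} -> loss {naive_loss:.5f}")
print(f"optimized roughness {optimized:.3f} -> loss {optimized_loss:.5f}")
```

The loop only ever accepts steps that reduce the loss, so the optimized texel is never worse than the naive average under this metric.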

Example application: Texture compression

Texture compression is an optimization task that significantly reduces texture file size and memory usage while trying to preserve image quality. There are many approaches to texture compression and many different compressors available, the most popular being hardware block compression (BC). We demonstrate how gradient descent can automatically find a close-to-optimal solution for BC7 Mode 6 texture compression using Slang’s automatic differentiation capabilities.

By using gradient descent, we don’t need to write the compression code explicitly. Slang automatically generates gradients of BC7 block color interpolation through backward differentiation of the Mode 6 decoder:

    float4 decodeTexel() {
        return weight * maxEndPoint + (1 - weight) * minEndPoint;
    }

To facilitate compression, we provide an effective initial guess: endpoints are initialized to the corners of the color-space box enveloping a block, and interpolation weights are set to 0.5. We model the BC7 quantization and iteratively adjust the endpoints and weights for each 4×4 block to minimize the difference between the original texture and its compressed version.
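The procedure above can be sketched on a single block. This is a simplified illustration under several assumptions, not the Falcor implementation: the block is single-channel rather than RGBA, the gradients are written by hand where Slang would derive them from the decoder, and quantization is applied once at the end using uniform levels instead of being modeled inside the loop with the exact BC7 index table and p-bits. The function names and the test block are hypothetical.

```python
def decode(weight, lo, hi):
    """Same interpolation as decodeTexel(): blend between the two endpoints."""
    return weight * hi + (1.0 - weight) * lo

def block_error(texels, weights, lo, hi):
    """Sum of squared differences between decoded and original texels."""
    return sum((decode(w, lo, hi) - t) ** 2 for w, t in zip(weights, texels))

def compress_block(texels, steps=1000, lr=0.02):
    # Initial guess: endpoints at the block's min/max, all weights at 0.5.
    lo, hi = min(texels), max(texels)
    weights = [0.5] * len(texels)
    for _ in range(steps):
        grad_lo = grad_hi = 0.0
        new_weights = []
        for w, t in zip(weights, texels):
            err = decode(w, lo, hi) - t
            # Hand-written gradients of the squared error with respect to
            # the endpoints and this texel's weight.
            grad_lo += 2.0 * err * (1.0 - w)
            grad_hi += 2.0 * err * w
            grad_w = 2.0 * err * (hi - lo)
            new_weights.append(min(max(w - lr * grad_w, 0.0), 1.0))
        weights = new_weights
        lo -= lr * grad_lo
        hi -= lr * grad_hi
    return weights, lo, hi

def quantize(weights, lo, hi, index_levels=16, endpoint_levels=256):
    # Simplification: uniform levels instead of the exact BC7 tables and p-bits.
    def snap(x, levels):
        return round(min(max(x, 0.0), 1.0) * (levels - 1)) / (levels - 1)
    return [snap(w, index_levels) for w in weights], snap(lo, endpoint_levels), snap(hi, endpoint_levels)

# A hypothetical single-channel 4x4 block (16 texels in [0.1, 0.9]).
texels = [0.1 + 0.8 * (((i * 7) % 16) / 15) ** 1.5 for i in range(16)]
initial_error = block_error(texels, [0.5] * 16, min(texels), max(texels))
weights, lo, hi = compress_block(texels)
optimized_error = block_error(texels, weights, lo, hi)
quantized_error = block_error(texels, *quantize(weights, lo, hi))
print(f"initial   error: {initial_error:.6f}")
print(f"optimized error: {optimized_error:.6f}")
print(f"quantized error: {quantized_error:.6f}")
```

Because each block depends only on its own 16 texels, two endpoints, and 16 weights, the same loop maps naturally onto one GPU thread per block.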

This simple approach achieves high compression quality. For the best computational performance, we merge the forward (decoding) and backward (encoding) passes into a single compute shader. Every thread works independently on a BC7 block, improving efficiency by retaining all data in registers and avoiding atomic operations to accumulate gradients. On an NVIDIA RTX 4090, this method can compress 400 4k textures every second, achieving a compression speed of 6.5 GTexel/s.

This example is written in Slang and the Python interface to the Falcor rendering infrastructure. For more information and code examples, see the /NVIDIAGameWorks/Falcor GitHub repo.

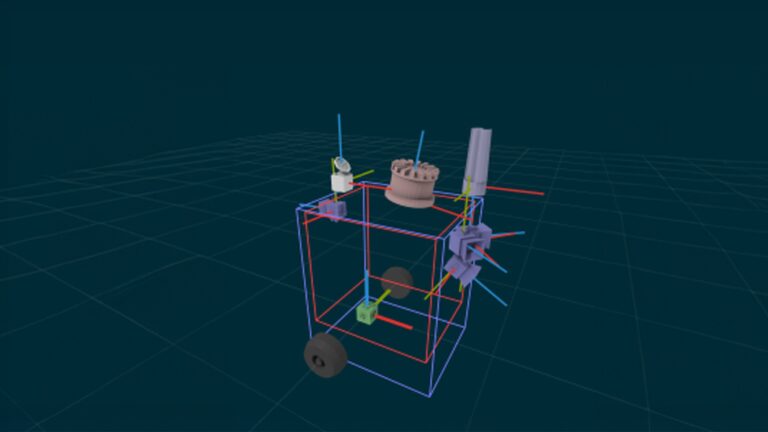

Example application: NVDIFFREC

Nvdiffrec is a large inverse rendering library for joint shape, material, and lighting optimization. Nvdiffrec reconstructs various scene properties from a series of 2D observations and can be used in various inverse rendering and appearance reconstruction applications.

Originally, Nvdiffrec’s performance-critical operations were accelerated using PyTorch extensions built with hand-differentiated CUDA kernels. The CUDA kernels perform the following tasks:

Loss computation (log-sRGB mapping and warp-wide reduction)

Tangent space normal mapping

Vertex transforms (multiplication of a vertex array with a batch of 4×4 matrices)

Cube map pre-filtering (for the split-sum shading model)

Slang generates automatically differentiated CUDA kernels that achieve the same performance as the handwritten, manually differentiated CUDA code. This reduces the number of lines of code considerably while staying compatible and interoperable with other CUDA kernels. Slang makes the code easier to maintain, extend, and connect to existing rendering pipelines and shading models.
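To make "hand-differentiated" concrete, the sketch below writes out a forward and a backward pass for the simplest kernel in the list, a 4×4 matrix times vertex transform, and checks the backward pass against finite differences. It is plain Python rather than CUDA or Slang, and the function names, the matrix, the vertex, and the squared-length loss are all hypothetical. With automatic differentiation, only the forward function would need to be written and maintained.

```python
def transform(matrix, vertex):
    """Forward pass: multiply a 4x4 matrix with a homogeneous vertex."""
    return [sum(matrix[r][c] * vertex[c] for c in range(4)) for r in range(4)]

def transform_backward(matrix, vertex, grad_out):
    """Hand-derived backward pass: gradients w.r.t. the vertex and the matrix."""
    grad_vertex = [sum(matrix[r][c] * grad_out[r] for r in range(4)) for c in range(4)]
    grad_matrix = [[grad_out[r] * vertex[c] for c in range(4)] for r in range(4)]
    return grad_vertex, grad_matrix

def loss(matrix, vertex):
    # Hypothetical scalar loss: squared length of the transformed vertex.
    return sum(y * y for y in transform(matrix, vertex))

matrix = [[1.0, 0.2, 0.0, 0.5],
          [0.0, 0.9, -0.3, 1.0],
          [0.1, 0.0, 1.1, -2.0],
          [0.0, 0.0, 0.0, 1.0]]
vertex = [0.5, -1.0, 2.0, 1.0]

# dL/dy for L = sum(y^2) is 2y; feed it to the hand-written backward pass.
grad_out = [2.0 * y for y in transform(matrix, vertex)]
grad_vertex, grad_matrix = transform_backward(matrix, vertex, grad_out)

# Central finite-difference check of the vertex gradient.
eps = 1e-6
fd_grad_vertex = []
for i in range(4):
    plus = list(vertex)
    minus = list(vertex)
    plus[i] += eps
    minus[i] -= eps
    fd_grad_vertex.append((loss(matrix, plus) - loss(matrix, minus)) / (2 * eps))

print("hand-derived:     ", grad_vertex)
print("finite difference:", fd_grad_vertex)
```

Every kernel in a hand-differentiated codebase needs a matching backward function like `transform_backward`, kept in sync with the forward code by hand, which is the maintenance cost automatic differentiation removes.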

For more information about the Slang version of nvdiffrec, see the /NVlabs/nvdiffrec GitHub repo.

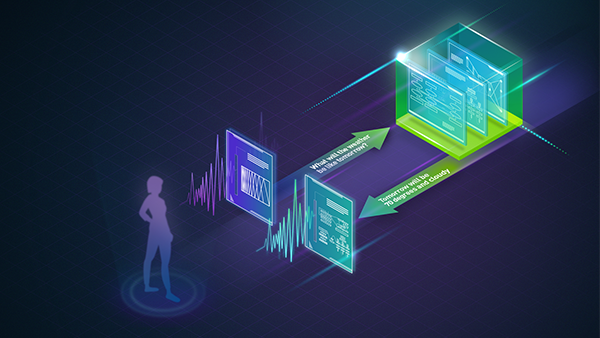

Example application: Differentiable path tracers

We converted a traditional, real-time path tracer into a differentiable path tracer, reusing over 5K lines of Slang code. The following are two different inverse path tracing examples in Slang:

Inverse-rendering optimization solving for material parameters via a differentiable path tracer

Differentiable path tracer with warped-area sampling for differential geometry
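The core idea behind the first example, differentiating through the bounce loop to recover material parameters, can be shown with a deliberately tiny toy. This is not a path tracer and not the Slang code: there is no geometry or sampling, the "path" simply collects emission and attenuates by a single albedo at every bounce, and derivatives come from a small forward-mode dual-number class rather than Slang's generated derivative code. The `Dual` class, `trace` function, and all values are hypothetical, and warped-area sampling is not covered here.

```python
class Dual:
    """Value plus derivative, for forward-mode automatic differentiation."""
    def __init__(self, val, der=0.0):
        self.val, self.der = val, der

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.der + other.der)
    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val * other.val, self.val * other.der + self.der * other.val)
    __rmul__ = __mul__

def trace(albedo, emission=1.0, max_bounces=4):
    """Toy path: every hit adds emission, then attenuates throughput by albedo."""
    radiance, throughput = 0.0, 1.0
    for _ in range(max_bounces + 1):
        radiance = radiance + throughput * emission
        throughput = throughput * albedo
    return radiance

reference = trace(0.7)  # "reference image" rendered with the hidden albedo
albedo = 0.2            # initial guess for the material parameter
for _ in range(200):
    out = trace(Dual(albedo, 1.0))  # seed d(albedo)/d(albedo) = 1
    grad = 2.0 * (out.val - reference) * out.der  # gradient of the squared error
    albedo -= 0.01 * grad
print(f"recovered albedo: {albedo:.4f}")
```

The same `trace` function serves both the ordinary render (called with a float) and the differentiated render (called with a `Dual`), which mirrors how an existing renderer can be reused once its code is made differentiable.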

Panels: initial set of material parameters, optimized parameters, and reference.

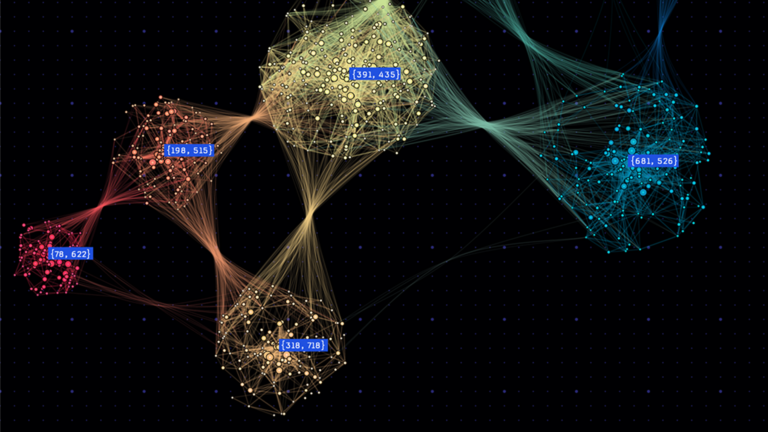

Figure 3. Inverse-rendering result that optimizes thousands of material parameters simultaneously

Conclusion

For more information, see the SLANG.D: Fast, Modular and Differentiable Shader Programming paper and begin exploring differentiable rendering with Slang.