Another GTC in the books! As usual, there were a lot of exciting announcements, and an overwhelming amount of information to go through. I watched many of the sessions, and certainly most of the Jetson-related ones I could find, but this is nowhere near an exhaustive list of what’s going on. This year the Jetson takeaways are mostly aimed at the professional developer crowd. That’s not surprising, as the Jetson Xavier has been around for 4 years now.

Here I share some thoughts so you can make mean comments about them. Not too mean though, I’m sensitive.

Jetson Orin Available, Module Specs and Pricing

As expected, NVIDIA announced that the Jetson AGX Orin Development Kits are now available at a price of $1999. An unexpected Covid lockdown in China right before GTC threw a little wrench into this, but this delay should clear up soon. As usual, the Jetson faithful were understanding about the delay as they sharpened their pitchforks and lit torches marching through the village.

When announced last year, the AGX Orin performance was listed at ~200 TOPS. Apparently someone turned up a dial and performance is now ~275 TOPS on the shipping units.
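To put that dial turn in perspective, here is a quick back-of-the-envelope sketch. It only uses the two approximate TOPS figures quoted above; the function and variable names are mine, and TOPS are peak ratings, so treat the result as a rough comparison rather than a measured speedup.

```python
def percent_uplift(old: float, new: float) -> float:
    """Relative increase from old to new, expressed as a percentage."""
    return (new - old) / old * 100.0


# Approximate figures quoted in this post (peak ratings, not benchmarks).
announced_tops = 200.0  # as announced last year
shipping_tops = 275.0   # as listed for the shipping units

uplift = percent_uplift(announced_tops, shipping_tops)
print(f"Shipping units are rated about {uplift:.1f}% higher than first announced")
```

That works out to a bit more than a third extra on paper, which is a generous bump for hardware that had not even shipped yet.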

Pricing and configurations for the Jetson Orin modules were also announced. The AGX Orin modules will be available in October 2022, with the Orin NX modules following later in Q4. Pricing starts at $399 USD for the NX modules.

Isaac Nova Orin

Autonomous mobile robots (AMRs) are one of the areas for which NVIDIA is purpose-building hardware solutions. One use of these robots is in warehouse systems. There will be a reference design built around the Isaac Nova Orin compute platform. It consists of two Jetson Orin modules, with combined performance of ~550 TOPS. Computationally, this is similar in concept to the NVIDIA Drive systems. To quote NVIDIA on the sensors in the rest of the system:

These modules process data in real time from the AMR’s central nervous system — essentially the sensor suite comprising up to six cameras, three lidars and eight ultrasonic sensors.

Here’s the basic idea:

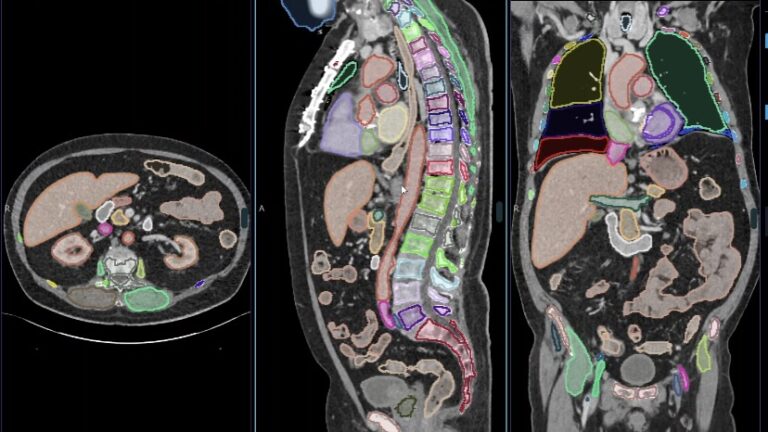

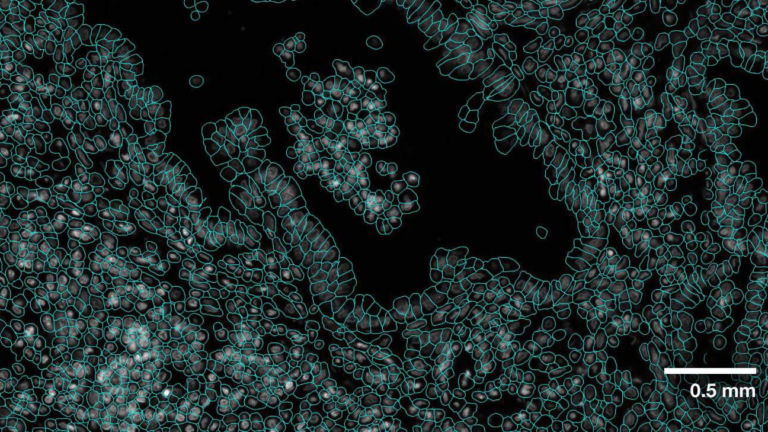

Leverage NVIDIA’s Work

As a Jetson developer, there are several messages to heed. First, NVIDIA has put an enormous amount of time, energy, effort and money into building their AI ecosystem. One of the byproducts of this work is the availability of pre-trained deep learning models. To be clear, these models are far beyond what non-specialists can train. NVIDIA trains them in its supercomputer centers on enormous amounts of data. Plus the models are free to use! You can modify the models to fit your use case using the TAO toolkit. The way NVIDIA views it: train in the cloud or on the desktop, then deploy on Jetson for inferencing.

Second, get serious about simulation. Isaac Sim leverages Omniverse. There are different ways to look at this idea. For example, you can do a virtual “build” of your robot in Isaac, using different pre-built blocks. NVIDIA is going all in with the Open Source Robotics Foundation (OSRF) to support ROS. Take advantage of it.

One of the fun talks at GTC was from Raffaello Bonghi about using ROS2 and Isaac ROS on Nanosaur, the NVIDIA Jetson dinosaur robot. Nanosaur uses a Jetson Nano.

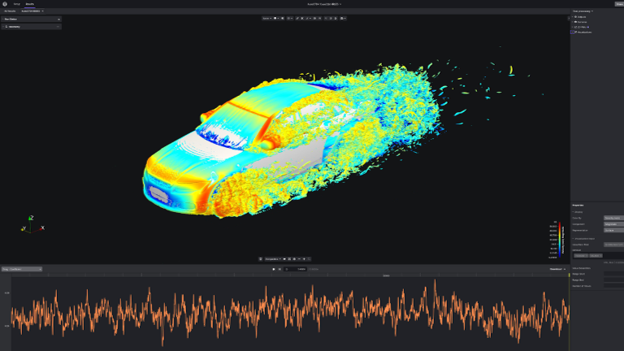

Of course, simulation isn’t limited to just robotics. Whatever your market/niche, look at putting simulation in the loop.

Here’s a nice chat (not from GTC) with NVIDIA’s Danny Shapiro about integrating these concepts for use in automobiles. It explains and ties together this end-to-end idea. I found it really interesting.

These are what I call “big picture” ideas. You can leverage your Jetson development using tools that are in the cloud or running on a PC desktop.

JetPack 5.0

But not all Jetson development is about that. With the introduction of JetPack 5.0, NVIDIA is porting the Nsight tools over to run natively on the Jetson. That means that you can debug and profile CUDA code directly on the device. JetPack 5.0 runs an Ubuntu 20.04 variant, with kernel 5.10. JetPack 5.0 supports only Jetson Xavier and Jetson Orin devices. Earlier Jetsons will not run JetPack 5.0.

An Orin has desktop-like performance. So it makes sense that a lot of people will feel comfortable developing on the device, rather than having to do the whole PC/Jetson development juggle.

Docker

With that said, here’s another thing to leverage: containers. The Jetsons use Docker as their container system. NVIDIA has been building containers for the Jetson over the last couple of years, so don’t think of them as a novelty. Use them. They make deployment and maintenance much easier. By using containers, you generally get rid of many of the headaches of installing complicated apps, such as dependency hell.
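If you have not launched a container on a Jetson before, here is a minimal sketch of what the command line usually looks like, written as a small Python helper that only assembles the command and does not run it. The helper name, the mounted directories and the image tag are my own placeholders for illustration; check NGC for the image and tag that actually match your JetPack release. The `--runtime nvidia` option is what gives the container access to the GPU on a Jetson.

```python
import shlex
from typing import List


def jetson_docker_command(image: str,
                          host_dir: str = "/home/user/project",
                          container_dir: str = "/workspace") -> List[str]:
    """Build (but do not execute) a docker run command for a Jetson.

    host_dir and container_dir are hypothetical paths used for illustration.
    """
    return [
        "docker", "run",
        "--rm",                    # remove the container when it exits
        "-it",                     # interactive terminal
        "--runtime", "nvidia",     # use the NVIDIA container runtime for GPU access
        "--network", "host",       # share the host network stack
        "--volume", f"{host_dir}:{container_dir}",  # mount your project
        image,
    ]


# Placeholder image tag (an assumption): pick one that matches your JetPack version.
example_image = "nvcr.io/nvidia/l4t-base:r34.1"
command = jetson_docker_command(example_image)
command_line = " ".join(shlex.quote(part) for part in command)
print(command_line)
```

Paste the printed line into a terminal on the Jetson, or hand the list to `subprocess.run` if you want to script it. Keeping the command in one place like this makes it easier to reuse the same setup across several devices.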

Long term, as the more powerful Jetson architectures migrate throughout the entire Jetson range, you will be able to run multiple models concurrently. The Xaviers and Orins can do that now. More CPU cores, more memory and more CUDA cores mean more. More means more in this context.

The Future

As usual, it’s also fun to speculate as to what the future may provide in the Jetson world. We know that the next Jetson Nano is coming early next year. It’s hard to tell what it might be right now, other than lots of fun.

However, NVIDIA announced their next GPU data center architecture, called Hopper. Hopper will probably be called Lovelace on the consumer side. The Ampere architecture of the Orin is built on an 8nm process, while Hopper appears to be 4nm! So, what does that mean? The Hopper chips have ~80 billion transistors. In comparison, Orins have 13 billion. You figure the next Jetsons will have fewer transistors than the Hoppers, but should still get a hefty boost over the current Orins. More transistors, closer together, means better performance. It will be interesting to see the roadmap for this. 2025 perhaps? Always something to look forward to. Here’s Hopper looking all purty.