As quantum computers scale, they will integrate with AI supercomputers to tackle some of the world’s most challenging problems. These accelerated quantum...

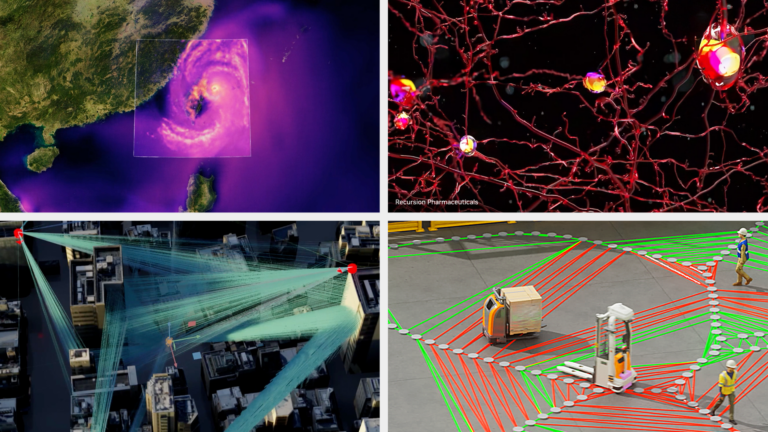

How Modern Supercomputers Powered by NVIDIA Are Pushing the Limits of Speed — and Science

Modern high-performance computing (HPC) is enabling more than just quick calculations: it’s powering AI systems that are unlocking scientific...

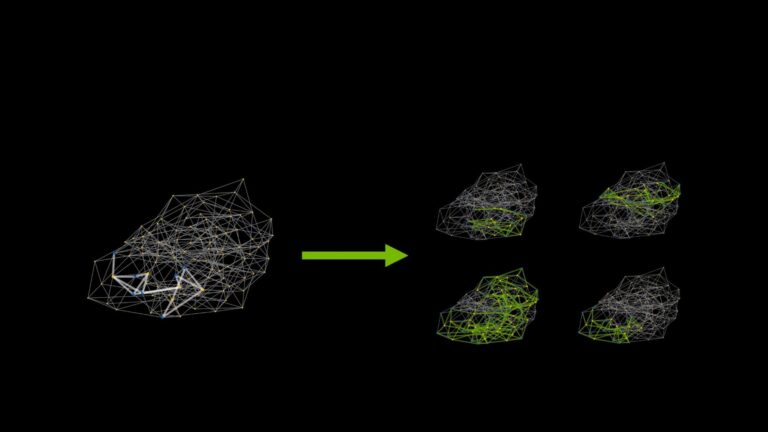

A Fine-tuning–Free Approach for Rapidly Recovering LLM Compression Errors with EoRA

Model compression techniques have been extensively explored to reduce the computational resource demands of serving large language models (LLMs) or other...

AI Helps Locate Dangerous Fishing Nets Lost at Sea

Conservationists have launched a new AI tool that can sift through petabytes of underwater imaging from anywhere in the world to identify signs of abandoned...

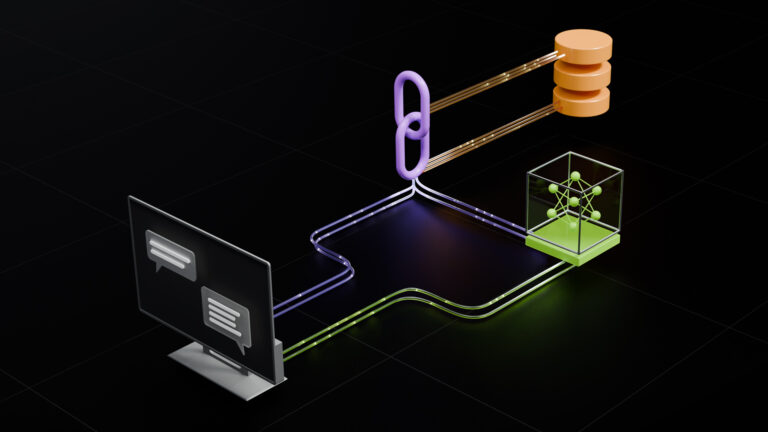

How NVIDIA GB200 NVL72 and NVIDIA Dynamo Boost Inference Performance for MoE Models

The latest wave of open source large language models (LLMs), like DeepSeek R1, Llama 4, and Qwen3, has embraced Mixture of Experts (MoE) architectures. Unlike...