Scientists and engineers who design and build unique scientific research facilities face similar challenges. These include managing massive data rates that...

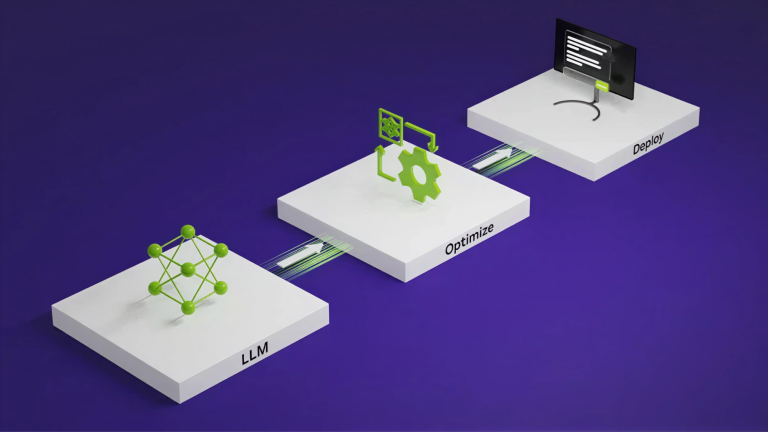

Automating Inference Optimizations with NVIDIA TensorRT LLM AutoDeploy

NVIDIA TensorRT LLM enables developers to build high-performance inference engines for large language models (LLMs), but deploying a new architecture...

3 Ways NVFP4 Accelerates AI Training and Inference

The latest AI models continue to grow in size and complexity, demanding increasing amounts of compute performance for training and inference, far beyond what...

How to Build License-Compliant Synthetic Data Pipelines for AI Model Distillation

Specialized AI models are built to perform specific tasks or solve particular problems. But if you’ve ever tried to fine-tune or distill a domain-specific...

How Painkiller RTX Uses Generative AI to Modernize Game Assets at Scale

Painkiller RTX sets a new standard for how small teams can balance massive visual ambition with limited resources by integrating generative AI. By upscaling...