NetworkX is a popular, easy-to-use Python library for graph analytics. However, its performance and scalability may be unsatisfactory for medium-to-large-sized... NetworkX is a popular, easy-to-use...

Hands-On Training at NVIDIA AI Summit in Washington, DC

Immerse yourself in NVIDIA technology with our full-day, hands-on technical workshops at our AI Summit in Washington D.C. on October 7, 2024. Immerse yourself in...

NVIDIA Deep Learning Institute Releases New Generative AI Teaching Kit

Generative AI, powered by advanced machine learning models and deep neural networks, is revolutionizing industries by generating novel content and driving... Generative AI, powered by...

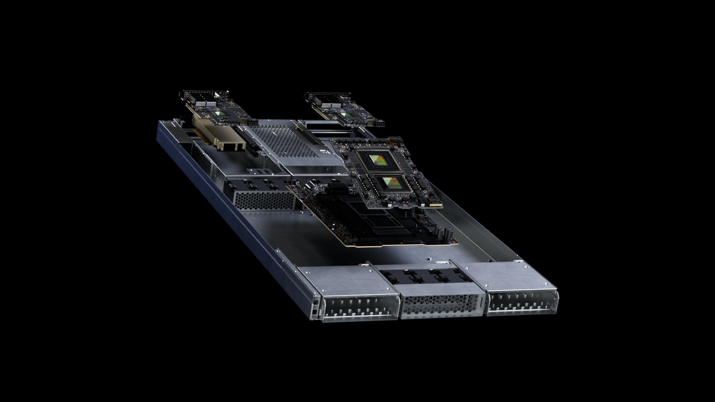

Real-Time Neural Receivers Drive AI-RAN Innovation

Today’s 5G New Radio (5G NR) wireless communication systems rely on highly optimized signal processing algorithms to reconstruct transmitted messages from... Today’s 5G New Radio...

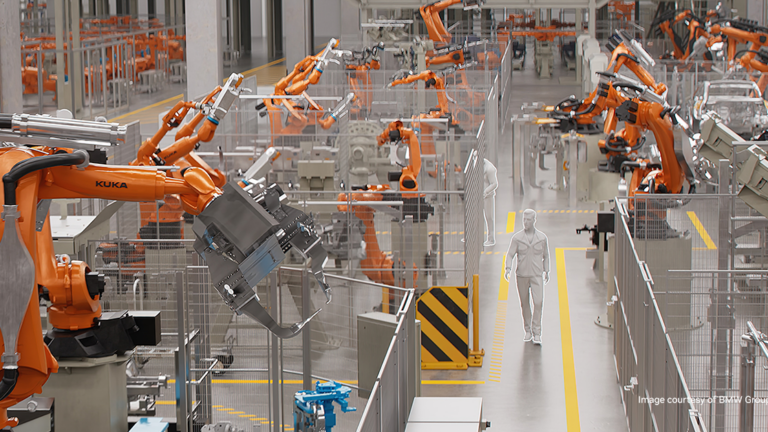

Accelerating Predictive Maintenance in Manufacturing with RAPIDS AI

The International Society of Automation (ISA) reports that 5% of plant production is lost annually due to downtime. Putting that into a different context,... The...