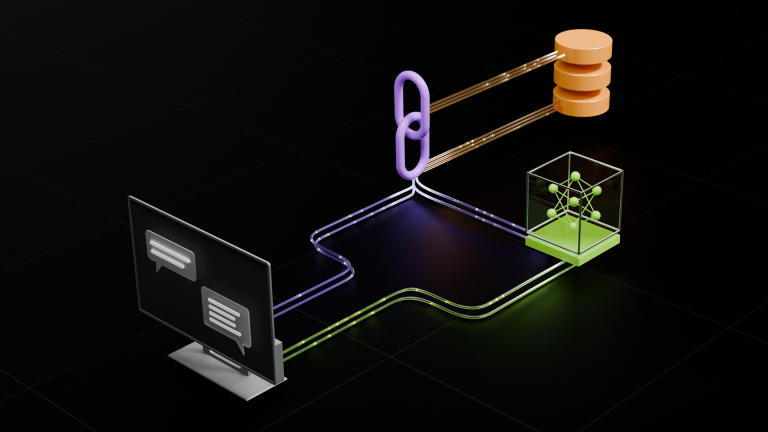

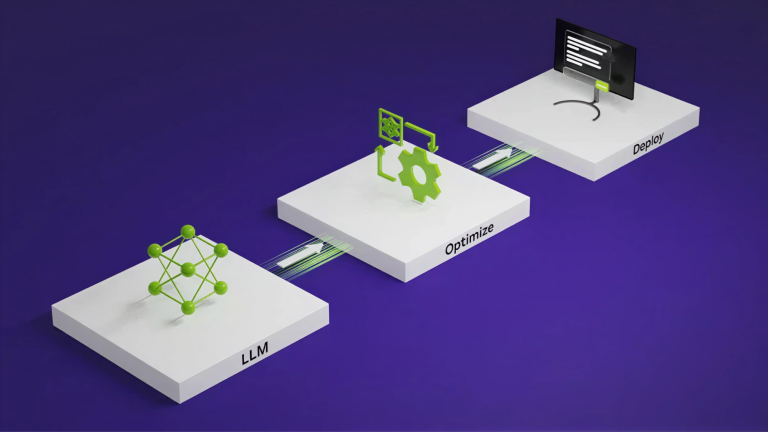

As global AI adoption accelerates, developers face a growing challenge: delivering large language model (LLM) performance that meets real-world latency and cost requirements. Running models with tens of billions of parameters in production, especially for conversational or voice-based AI agents, demands high throughput, low latency, and predictable service-level performance.