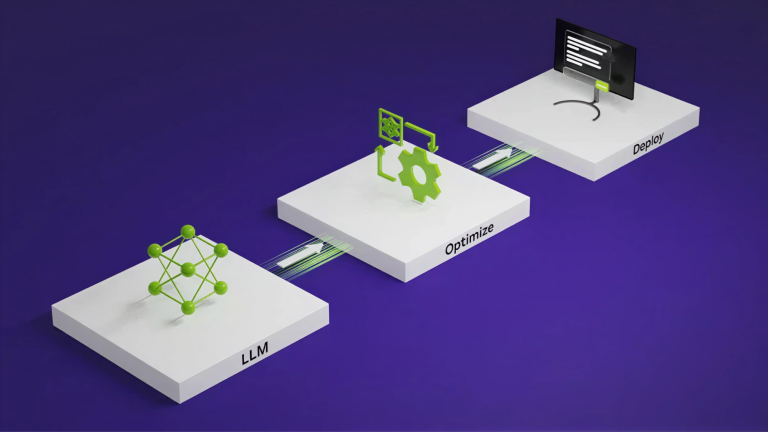


Generative AI and large language models (LLMs) are changing human-computer interaction as we know it. Many use cases would benefit from running LLMs locally on Windows PCs, including gaming, creativity, productivity, and developer experiences. This post discusses several NVIDIA end-to-end developer tools for creating and deploying both text-based and visual LLM applications on NVIDIA RTX AI-ready PCs.

Developer tools for building text-based generative AI projects

NVIDIA TensorRT-LLM is an open-source large language model (LLM) inference library. It provides an easy-to-use Python API to define LLMs and build TensorRT engines that contain state-of-the-art optimizations to perform inference efficiently on NVIDIA GPUs. NVIDIA TensorRT-LLM also contains components to create Python and C++ runtimes to run inference with the generated TensorRT engines.

To get started with TensorRT-LLM, visit the NVIDIA/TensorRT-LLM GitHub repo. For developer environment setup details, see TensorRT-LLM for Windows.

In desktop applications, model quantization is crucial for fitting models on PC GPUs, which often have limited VRAM. TensorRT-LLM supports model quantization through the TensorRT-LLM Quantization Toolkit, which lets models occupy a smaller memory footprint.
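To see why quantization matters on a PC GPU, the memory needed for weights alone can be estimated as the parameter count times the bits per weight. The minimal sketch below does that arithmetic. It is a back-of-the-envelope estimate that ignores activations, the KV cache, runtime overhead, and the per-group scales that real INT4 formats store, so actual memory use is higher.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Rough size of a model's weights in GiB: parameters x bits per weight / 8 bytes.

    Ignores activations, the KV cache, runtime overhead, and quantization scales.
    """
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    for name, params in [("7B", 7e9), ("13B", 13e9)]:
        fp16 = weight_memory_gib(params, 16)
        int4 = weight_memory_gib(params, 4)
        print(f"{name}: FP16 ~{fp16:.1f} GiB, INT4 ~{int4:.1f} GiB")
```

Under these assumptions, a 7B-parameter model needs roughly 13 GiB for FP16 weights but only about 3.3 GiB at INT4, and a 13B model drops from about 24 GiB to about 6.1 GiB.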

To start exploring post-training quantization with the TensorRT-LLM Quantization Toolkit, see the TensorRT-LLM Quantization Toolkit Installation Guide on GitHub.

Model compatibility and pre-optimized models

TensorRT-LLM lets you define models through its Python API and comes with built-in support for a diverse range of LLMs. Quantized model weights specifically optimized for NVIDIA RTX PCs are available on NVIDIA GPU Cloud (NGC), enabling rapid deployment of these models.

| Model Name | Model Location |
|---|---|
| Llama 2 7B – Int4-AWQ | Download |
| Llama 2 13B – Int4-AWQ | Download |
| Code Llama 13B – Int4-AWQ | Download |
| Mistral 7B – Int4-AWQ | Download |

Table 1. Pre-optimized text-based LLMs that run on Windows PCs for NVIDIA RTX with the NVIDIA TensorRT-LLM backend
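Every model in Table 1 uses Int4-AWQ: 4-bit integer weights produced with activation-aware weight quantization (AWQ). AWQ additionally uses activation statistics to protect the most important weight channels. The sketch below shows only the simpler building block underneath it: group-wise symmetric round-to-nearest INT4 quantization with one floating-point scale per group. It is an illustration under those assumptions, not AWQ itself and not TensorRT-LLM's implementation. The function names and the tiny group size are hypothetical; real INT4 formats commonly use groups of 128 weights.

```python
from typing import List, Tuple

INT4_MIN, INT4_MAX = -8, 7  # range of a signed 4-bit integer


def quantize_int4_groupwise(weights: List[float], group_size: int) -> Tuple[List[int], List[float]]:
    """Symmetric round-to-nearest INT4 quantization with one scale per group of weights."""
    codes: List[int] = []
    scales: List[float] = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        # Map the group's largest magnitude to code 7; an all-zero group gets scale 1.0.
        scale = max_abs / INT4_MAX if max_abs > 0 else 1.0
        scales.append(scale)
        for w in group:
            # round() is round-to-nearest (ties to even); the clamp keeps codes in range.
            codes.append(max(INT4_MIN, min(INT4_MAX, round(w / scale))))
    return codes, scales


def dequantize_int4_groupwise(codes: List[int], scales: List[float], group_size: int) -> List[float]:
    """Reconstruct approximate weights: each code times the scale of its group."""
    return [q * scales[i // group_size] for i, q in enumerate(codes)]


if __name__ == "__main__":
    weights = [0.1, -0.7, 0.35, 0.0, 2.0, -1.0, 0.5, 1.5]
    codes, scales = quantize_int4_groupwise(weights, group_size=4)
    restored = dequantize_int4_groupwise(codes, scales, group_size=4)
    print(codes)
    print([round(r, 3) for r in restored])
```

Each code fits in 4 bits, so storage shrinks to roughly a quarter of FP16 plus the per-group scales, while each reconstructed weight stays within half of its group's scale of the original.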

You can also build TensorRT engines for a wide variety of models supported by TensorRT-LLM. Visit TensorRT-LLM/examples on GitHub to see all supported models.

Developer resources and reference applications

Check out these reference projects for more information:

TRT-LLM RAG on Windows: This repository demonstrates a retrieval-augmented generation (RAG) pipeline that uses llama_index on Windows with Llama 2 13B – Int4, TensorRT-LLM, and FAISS.

OpenAI API Spec Web Server: A drop-in replacement REST API, compatible with the OpenAI API spec, that uses TensorRT-LLM as the inference backend.
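The retrieval step at the heart of a RAG pipeline like the one above can be sketched without any of its real components. In this minimal sketch, a toy bag-of-words vector stands in for a learned embedding model, and an exhaustive cosine-similarity search stands in for the FAISS index (FAISS also offers exact brute-force indexes such as `IndexFlatIP`, alongside faster approximate ones). The function names and the prompt template are hypothetical, and neither llama_index nor TensorRT-LLM is used here.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)  # a missing key in a Counter reads as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Brute-force search: score every document against the query, keep the top k."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, context: List[str]) -> str:
    """Prepend the retrieved passages to the user question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "TensorRT-LLM builds optimized TensorRT engines for LLM inference.",
        "FAISS is a library for efficient similarity search over dense vectors.",
        "Stable Diffusion generates images from text prompts.",
    ]
    question = "Which library builds TensorRT engines for LLM inference?"
    print(build_prompt(question, retrieve(question, docs, k=1)))
```

The resulting prompt is what would be sent to the LLM. Swapping in a real embedding model and vector index changes the quality of retrieval, not the shape of the flow.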

Minimum system requirements

Supported GPU architectures for TensorRT-LLM include NVIDIA Ampere and newer, with a minimum of 8GB RAM. Windows 11 or later is recommended for the best experience.
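A setup script might check these minimums before trying to load a model. The sketch below encodes them as a hypothetical helper, relying on the fact that Ampere GPUs report CUDA compute capability 8.x and newer architectures report higher values. It assumes the 8GB figure refers to GPU memory, and it leaves it to the caller to supply the values, for example from PyTorch's `torch.cuda.get_device_capability()` and `torch.cuda.get_device_properties(0).total_memory` (reported in bytes).

```python
from typing import Tuple

# NVIDIA Ampere GPUs report CUDA compute capability 8.x; newer architectures report higher values.
AMPERE_MIN_COMPUTE_CAPABILITY: Tuple[int, int] = (8, 0)
# Assumption: the 8GB minimum is treated here as GPU memory, in GB (10**9 bytes).
MIN_MEMORY_GB: float = 8.0


def meets_trtllm_minimum(compute_capability: Tuple[int, int], memory_gb: float) -> bool:
    """Hypothetical helper: True if the GPU is Ampere or newer and has at least MIN_MEMORY_GB."""
    # Tuples compare element by element, so (8, 6) >= (8, 0) and (7, 5) < (8, 0).
    return compute_capability >= AMPERE_MIN_COMPUTE_CAPABILITY and memory_gb >= MIN_MEMORY_GB


if __name__ == "__main__":
    print(meets_trtllm_minimum((8, 6), 12.0))  # Ampere GPU with 12 GB: True
    print(meets_trtllm_minimum((7, 5), 8.0))   # Turing GPU, older than Ampere: False
```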

Developer tools for building visual generative AI projects

NVIDIA TensorRT SDK is a high-performance deep learning inference optimizer. It provides layer fusion, precision calibration, kernel auto-tuning, and other capabilities that significantly boost the efficiency and speed of deep learning models. These optimizations make it especially valuable for real-time applications and resource-intensive models like Stable Diffusion, where it can substantially accelerate performance. Get started with NVIDIA TensorRT.
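Layer fusion is one of the optimizations listed above. A classic instance, shown here as a hand-written sketch rather than TensorRT's implementation, folds an inference-mode batch normalization into the preceding linear (or convolution) layer. Because batch norm applies a fixed per-channel scale and shift at inference time, the two layers collapse into one with the same output: with $k = \gamma / \sqrt{\sigma^2 + \epsilon}$, the fused weights are $W' = kW$ (row by row) and the fused bias is $b' = k(b - \mu) + \beta$. The helper names are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def linear(w: Matrix, b: Vector, x: Vector) -> Vector:
    """y = W x + b, with W stored as one row per output channel."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def batch_norm(y: Vector, gamma: Vector, beta: Vector, mean: Vector, var: Vector,
               eps: float = 1e-5) -> Vector:
    """Inference-mode batch norm using stored per-channel statistics."""
    return [g * (v - m) / math.sqrt(s + eps) + bt
            for v, g, bt, m, s in zip(y, gamma, beta, mean, var)]


def fold_bn_into_linear(w: Matrix, b: Vector, gamma: Vector, beta: Vector, mean: Vector,
                        var: Vector, eps: float = 1e-5) -> Tuple[Matrix, Vector]:
    """Return (w', b') so that linear(w', b', x) matches batch_norm(linear(w, b, x), ...)."""
    fused_w: Matrix = []
    fused_b: Vector = []
    for row, bi, g, bt, m, s in zip(w, b, gamma, beta, mean, var):
        k = g / math.sqrt(s + eps)  # per-output-channel scale
        fused_w.append([wi * k for wi in row])
        fused_b.append((bi - m) * k + bt)
    return fused_w, fused_b


if __name__ == "__main__":
    w, b = [[1.0, 2.0], [-0.5, 0.25]], [0.1, -0.2]
    gamma, beta = [1.5, 0.8], [0.0, 0.3]
    mean, var = [0.2, -0.1], [0.5, 2.0]
    x = [0.7, -1.2]
    fw, fb = fold_bn_into_linear(w, b, gamma, beta, mean, var)
    print(batch_norm(linear(w, b, x), gamma, beta, mean, var))  # two layers
    print(linear(fw, fb, x))                                     # one fused layer
```

The fused layer does one pass over memory instead of two, which is the kind of saving an inference optimizer looks for across a whole network.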

For broader guidance on how to integrate TensorRT into your applications, see Getting Started with NVIDIA AI for Your Applications. Learn how to profile your pipeline to pinpoint where optimization is critical and where minor changes can have a significant impact. Accelerate your AI pipeline by choosing a machine learning framework, and discover SDKs for video, graphic design, photography, and audio.
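Before optimizing, it helps to measure which stage of a pipeline dominates. The minimal sketch below times each stage of a hypothetical three-stage pipeline with `time.perf_counter()` and averages over several runs. It measures CPU wall-clock time only: GPU work is launched asynchronously, so timing GPU stages this way requires synchronizing first (for example with `torch.cuda.synchronize()` in PyTorch), and a dedicated profiler such as NVIDIA Nsight Systems gives a far more detailed picture.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

Stage = Tuple[str, Callable[[Any], Any]]


def profile_stages(stages: List[Stage], data: Any, repeats: int = 5) -> Dict[str, float]:
    """Run the stages in order, feeding each output to the next; return mean seconds per stage."""
    totals = {name: 0.0 for name, _ in stages}
    for _ in range(repeats):
        x = data
        for name, fn in stages:
            start = time.perf_counter()
            x = fn(x)
            totals[name] += time.perf_counter() - start
    return {name: t / repeats for name, t in totals.items()}


if __name__ == "__main__":
    # Hypothetical stages; "inference" is a placeholder for a real model call.
    stages: List[Stage] = [
        ("preprocess", lambda s: s.lower().split()),
        ("inference", lambda toks: [t[::-1] for t in toks]),
        ("postprocess", lambda toks: " ".join(toks)),
    ]
    timings = profile_stages(stages, "Hello RTX AI PC " * 1000)
    for name, secs in sorted(timings.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:12s} {secs * 1e3:.3f} ms")
```

Sorting by time puts the most expensive stage first, which is where optimization effort is most likely to pay off.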

Developer demos and reference applications

Check out these resources for more information:

How to Optimize Models like Stable Diffusion with TensorRT: This demo notebook shows how to accelerate a Stable Diffusion inference pipeline with TensorRT through Hugging Face.

Example TRT Pipeline for Stable Diffusion: An example of how TensorRT can be used to accelerate the text-to-image Stable Diffusion inference pipeline.

TensorRT Extension for Stable Diffusion Web UI: A working example of TensorRT accelerating the most popular Stable Diffusion web UI.

Summary

Use the resources in this post to easily add generative AI capabilities to applications powered by the existing installed base of 100 million NVIDIA RTX PCs.

Share what you develop with the NVIDIA developer community by entering the NVIDIA Generative AI on NVIDIA RTX Developer Contest for a chance to win a GeForce RTX 4090 GPU, a full in-person NVIDIA GTC conference pass, and more.