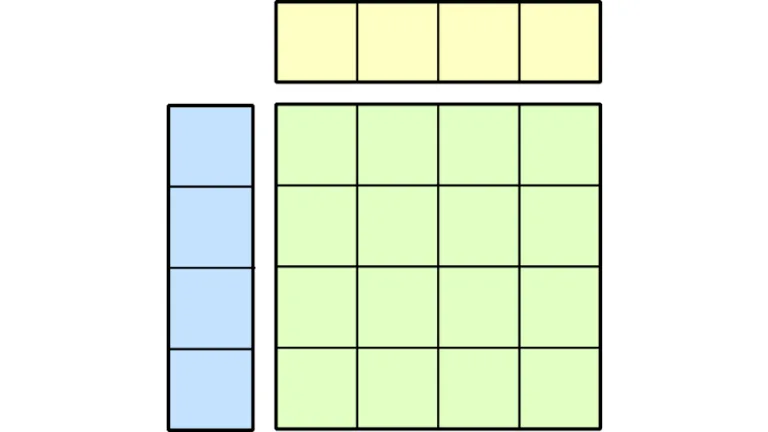

The transformer architecture is a foundational breakthrough behind the generative AI revolution, powering large language models (LLMs) such as GPT, DeepSeek, and Llama. Its key component is the self-attention mechanism, which lets a model process an entire input sequence at once rather than word by word. Because every token can attend directly to every other token, the model can capture long-range dependencies, and the computation across positions can run in parallel.
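
To make the idea concrete, here is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not how any particular LLM implements it: the helper names (`softmax`, `matmul`, `self_attention`), the toy input, and the identity projection matrices are all hypothetical, and a real model would use learned weights, multiple heads, and an array library.

```python
import math

def softmax(row):
    # Subtract the max for numerical stability before exponentiating.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # Plain list-of-lists matrix product: (n x m) @ (m x p) -> (n x p).
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x is (seq_len x d_model); w_q, w_k, w_v are (d_model x d_k).
    Returns the attended outputs and the attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    # Every query is scored against every key in one step,
    # so no token has to wait for the previous one.
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights

# Toy input: 3 tokens with 2-dimensional embeddings (made-up values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projections
out, weights = self_attention(x, identity, identity, identity)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, including distant ones, which is what allows long-range dependencies to be captured without stepping through the sequence one word at a time.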