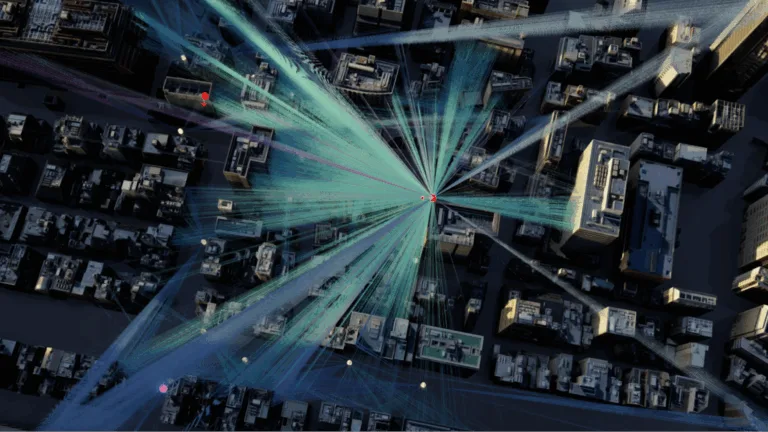

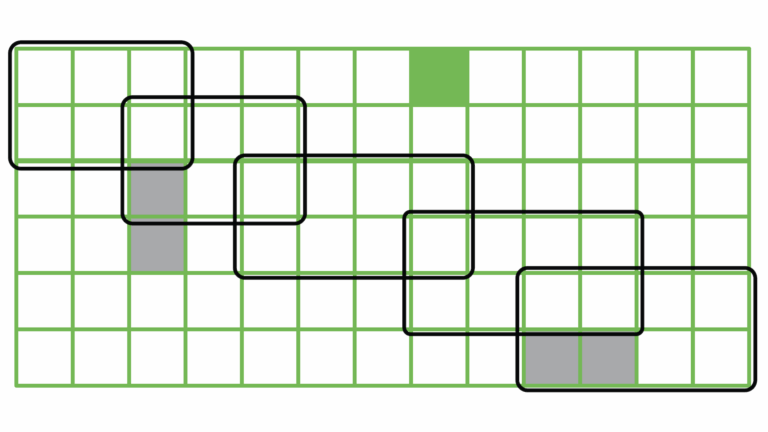

As AI models grow larger and more sophisticated, inference (the process by which a model generates responses) is becoming a major challenge. Large language models (LLMs) like GPT-OSS and DeepSeek-R1 rely heavily on attention data, stored in the Key-Value (KV) cache, to understand and contextualize input prompts, and managing that data efficiently is increasingly difficult.