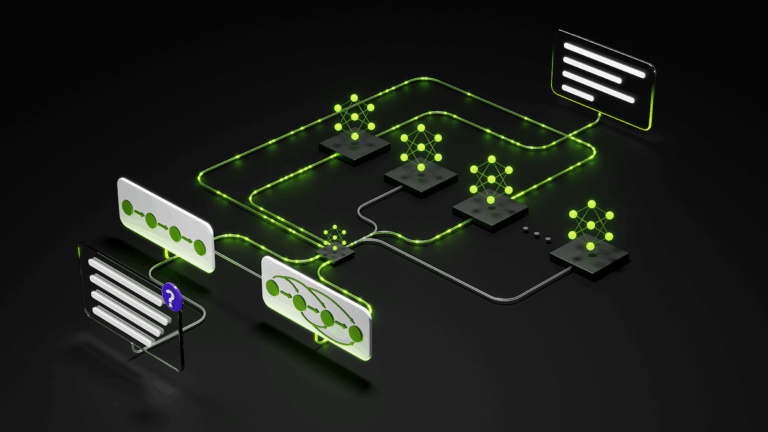

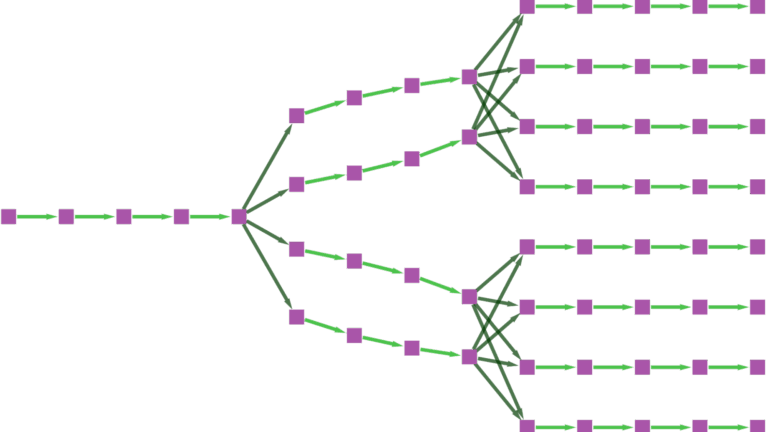

Integrating large language models (LLMs) into a production environment, where real users interact with them at scale, is a critical step in any AI workflow. Getting the models to run is only the start. They also need to be fast, easy to manage, and flexible enough to support different use cases and production needs. With a growing number of LLMs, each with its own architecture…