The new “In Practice” series takes an in-depth look at how to work with the Jetson using developer tools and external hardware. In this article, we work with USB cameras. Looky here:

In a previous article, “In Depth: Cameras”, we discussed how digital cameras work. Here, we go over how to integrate a USB camera with a Jetson Developer Kit.

Introduction

The NVIDIA Jetson Developer Kits support directly connected cameras using two main methods. The first is to use a MIPI Camera Serial Interface (CSI). The MIPI Alliance is the industry group that develops technical specifications for the mobile ecosystem. On Jetsons like the Nano, a CSI camera might be a sensor module like the familiar Raspberry Pi V2 camera, which is based on the Sony IMX219 image sensor.

The second type of camera is a camera that connects via a USB port, for example a webcam. In this article, we will discuss USB cameras.

Jetson Camera Architecture

At its core, the Jetson camera stack uses the Linux kernel framework Video for Linux, version 2 (V4L2). V4L2 is part of Linux and is not unique to the Jetsons. V4L2 handles a wide variety of tasks; here we concentrate on video capture from cameras.

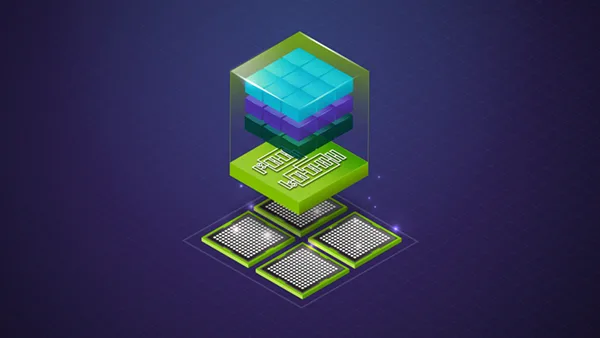

Here is the Jetson Camera Architecture Stack (from the NVIDIA Jetson documentation):

Camera Architecture Stack

CSI Input

As mentioned previously, there are two main ways to acquire video from cameras directly connected to the Jetson. The first, through the CSI ports, uses libargus, a Jetson-specific library that connects the camera sensor to the Tegra SoC Image Signal Processor (ISP). The ISP hardware performs a wide variety of image processing tasks, such as debayering the image and managing white balance, contrast and so on. Tuning the ISP is non-trivial, and requires special tools and expertise in order to produce high quality video. For more info: NVIDIA Jetson ISP Control Description from RidgeRun.

USB Input

The video from a USB camera takes a different route. The USB Video Class driver (uvcvideo) is a Linux kernel module. The uvcvideo module exposes extension unit controls (XU controls) to V4L2 through mapping and a driver-specific ioctl interface. Input/Output Controls (ioctls) are the interface to the V4L2 API. For more info: The Linux USB Video Class (UVC) driver.

What this means is that a driver in a USB camera device can specify different device-dependent controls, without uvcvideo or V4L2 having to specifically understand them. The USB camera driver hands a code for a feature, along with a range of possible values, to uvcvideo. When the user specifies a feature value for the camera, uvcvideo checks the bounds of the argument and passes it to the camera along with the feature code. The camera then adjusts itself accordingly.
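The hand-off described above can be modeled in a few lines of Python. This is a conceptual sketch only, not the uvcvideo implementation: the ControlRange class, the build_request function, and the feature code and range used for brightness are all hypothetical, and the real driver may handle an out-of-range value differently than raising an error.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ControlRange:
    """Hypothetical description of one camera feature: a code plus its legal range."""
    code: int
    minimum: int
    maximum: int


def build_request(control: ControlRange, value: int) -> Tuple[int, int]:
    """Check the bounds, then return the (code, value) pair handed to the camera."""
    if not control.minimum <= value <= control.maximum:
        raise ValueError(
            f"value {value} is outside [{control.minimum}, {control.maximum}]"
        )
    # The middle layer never interprets the code; it only forwards it.
    return control.code, value


# Made-up feature code and range, for illustration only.
brightness = ControlRange(code=0x01, minimum=0, maximum=255)
print(build_request(brightness, 128))  # (1, 128)
```

The point of the design is that the layer doing the check needs only the code and the range, not any knowledge of what the feature actually does.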

Because a USB camera does not interface directly with the ISP, there is a more direct path to user space. Here’s a diagram:

The term user space refers to where users have access to the cameras in their applications.

Outside the USB Box

This is one path to get USB camera video into the Jetson. Not all camera manufacturers provide this type of implementation. Most “plug and play” webcams do, as well as many mature “specialty” cameras. Here, we define specialty cameras as cameras with special or multiple sensors. Many thermal cameras provide this capability, as do depth cameras like the Stereolabs ZED camera and Intel RealSense cameras.

However, some specialty cameras may offer only limited access to their feature set through V4L2, or may have a standalone SDK. After all, it’s simpler for a manufacturer to provide a standalone SDK that reads from a given USB serial port than to go through the kernel interface. People then interface with the camera through the SDK. We are not discussing those types of cameras here.

Camera Capabilities

Camera capabilities describe many aspects of how a camera operates. These include pixel formats, available frame sizes, and frame rates (frames per second). You can also examine the parameters that control the camera operation, such as brightness, saturation, contrast and so on.

You can examine camera capabilities with a command line tool named v4l2-ctl. As shown in the video, to install v4l2-ctl, install the v4l-utils Debian package:

$ sudo apt install v4l-utils

Here are some useful commands:

# List attached devices

$ v4l2-ctl --list-devices

# List all info about a given device

$ v4l2-ctl --all -d /dev/videoX

# Where X is the device number. For example:

$ v4l2-ctl --all -d /dev/video0

# List the camera's pixel formats, image sizes, and frame rates

$ v4l2-ctl --list-formats-ext -d /dev/videoX

There are many more commands. Use the help option (v4l2-ctl --help) to get more information.
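If you want the same capability information inside a program, one option is to run v4l2-ctl and parse its text output. The sketch below is a minimal, hedged example: the function names are made up for illustration, the regular expressions assume the "Size: Discrete WxH" and "(N fps)" layout that v4l2-ctl typically prints, and stepwise or continuous frame sizes are ignored. The output layout varies between v4l-utils versions, so check it against what your own system prints.

```python
import re
import subprocess


def list_formats_text(device="/dev/video0"):
    """Run v4l2-ctl and return its text output (requires the v4l-utils package)."""
    result = subprocess.run(
        ["v4l2-ctl", "--list-formats-ext", "-d", device],
        stdout=subprocess.PIPE, universal_newlines=True, check=True,
    )
    return result.stdout


def parse_formats(text):
    """Collect (pixel_format, width, height, fps) tuples from the listing."""
    modes = []
    pixel_format = None
    size = None
    for line in text.splitlines():
        # Newer releases print "[0]: 'YUYV' ...", older ones "Pixel Format: 'YUYV'".
        fmt = re.search(r"(?:\[\d+\]|Pixel Format)\s*:\s*'([^']+)'", line)
        if fmt:
            pixel_format = fmt.group(1)
            size = None  # sizes belong to the format they follow
            continue
        dims = re.search(r"Size: Discrete (\d+)x(\d+)", line)
        if dims:
            size = (int(dims.group(1)), int(dims.group(2)))
            continue
        rate = re.search(r"\(([\d.]+) fps\)", line)
        if rate and pixel_format and size:
            modes.append((pixel_format, size[0], size[1], float(rate.group(1))))
    return modes


# Typical use on a Jetson with a camera attached:
#   for mode in parse_formats(list_formats_text("/dev/video0")):
#       print(mode)
```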

The video demonstrates a GUI wrapper around v4l2-ctl. The code is available in the JetsonHacks GitHub repository camera-caps. Please read the installation directions in the README file.

Interfacing with your Application

In the Jetson Camera Architecture, a V4L2 camera stream is available to an application in two different ways. The first way is to use the V4L2 interface directly through ioctl calls, or through a library that has a V4L2 backend. The second way is to use GStreamer, which is a media processing framework.

ioctl

The most popular library for interfacing with V4L2 cameras via ioctl on the Jetson is the GitHub repository dusty-nv/jetson-utils. In that repository, Dustin Franklin from NVIDIA provides C/C++ Linux wrapper utilities for camera, codecs, GStreamer, CUDA and OpenCV. Check out the ‘camera’ folders for samples on interfacing with V4L2 cameras via ioctl.
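To make the ioctl path concrete, here is a minimal Python sketch that issues the VIDIOC_QUERYCAP request, which asks a V4L2 device to describe itself. This is not code from jetson-utils (which is C/C++); it only illustrates the same kernel interface. The struct layout follows struct v4l2_capability in linux/videodev2.h, the request-code layout is the one used on ARM and x86, and the function names and the /dev/video0 default are illustrative assumptions.

```python
import fcntl
import os
import struct

# struct v4l2_capability: driver[16], card[32], bus_info[32],
# version, capabilities, device_caps, reserved[3] (104 bytes in total).
CAPABILITY_FORMAT = "=16s32s32sIII3I"

V4L2_CAP_VIDEO_CAPTURE = 0x00000001
V4L2_CAP_STREAMING = 0x04000000


def ior(type_char, number, size):
    """Build a read-direction request code, as the kernel's _IOR macro does."""
    ioc_read = 2  # direction bits; this layout applies to ARM and x86
    return (ioc_read << 30) | (size << 16) | (ord(type_char) << 8) | number


VIDIOC_QUERYCAP = ior("V", 0, struct.calcsize(CAPABILITY_FORMAT))


def parse_capability(raw):
    """Decode the bytes the kernel wrote into a small dictionary."""
    driver, card, bus_info, _version, caps, *_rest = struct.unpack(
        CAPABILITY_FORMAT, raw
    )

    def text(field):
        return field.split(b"\0", 1)[0].decode("utf-8", "replace")

    return {
        "driver": text(driver),
        "card": text(card),
        "bus_info": text(bus_info),
        "video_capture": bool(caps & V4L2_CAP_VIDEO_CAPTURE),
        "streaming": bool(caps & V4L2_CAP_STREAMING),
    }


def query_capabilities(device="/dev/video0"):
    """Open the device node and ask the driver to fill in the struct."""
    buffer = bytearray(struct.calcsize(CAPABILITY_FORMAT))
    fd = os.open(device, os.O_RDWR)
    try:
        fcntl.ioctl(fd, VIDIOC_QUERYCAP, buffer)  # buffer is filled in place
    finally:
        os.close(fd)
    return parse_capability(bytes(buffer))
```

With a UVC webcam attached, calling query_capabilities() should report uvcvideo as the driver, though the exact strings depend on the camera and kernel.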

GStreamer

GStreamer is a major part of the Jetson Camera Architecture. The GStreamer architecture is extensible. NVIDIA implements DeepStream elements which, when added to a GStreamer pipeline, provide deep learning analysis of a video stream. NVIDIA calls this Intelligent Video Analytics.

GStreamer has tools which allow it to run as a standalone application. There are also libraries which allow GStreamer to be part of an application. GStreamer can be integrated into an application in several ways, one of which is through OpenCV. For example, the popular Python library NVIDIA-AI-IOT/jetcam on GitHub uses OpenCV to help manage the camera and display.

OpenCV

OpenCV is a popular framework for building computer vision applications. Many people use OpenCV to handle camera input and display the video in a window. This can be done in just a few lines of code. OpenCV is also flexible. In the default Jetson distribution, OpenCV can use either a V4L2 (ioctl) interface or a GStreamer interface for camera input. OpenCV can also use GTK+ or Qt for its display windows.

The simple camera applications shown in the video utilize OpenCV. The examples are in the JetsonHacks GitHub repository USB-Camera. usb-camera-simple.py utilizes V4L2 camera input. usb-camera-gst.py utilizes GStreamer for its input interface.
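Here is a minimal sketch of the two input paths, assuming an OpenCV build with V4L2 and GStreamer support and a camera at /dev/video0. It is not the code from the USB-Camera repository: the function names and the 640x480 at 30 fps settings are illustrative, and the camera must actually offer that mode as raw video for the GStreamer pipeline to negotiate.

```python
def gstreamer_pipeline(device="/dev/video0", width=640, height=480, fps=30):
    """Build a pipeline string that delivers BGR frames to OpenCV's appsink."""
    return (
        f"v4l2src device={device} ! "
        f"video/x-raw, width={width}, height={height}, framerate={fps}/1 ! "
        "videoconvert ! video/x-raw, format=BGR ! appsink"
    )


def open_camera(index=0, use_gstreamer=False):
    """Open the camera through either the GStreamer or the V4L2 backend."""
    import cv2  # imported here so the pipeline helper works without OpenCV

    if use_gstreamer:
        pipeline = gstreamer_pipeline(device=f"/dev/video{index}")
        return cv2.VideoCapture(pipeline, cv2.CAP_GSTREAMER)
    return cv2.VideoCapture(index, cv2.CAP_V4L2)


def show(capture, title="USB Camera"):
    """Display frames in a window until Esc is pressed or the stream ends."""
    import cv2

    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break
            cv2.imshow(title, frame)
            if cv2.waitKey(10) & 0xFF == 27:  # 27 is the Esc key
                break
    finally:
        capture.release()
        cv2.destroyAllWindows()


# Typical use on a Jetson with a display attached:
#   show(open_camera(0))                      # V4L2 input
#   show(open_camera(0, use_gstreamer=True))  # GStreamer input
```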

One note here: if you are using a different version of OpenCV than the one in the default Jetson distribution, you will need to make sure that you have the appropriate libraries linked in, such as V4L2 and GStreamer.

Looking around GitHub, you should be able to find other Jetson camera helpers. It’s always fun to take a look around and see what others are doing!

USB Notes

As mentioned in the video, the USB bandwidth on the Jetson may not match your expectations. For example, the Jetson Nano and Xavier NX each have four USB 3 ports. However, those ports connect to a single hub internally on the Jetson. This means that all four ports share the bandwidth of that hub, which is less than that of four independent SuperSpeed USB ports.
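A rough calculation shows why the shared hub matters. The sketch below estimates the raw data rate of uncompressed video and compares several cameras against one link. The numbers are assumptions for illustration: 2 bytes per pixel corresponds to a YUYV-style format, and 5 Gbit/s is the nominal USB 3.0 SuperSpeed signaling rate, which overstates the usable throughput once encoding and protocol overhead are taken into account. Check the actual hub speed for your Jetson model.

```python
USB3_NOMINAL_BITS_PER_SECOND = 5_000_000_000  # assumed nominal rate, not measured


def stream_bits_per_second(width, height, fps, bytes_per_pixel=2):
    """Raw data rate of one uncompressed video stream, in bits per second."""
    return width * height * bytes_per_pixel * fps * 8


def link_utilization(stream_rates, link_bits_per_second=USB3_NOMINAL_BITS_PER_SECOND):
    """Fraction of the link consumed by all streams combined."""
    return sum(stream_rates) / link_bits_per_second


one_camera = stream_bits_per_second(1920, 1080, 30)
print(one_camera)  # 995328000, roughly 1 Gbit/s for a single 1080p30 stream

four_cameras = [one_camera] * 4
print(f"{link_utilization(four_cameras):.0%} of the nominal link rate")
```

Four such cameras already approach the nominal rate of a single link, which is one reason many cameras offer compressed formats such as MJPG at higher resolutions.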

Also, in order to conserve power, the Jetson implements what is called ‘USB autosuspend’. This powers down a USB port when it is not in use. Most USB cameras handle this correctly and do not let the USB port autosuspend. However, occasionally you may encounter a camera that does not do this.

The usual symptom is that everything appears to work correctly for a little while, and then the camera just stops. It’s a little baffling if you don’t know what’s going on.

Fortunately, you can turn off USB autosuspend. This is a little bit different on different OS releases and Jetson types. You should be able to search the Jetson forums for how to control this for your particular configuration.
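Before changing anything, it can help to see the current settings. The read-only sketch below relies on the standard Linux sysfs files for USB power management: /sys/module/usbcore/parameters/autosuspend holds the default idle delay in seconds (a negative value disables autosuspend), and each device's power/control file reads "auto" when autosuspend is allowed or "on" when the device is kept powered. The function names are illustrative, and how to change these settings persistently is release-specific, as noted above.

```python
from pathlib import Path

USBCORE_AUTOSUSPEND = Path("/sys/module/usbcore/parameters/autosuspend")
USB_DEVICES = Path("/sys/bus/usb/devices")


def read_setting(path):
    """Return the stripped contents of a sysfs file, or None if unreadable."""
    try:
        return Path(path).read_text().strip()
    except OSError:
        return None


def autosuspend_report(devices_dir=USB_DEVICES, default_path=USBCORE_AUTOSUSPEND):
    """Collect the default delay and the per-device power/control values."""
    report = {
        "default_delay_seconds": read_setting(default_path),
        "devices": {},
    }
    for control in sorted(Path(devices_dir).glob("*/power/control")):
        device_name = control.parent.parent.name  # for example "1-2"
        report["devices"][device_name] = read_setting(control)
    return report


# Typical use on a Jetson:
#   print(autosuspend_report())
```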

Conclusion

Hopefully this is enough information to get you started, and to get you asking the right questions for more advanced development. Working with cameras is one of the most important areas of Jetson development.