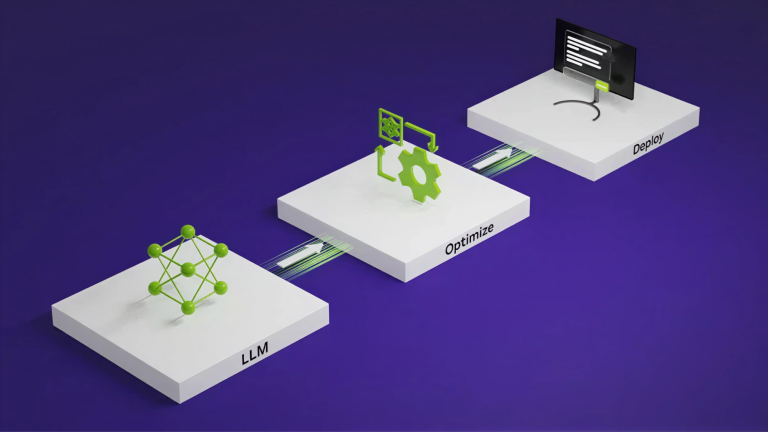

Reinforcement learning from human feedback (RLHF) is essential for developing AI systems that are aligned with human values and preferences. It underpins the most capable LLMs, including the ChatGPT, Claude, and Nemotron families, and helps them generate exceptional responses. By integrating human feedback into the training process, RLHF enables models to learn more nuanced behaviors and make decisions that…