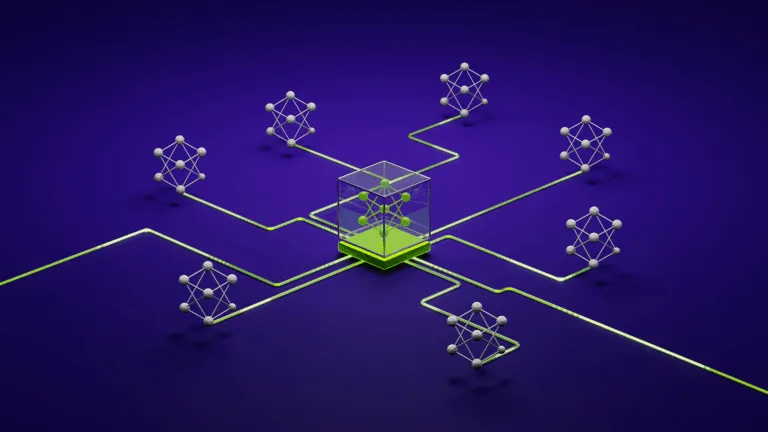

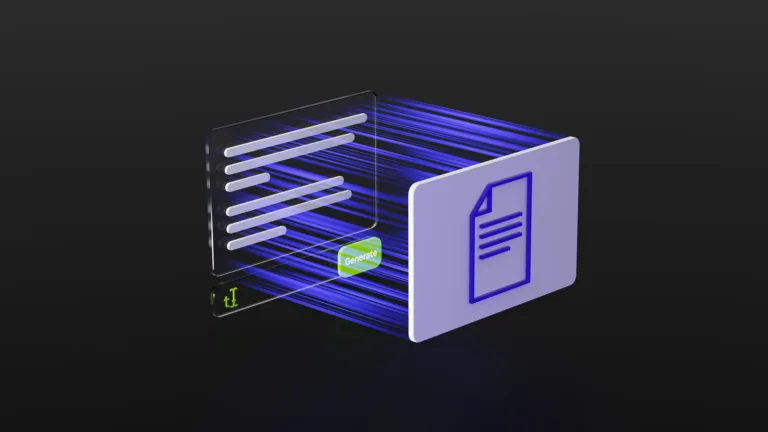

Vision-language models (VLMs) combine the language understanding of foundation LLMs with the visual capabilities of vision transformers (ViTs) by projecting text and images into the same embedding space. They can take unstructured multimodal data, reason over it, and return the output in a structured format. Building on a broad base of pretraining, they can be easily adapted for…
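
To make the shared embedding space concrete, here is a minimal sketch of one common way it can be done: a learned linear projector that maps ViT patch features to the width of the LLM's token embeddings. The text above does not say which mechanism a given VLM uses, so treat this as an illustration under assumptions. The dimensions, the `linear_projection` helper, and the random values standing in for encoder outputs and learned weights are all hypothetical.

```python
import random


def linear_projection(features, weight, bias):
    """Map each input vector x (length d_in) to y = W x + b (length d_out)."""
    return [
        [sum(w * x for w, x in zip(row, vec)) + b for row, b in zip(weight, bias)]
        for vec in features
    ]


random.seed(0)

VISION_DIM = 4  # hypothetical width of a ViT patch feature
TEXT_DIM = 6    # hypothetical width of an LLM token embedding

# Stand-ins for real encoder outputs: 3 image patches and 2 text tokens.
patch_features = [[random.gauss(0, 1) for _ in range(VISION_DIM)] for _ in range(3)]
text_embeddings = [[random.gauss(0, 1) for _ in range(TEXT_DIM)] for _ in range(2)]

# Projector parameters. These would be learned in practice; random here.
W = [[random.gauss(0, 0.1) for _ in range(VISION_DIM)] for _ in range(TEXT_DIM)]
b = [0.0] * TEXT_DIM

# Project the image patches into the text embedding width.
image_tokens = linear_projection(patch_features, W, b)

# Both modalities now share one width, so they can form a single input sequence.
sequence = image_tokens + text_embeddings
print(len(sequence), len(sequence[0]))  # prints "5 6"
```

Once the image patches have the same width as the text tokens, the language model can attend over both in a single sequence. Real systems differ in the projector they use (for example, a small MLP or a cross-attention module instead of a single linear layer).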