Graphics processing units (GPUs) are some of the largest chips made, and in just over a decade, the most powerful graphics processors have gone from comprising a few billion transistors to over 100 billion. But even those figures will be tiny compared to what lies ahead, according to TSMC, which has explained how it plans to keep making them ever bigger. Billions? Uh uh. How about a cool one trillion transistors?

Taiwan Semiconductor Manufacturing Company (TSMC) is the largest manufacturer of graphics processing units in the world. AMD, Intel, Nvidia, Qualcomm, Tesla, and others are all developing ever larger and more powerful chips. With the likes of Nvidia’s recent Blackwell B200, we’re already past the 100-billion-transistor mark, and GPUs of the future will need far more than this to keep improving in performance and capabilities.

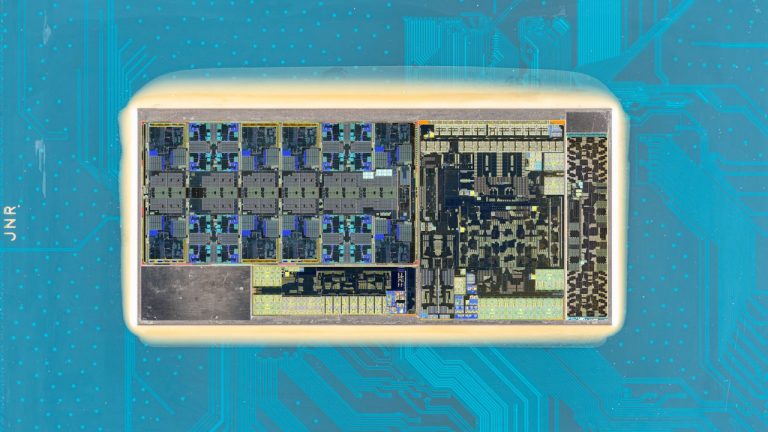

However, fabricating them is becoming increasingly challenging, as certain elements (such as SRAM bit cells for cache) aren’t much smaller than they were five years ago. While logic circuits can still be made smaller, chip dies will end up getting larger overall because those other elements have stopped shrinking. Unfortunately, the maximum size of a single die is pretty much at a limit, too, with the biggest area achievable being a little over 800 square millimetres.

This is known as the reticle limit, and there’s no sign of it being raised significantly without costs spiralling. But we’ve already seen the solution to all of this: in an article for IEEE Spectrum, via The Register, Mark Liu (TSMC’s Chairman) and Professor H.-S. Philip Wong (TSMC’s Chief Scientist) explain how going down the route of chiplets/tiles and 3D stacking will soon make 100-billion-transistor GPUs look like nothing.

“The continuation of the trend of increasing transistor count will require multiple chips, interconnected with 2.5D or 3D integration, to perform the computation. The integration of multiple chips, either by CoWoS or SoIC and related advanced packaging technologies, allows for a much larger total transistor count per system than can be squeezed into a single chip. We forecast that within a decade a multichiplet GPU will have more than 1 trillion transistors.”

Take the aforementioned Blackwell B200—that GPU might look like a massive 208 billion transistor chip, but it’s actually two 104B dies sitting on a silicon interposer. It’s the same with AMD’s MI300X 153B superchip, with four compute dies all snuggled together. But these GPUs boast huge transistor counts by spreading outward; the leap to the trillion count will require chips to be stacked on top of each other.
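
To put that leap in perspective, here's a quick back-of-the-envelope sum. This is my own rough arithmetic, not anything from TSMC's article: it assumes every die in a future package holds the same 104 billion transistors as one of today's B200 dies, which almost certainly won't be true of real designs. The function name and constants are just for illustration.

```python
import math

# Figures quoted in the article
B200_DIE_TRANSISTORS = 104_000_000_000    # one of the two B200 dies
TARGET_TRANSISTORS = 1_000_000_000_000    # TSMC's one-trillion forecast


def dies_needed(target: int, per_die: int) -> int:
    """Smallest whole number of dies whose combined count reaches the target.

    Assumption (mine, not TSMC's): every die holds the same number of
    transistors.
    """
    return math.ceil(target / per_die)


print(dies_needed(TARGET_TRANSISTORS, B200_DIE_TRANSISTORS))  # prints 10
```

Ten B200-class dies laid side by side would make for an enormous package, which is why stacking them vertically looks like the more practical route.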

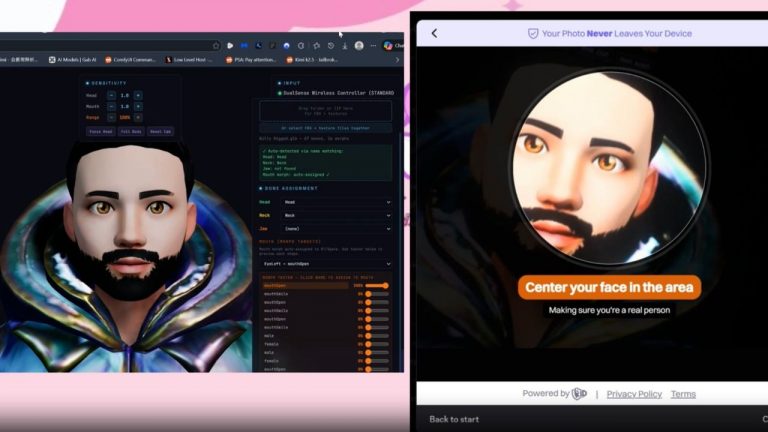

This is how AMD managed to significantly increase the amount of L3 cache in the likes of the Ryzen 7 7800X3D and other CPUs—by designing the base chiplet in such a way that it already has the connections required to fit another slice of silicon on top of it. Of course, stacking chips creates new problems, such as how to prevent heat from building up in the base layer, but TSMC sees a lot more scope for growth in this area: “We see no reason why the interconnect density can’t grow by an order of magnitude, and even beyond.”

While transistors might not get all that much smaller in the years to come, the chips themselves are clearly going to continue growing in size and complexity. Goodness knows what kind of processor graphics cards in 2034 could be packing, but I bet they’ll make today’s GPUs look basic as anything by comparison.