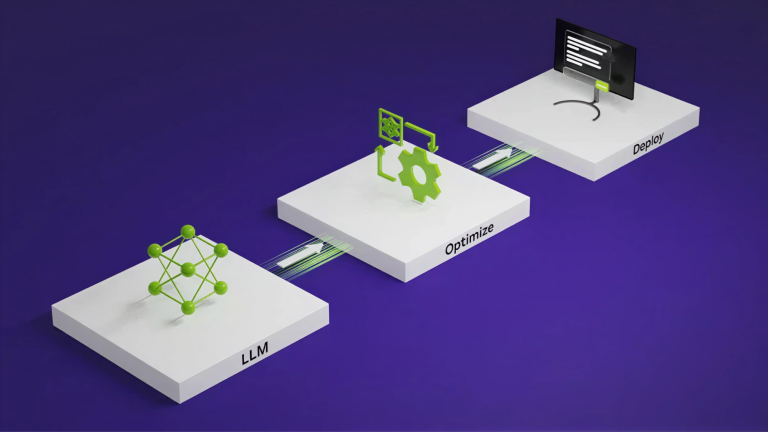

Deploying AI applications across diverse consumer hardware has traditionally forced a trade-off. You can optimize for specific GPU configurations and achieve peak performance at the cost of portability. Alternatively, you can build generic, portable engines and leave performance on the table. Bridging this gap often means tuning by hand, maintaining multiple build targets, or accepting compromises.