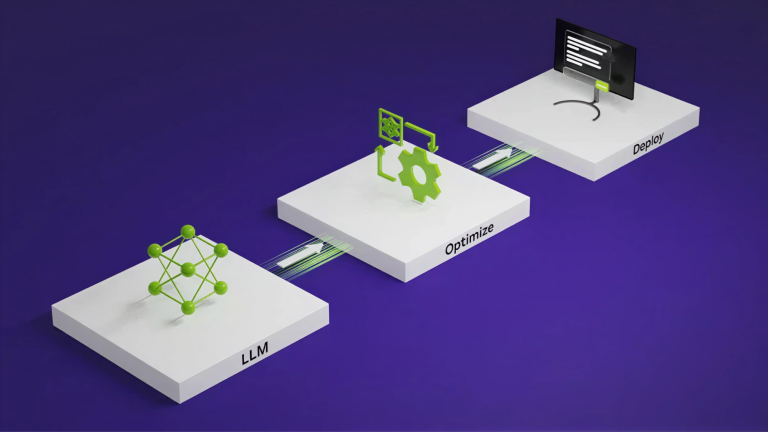

As AI models continue to get smarter, people can rely on them for an expanding set of tasks. This leads users—from consumers to enterprises—to interact with AI more frequently, meaning that more tokens need to be generated. To serve these tokens at the lowest possible cost, AI platforms need to deliver the best possible token throughput per watt. Through extreme co-design across GPUs, CPUs…