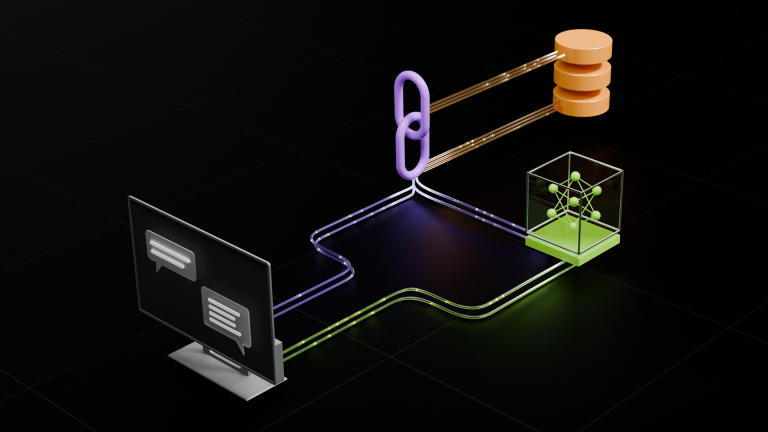

Large language models (LLMs) and multimodal reasoning systems are rapidly expanding beyond the data center. Automotive and robotics developers increasingly want to run conversational AI agents, multimodal perception, and high-level planning directly on the vehicle or robot – where latency, reliability, and the ability to operate offline matter most. While many existing LLM and vision language…