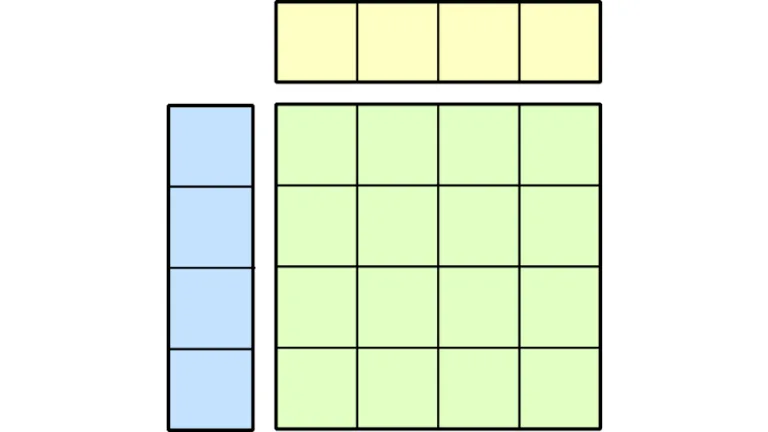

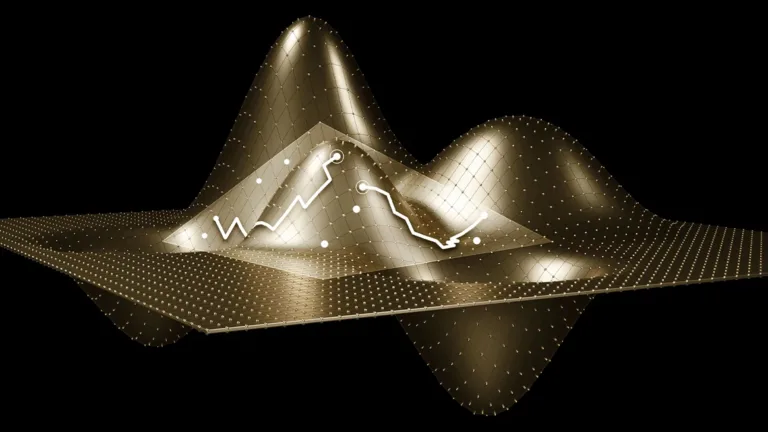

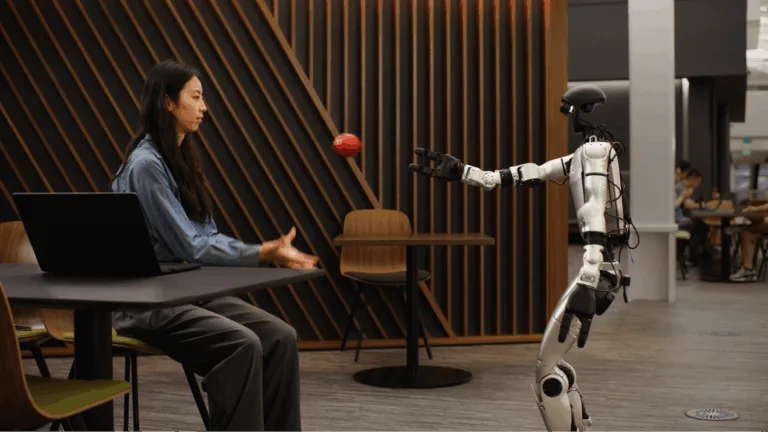

As AI models grow larger and process longer sequences of text, efficiency becomes just as important as scale. To showcase what’s next, Alibaba released two new open models, Qwen3-Next-80B-A3B-Thinking and Qwen3-Next-80B-A3B-Instruct, which give the research and developer community a preview of a new hybrid Mixture of Experts (MoE) architecture. Qwen3-Next-80B-A3B-Thinking is now live on build.
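In Qwen's naming convention, "80B-A3B" refers to roughly 80B total parameters, of which only about 3B are activated for each token. That is the efficiency argument behind MoE: a router sends each token to a few experts, so most weights sit idle on any given step. The sketch below is a generic top-k MoE layer in plain Python that illustrates this routing idea. It is not Qwen3-Next's actual router. The expert count, `k`, the toy "scale by a constant" experts, and the helper names (`top_k_route`, `moe_forward`, `make_scaler`) are all hypothetical, and the hybrid part of the architecture is not modeled.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate
    weights over the selected experts only (a common top-k MoE choice)."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])
    return list(zip(top, gates))


def moe_forward(x: Vector, experts: List[Callable[[Vector], Vector]],
                router: List[Vector], k: int) -> Tuple[Vector, List[int]]:
    # Router: one logit per expert, computed as a dot product with the input.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    out = [0.0] * len(x)
    used = []
    for idx, gate in top_k_route(logits, k):
        y = experts[idx](x)  # only the selected experts are evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
        used.append(idx)
    return out, used


def make_scaler(s: float) -> Callable[[Vector], Vector]:
    # Hypothetical toy "expert": scales its input by a constant.
    return lambda v: [s * vi for vi in v]


# Hypothetical setup: 8 experts, expert i scales by (i + 1), top-2 routing.
num_experts, k = 8, 2
experts = [make_scaler(i + 1.0) for i in range(num_experts)]
router = [[float(i), 0.0] for i in range(num_experts)]  # logit for expert i is i * x[0]
x = [1.0, 2.0]

out, used = moe_forward(x, experts, router, k)
print("experts used:", used)
print("active fraction:", len(used) / num_experts)
print("output:", out)
```

Here only 2 of the 8 toy experts run for this input. At much larger scale, the same principle is how a model can carry many parameters in total while activating only a small fraction per token.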