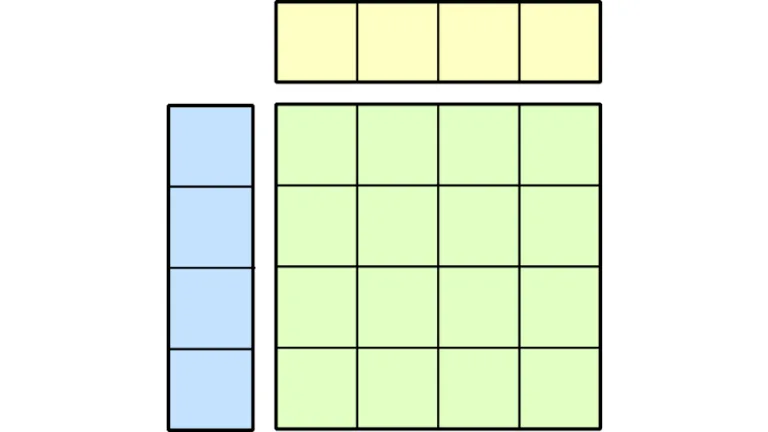

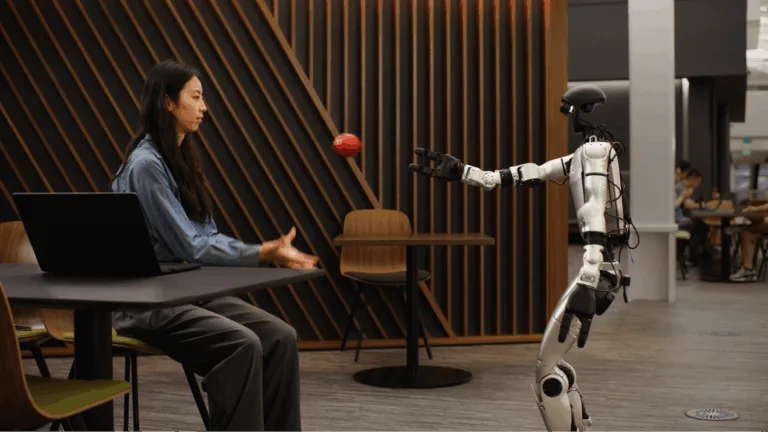

The newest generation of the popular Llama AI models is here with Llama 4 Scout and Llama 4 Maverick. Accelerated by NVIDIA open-source software, they can achieve over 40K output tokens per second on NVIDIA Blackwell B200 GPUs and are available to try as NVIDIA NIM microservices. The Llama 4 models are natively multimodal and multilingual, and they are built on a mixture-of-experts (MoE) architecture.