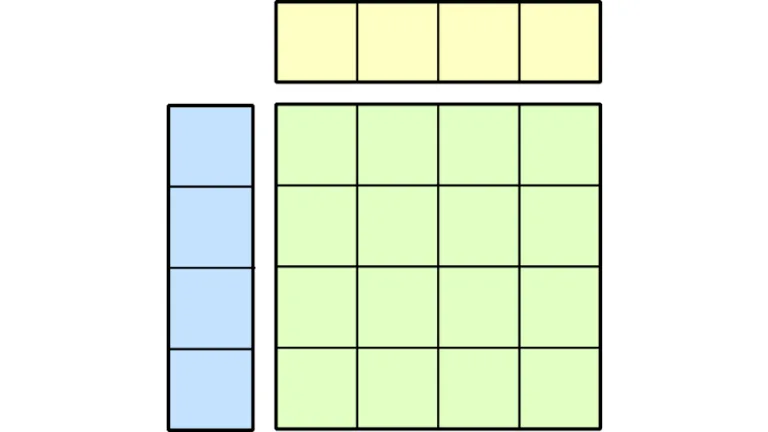

Model pruning and knowledge distillation are powerful, cost-effective strategies for obtaining smaller language models from a larger sibling. The post How to Prune and Distill Llama-3.1 8B to an NVIDIA Llama-3.1-Minitron 4B Model discussed best practices for using large language models (LLMs) that combine depth, width, attention, and MLP pruning with knowledge distillation…