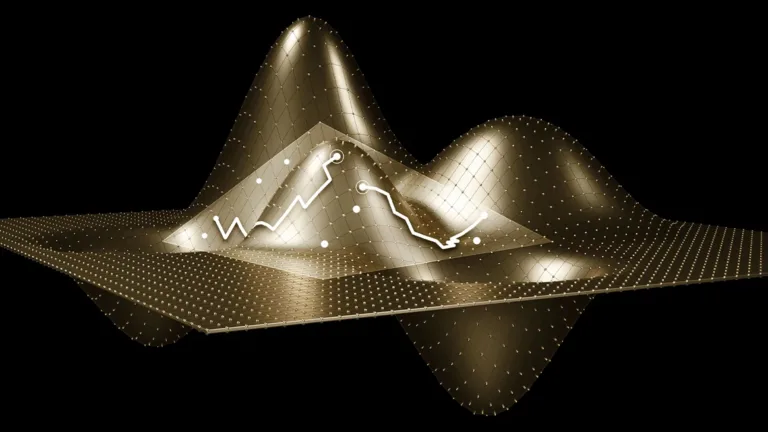

As AI models extend their capabilities to solve more sophisticated challenges, a new scaling law known as test-time scaling or inference-time scaling is…

As AI models extend their capabilities to solve more sophisticated challenges, a new scaling law known as test-time scaling or inference-time scaling is emerging. Also known as AI reasoning or long-thinking, this technique improves model performance by allocating additional computational resources during inference to evaluate multiple possible outcomes and then selecting the best one…