NVIDIA Cosmos, a platform for accelerating physical AI development, introduces a family of world foundation models — neural networks that can predict and generate physics-aware videos of the future state of a virtual environment — to help developers build next-generation robots and autonomous vehicles (AVs).

World foundation models, or WFMs, are as fundamental as large language models. They use input data, including text, image, video and movement, to generate and simulate virtual worlds in a way that accurately models the spatial relationships of objects in the scene and their physical interactions.

At CES today, NVIDIA announced the first wave of Cosmos WFMs for physics-based simulation and synthetic data generation, along with state-of-the-art tokenizers, guardrails, an accelerated data processing and curation pipeline, and a framework for model customization and optimization.

Researchers and developers, regardless of their company size, can freely use the Cosmos models under NVIDIA’s permissive open model license that allows commercial usage. Enterprises building AI agents can also use new open NVIDIA Llama Nemotron and Cosmos Nemotron models, unveiled at CES.

The openness of Cosmos’ state-of-the-art models unblocks physical AI developers building robotics and AV technology and enables enterprises of all sizes to more quickly bring their physical AI applications to market. Developers can use Cosmos models directly to generate physics-based synthetic data, or they can harness the NVIDIA NeMo framework to fine-tune the models with their own videos for specific physical AI setups.

Physical AI leaders — including robotics companies 1X, Agility Robotics and XPENG, and AV developers Uber and Waabi — are already working with Cosmos to accelerate and enhance model development.

Developers can preview the first Cosmos autoregressive and diffusion models on the NVIDIA API catalog, and download the family of models and fine-tuning framework from the NVIDIA NGC catalog and Hugging Face.

World Foundation Models for Physical AI

Cosmos world foundation models are a suite of open diffusion and autoregressive transformer models for physics-aware video generation. The models have been trained on 9,000 trillion tokens from 20 million hours of real-world human interactions, environment, industrial, robotics and driving data.

The models come in three categories: Nano, for models optimized for real-time, low-latency inference and edge deployment; Super, for highly performant baseline models; and Ultra, for maximum quality and fidelity, best used for distilling custom models.

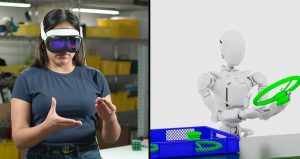

When paired with NVIDIA Omniverse 3D outputs, the diffusion models generate controllable, high-quality synthetic video data to bootstrap training of robotic and AV perception models. The autoregressive models predict what should come next in a sequence of video frames based on input frames and text. This enables real-time next-token prediction, giving physical AI models the foresight to predict their next best action.
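The autoregressive behavior described above can be illustrated with a toy rollout loop. This is only a sketch of the general technique, not the Cosmos implementation: tokens are plain integers, and `toy_next_token` is a hypothetical stand-in for a trained model's predictor.

```python
from typing import Callable, List, Sequence

Token = int  # stand-in for one discrete video token (assumption)


def toy_next_token(context: Sequence[Token]) -> Token:
    """Hypothetical predictor: repeat the step between the last two tokens."""
    if len(context) < 2:
        return context[-1]
    return context[-1] + (context[-1] - context[-2])


def rollout(
    prompt: Sequence[Token],
    steps: int,
    predict: Callable[[Sequence[Token]], Token] = toy_next_token,
) -> List[Token]:
    """Autoregressive generation: predict one token from everything generated
    so far, append it, and feed the longer context back in."""
    if not prompt:
        raise ValueError("prompt must contain at least one token")
    tokens = list(prompt)
    for _ in range(steps):
        tokens.append(predict(tokens))
    return tokens


if __name__ == "__main__":
    print(rollout([0, 2, 4], steps=3))  # [0, 2, 4, 6, 8, 10]
```

A real model would replace `toy_next_token` with a transformer forward pass over video tokens plus text conditioning, but the loop structure stays the same.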

Developers can use Cosmos’ open models for text-to-world and video-to-world generation. Versions of the diffusion and autoregressive models, with between 4 and 14 billion parameters each, are available now on the NGC catalog and Hugging Face.

Also available are a 12-billion-parameter upsampling model for refining text prompts, a 7-billion-parameter video decoder optimized for augmented reality, and guardrail models to ensure responsible, safe use.

To demonstrate opportunities for customization, NVIDIA is also releasing fine-tuned model samples for vertical applications, such as generating multisensor views for AVs.

Advancing Robotics, Autonomous Vehicle Applications

Cosmos world foundation models can enable synthetic data generation to augment training datasets, simulation to test and debug physical AI models before they’re deployed in the real world, and reinforcement learning in virtual environments to accelerate AI agent learning.

Developers can generate massive amounts of controllable, physics-based synthetic data by conditioning Cosmos with composed 3D scenes from NVIDIA Omniverse.

Waabi, a company pioneering generative AI for the physical world, starting with autonomous vehicles, is evaluating the use of Cosmos for the search and curation of video data for AV software development and simulation. This will further accelerate the company’s industry-leading approach to safety, which is based on Waabi World, a generative AI simulator that can create any situation a vehicle might encounter with the same level of realism as if it happened in the real world.

In robotics, WFMs can generate synthetic virtual environments or worlds to provide a less expensive, more efficient and controlled space for robot learning. Embodied AI startup Hillbot is boosting its data pipeline by using Cosmos to generate terabytes of high-fidelity 3D environments. This AI-generated data will help the company refine its robotic training and operations, enabling faster, more efficient robotic skilling and improved performance for industrial and domestic tasks.

In both industries, developers can use NVIDIA Omniverse and Cosmos as a multiverse simulation engine, allowing a physical AI policy model to simulate every possible future path it could take to execute a particular task — which in turn helps the model select the best of these paths.
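The idea of simulating candidate futures and choosing the best one can be sketched in a few lines. Everything here is an illustrative assumption: `toy_world_model` is a hypothetical 1D stand-in for a learned world model, and exhaustive enumeration is only practical for tiny action sets and short horizons.

```python
from itertools import product
from typing import Callable, Sequence, Tuple

State = int
Action = int


def toy_world_model(state: State, action: Action) -> State:
    """Hypothetical world model: move a point along a line."""
    return state + action


def best_plan(
    start: State,
    goal: State,
    actions: Sequence[Action],
    horizon: int,
    step: Callable[[State, Action], State] = toy_world_model,
) -> Tuple[Tuple[Action, ...], State]:
    """Simulate every action sequence of length `horizon` and return the one
    whose final state ends closest to `goal`, with that final state."""
    if not actions:
        raise ValueError("actions must not be empty")
    best_seq: Tuple[Action, ...] = ()
    best_state, best_cost = start, float("inf")
    for seq in product(actions, repeat=horizon):
        state = start
        for action in seq:
            state = step(state, action)  # roll the simulated future forward
        cost = abs(goal - state)
        if cost < best_cost:
            best_seq, best_state, best_cost = seq, state, cost
    return best_seq, best_state


if __name__ == "__main__":
    print(best_plan(start=0, goal=3, actions=(-1, 0, 1), horizon=3))
```

In practice a policy would sample or prune candidate paths rather than enumerate all of them, since the number of sequences grows exponentially with the horizon.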

Data curation and the training of Cosmos models relied on thousands of NVIDIA GPUs through NVIDIA DGX Cloud, a high-performance, fully managed AI platform that provides accelerated computing clusters in every leading cloud.

Developers adopting Cosmos can use DGX Cloud for an easy way to deploy Cosmos models, with further support available through the NVIDIA AI Enterprise software platform.

Customize and Deploy With NVIDIA Cosmos

In addition to foundation models, the Cosmos platform includes a data processing and curation pipeline powered by NVIDIA NeMo Curator and optimized for NVIDIA data center GPUs.

Robotics and AV developers collect millions or billions of hours of real-world recorded video, resulting in petabytes of data. Cosmos enables developers to process 20 million hours of data in just 40 days on NVIDIA Hopper GPUs, or as little as 14 days on NVIDIA Blackwell GPUs. Using unoptimized pipelines running on a CPU system with equivalent power consumption, processing the same amount of data would take over three years.
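As a quick check on what those figures imply, the arithmetic below converts them into daily throughput and relative speedups. The CPU figure is taken as exactly three years, so the CPU comparisons are lower bounds; the variable names are illustrative only.

```python
HOURS_OF_VIDEO = 20_000_000
HOPPER_DAYS = 40
BLACKWELL_DAYS = 14
CPU_DAYS_LOWER_BOUND = 3 * 365  # "over three years", so at least this many days


def hours_per_day(total_hours: float, days: float) -> float:
    """Video hours processed per day of wall-clock time."""
    return total_hours / days


hopper_rate = hours_per_day(HOURS_OF_VIDEO, HOPPER_DAYS)        # 500,000
blackwell_rate = hours_per_day(HOURS_OF_VIDEO, BLACKWELL_DAYS)  # about 1.43 million

hopper_vs_cpu = CPU_DAYS_LOWER_BOUND / HOPPER_DAYS        # at least ~27x
blackwell_vs_cpu = CPU_DAYS_LOWER_BOUND / BLACKWELL_DAYS  # at least ~78x
blackwell_vs_hopper = HOPPER_DAYS / BLACKWELL_DAYS        # ~2.9x

if __name__ == "__main__":
    print(f"Hopper:    {hopper_rate:,.0f} video hours/day")
    print(f"Blackwell: {blackwell_rate:,.0f} video hours/day")
    print(f"Speedup vs CPU: >= {hopper_vs_cpu:.1f}x (Hopper), "
          f">= {blackwell_vs_cpu:.1f}x (Blackwell)")
```

Because the CPU baseline is stated at equivalent power consumption, these ratios can also be read as rough lower bounds on the energy-efficiency gain.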

The platform also features a suite of powerful video and image tokenizers that can convert videos into tokens at different video compression ratios for training various transformer models.

The Cosmos tokenizers deliver 8x more total compression and 12x faster processing than state-of-the-art methods, offering superior quality and reduced computational costs in both training and inference. Developers can access these tokenizers, available under NVIDIA’s open model license, via Hugging Face and GitHub.
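To see why the compression ratio matters for training cost, the sketch below counts how many tokens one clip produces under two ratios. The ratios (8x temporal with 8x or 16x spatial), the clip size, and the ceiling-division rule are illustrative assumptions, not the specification of any particular Cosmos tokenizer.

```python
from math import ceil
from typing import NamedTuple, Tuple


class Compression(NamedTuple):
    temporal: int  # frames merged into one latent step
    spatial: int   # pixels merged per latent cell, along each image axis


def token_grid(frames: int, height: int, width: int, c: Compression) -> Tuple[int, int, int]:
    """Latent grid shape (time, rows, cols), using simple ceiling division."""
    return (ceil(frames / c.temporal), ceil(height / c.spatial), ceil(width / c.spatial))


def token_count(frames: int, height: int, width: int, c: Compression) -> int:
    """Total tokens produced for one clip."""
    t, h, w = token_grid(frames, height, width, c)
    return t * h * w


if __name__ == "__main__":
    clip = dict(frames=32, height=704, width=1280)  # hypothetical clip
    low = token_count(**clip, c=Compression(temporal=8, spatial=8))
    high = token_count(**clip, c=Compression(temporal=8, spatial=16))
    print(low, high, low // high)  # 56320 14080 4
```

Doubling the spatial compression cuts the sequence length by 4x here, which matters because transformer attention cost grows faster than linearly with sequence length.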

Developers using Cosmos can also harness model training and fine-tuning capabilities offered by the NeMo framework, a GPU-accelerated framework that enables high-throughput AI training.

Developing Safe, Responsible AI Models

Now available to developers under the NVIDIA Open Model License Agreement, Cosmos was developed in line with NVIDIA’s trustworthy AI principles, which include nondiscrimination, privacy, safety, security and transparency.

The Cosmos platform includes Cosmos Guardrails, a dedicated suite of models that, among other capabilities, mitigates harmful text and image inputs during preprocessing and screens generated videos during postprocessing for safety. Developers can further enhance these guardrails for their custom applications.
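The two-stage pattern described above, screening inputs before generation and outputs after it, can be sketched as a simple wrapper. This is a toy illustration under stated assumptions, not the Cosmos Guardrails API: the blocklist, the string "frames" and every function name are hypothetical, and real guardrails use trained models rather than keyword checks.

```python
from typing import Callable, List, Optional

BLOCKED_TERMS = {"weapon"}  # hypothetical blocklist for the pre-check


def pre_guard(prompt: str) -> bool:
    """Preprocessing check: pass only prompts with no blocked term."""
    words = prompt.lower().split()
    return not any(term in words for term in BLOCKED_TERMS)


def post_guard(frames: List[str]) -> bool:
    """Postprocessing check: pass only if no frame is flagged as unsafe."""
    return all("unsafe" not in frame for frame in frames)


def toy_generate(prompt: str) -> List[str]:
    """Hypothetical generator: returns labeled placeholder frames."""
    return [f"{prompt} frame {i}" for i in range(3)]


def guarded_generate(
    prompt: str,
    generate: Callable[[str], List[str]] = toy_generate,
) -> Optional[List[str]]:
    """Run generation only between a passing pre-check and post-check."""
    if not pre_guard(prompt):
        return None  # rejected before any compute is spent on generation
    frames = generate(prompt)
    if not post_guard(frames):
        return None  # generated output withheld
    return frames


if __name__ == "__main__":
    print(guarded_generate("robot arm stacking boxes"))
    print(guarded_generate("robot holding a weapon"))  # None
```

Keeping the two checks as separate functions mirrors the point that developers can extend either stage for their own applications.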

Cosmos models on the NVIDIA API catalog also feature an inbuilt watermarking system that enables identification of AI-generated sequences.

NVIDIA Cosmos was developed by NVIDIA Research. Read the research paper, “Cosmos World Foundation Model Platform for Physical AI,” for more details on model development and benchmarks. Model cards providing additional information are available on Hugging Face.

Learn more about world foundation models in an AI Podcast episode, airing Jan. 7, that features Ming-Yu Liu, vice president of research at NVIDIA.

Get started with NVIDIA Cosmos and join NVIDIA at CES. Watch the Cosmos demo and the keynote by NVIDIA founder and CEO Jensen Huang.
