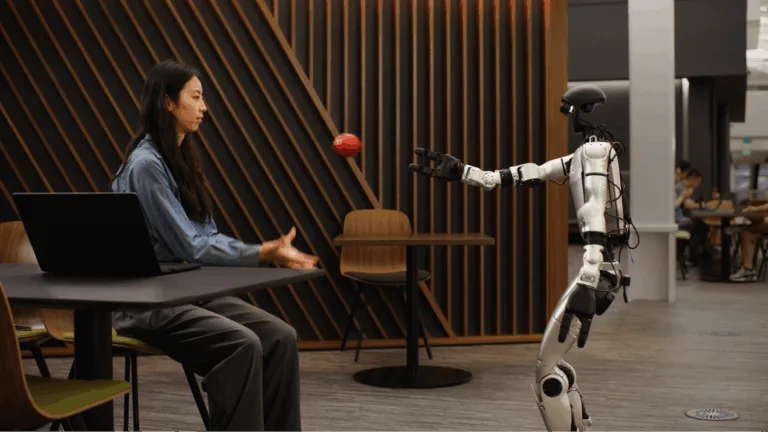

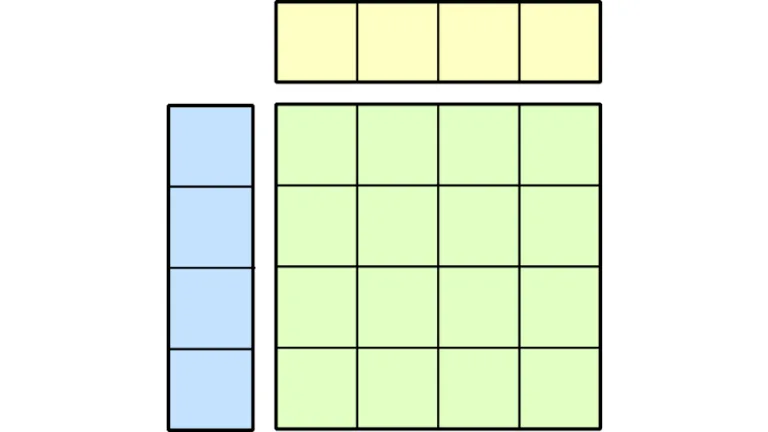

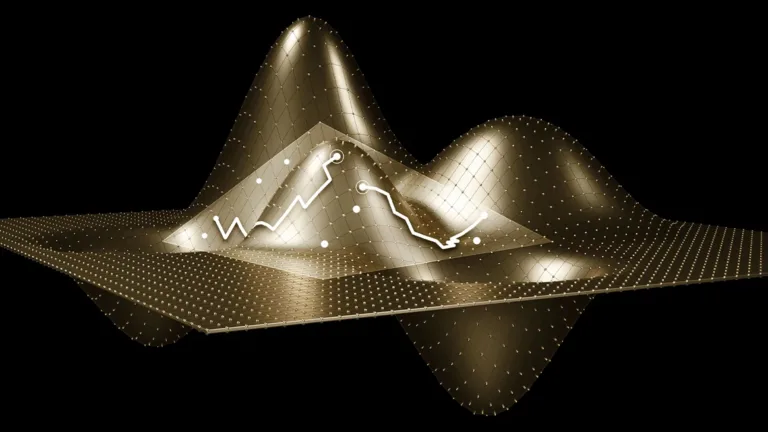

Transformers, with their attention-based architecture, have become the dominant choice for language models (LMs) due to their strong performance, parallelization capabilities, and long-term recall through key-value (KV) caches. However, their computational cost, which grows quadratically with sequence length, and their high memory demands pose efficiency challenges. In contrast, state space models (SSMs) like Mamba and Mamba-2 offer constant…