Whether they’re looking at nanoscale electron behaviors or starry galaxies colliding millions of light years away, many scientists share a common challenge — they must comb through petabytes of data to extract insights that can advance their fields.

With the NVIDIA cuPyNumeric accelerated computing library, researchers can now take their data-crunching Python code and effortlessly run it on CPU-based laptops and GPU-accelerated workstations, cloud servers or massive supercomputers. The faster they can work through their data, the quicker they can make decisions about promising data points, trends worth investigating and adjustments to their experiments.

To make the leap to accelerated computing, researchers don’t need expertise in computer science. They can simply write code using the familiar NumPy interface or apply cuPyNumeric to existing code, following best practices for performance and scalability.

Once cuPyNumeric is applied, they can run their code on one or thousands of GPUs with zero code changes.

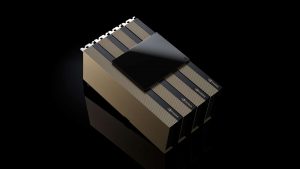

The latest version of cuPyNumeric, now available on Conda and GitHub, offers support for the NVIDIA GH200 Grace Hopper Superchip, automatic resource configuration at run time and improved memory scaling. It also supports HDF5, a popular file format in the scientific community that helps efficiently manage large, complex data.

Researchers at the SLAC National Accelerator Laboratory, Los Alamos National Laboratory, Australia National University, UMass Boston, the Center for Turbulence Research at Stanford University and the National Payments Corporation of India are among those who have integrated cuPyNumeric to achieve significant improvements in their data analysis workflows.

Less Is More: Limitless GPU Scalability Without Code Changes

Python is the most common programming language for data science, machine learning and numerical computing, used by millions of researchers in scientific fields including astronomy, drug discovery, materials science and nuclear physics. Tens of thousands of packages on GitHub depend on the NumPy math and matrix library, which had over 300 million downloads last month. All of these applications could benefit from accelerated computing with cuPyNumeric.

Many of these scientists build programs that use NumPy and run on a single CPU-only node — limiting the throughput of their algorithms to crunch through increasingly large datasets collected by instruments like electron microscopes, particle colliders and radio telescopes.

cuPyNumeric helps researchers keep pace with the growing size and complexity of their datasets by providing a drop-in replacement for NumPy that can scale to thousands of GPUs. cuPyNumeric doesn’t require code changes when scaling from a single GPU to a whole supercomputer. This makes it easy for researchers to run their analyses on accelerated computing systems of any size.

Solving the Big Data Problem, Accelerating Scientific Discovery

Researchers at SLAC National Accelerator Laboratory, a U.S. Department of Energy lab operated by Stanford University, have found that cuPyNumeric helps them speed up X-ray experiments conducted at the Linac Coherent Light Source.

A SLAC team focused on materials science discovery for semiconductors found that cuPyNumeric accelerated its data analysis application by 6x, decreasing run time from minutes to seconds. This speedup allows the team to run important analyses in parallel when conducting experiments at this highly specialized facility.

By using experiment hours more efficiently, the team anticipates it will be able to discover new material properties, share results and publish work more quickly.

Other institutions using cuPyNumeric include:

Australia National University, where researchers used cuPyNumeric to scale the Levenberg-Marquardt optimization algorithm to run on multi-GPU systems at the country’s National Computational Infrastructure. While the algorithm can be used for many applications, the researchers’ initial target is large-scale climate and weather models.

Los Alamos National Laboratory, where researchers are applying cuPyNumeric to accelerate data science, computational science and machine learning algorithms. cuPyNumeric will provide them with additional tools to effectively use the recently launched Venado supercomputer, which features over 2,500 NVIDIA GH200 Grace Hopper Superchips.

Stanford University’s Center for Turbulence Research, where researchers are developing Python-based computational fluid dynamics solvers that can run at scale on large accelerated computing clusters using cuPyNumeric. These solvers can seamlessly integrate large collections of fluid simulations with popular machine learning libraries like PyTorch, enabling complex applications including online training and reinforcement learning.

UMass Boston, where a research team is accelerating linear algebra calculations to analyze microscopy videos and determine the energy dissipated by active materials. The team used cuPyNumeric to decompose a matrix of 16 million rows and 4,000 columns.

National Payments Corporation of India, the organization behind a real-time digital payment system used by around 250 million Indians daily and expanding globally. NPCI uses complex matrix calculations to track transaction paths between payers and payees. With current methods, it takes about 5 hours to process data for a one-week transaction window on CPU systems. A trial showed that applying cuPyNumeric to accelerate the calculations on multi-node NVIDIA DGX systems could speed up matrix multiplication by 50x, enabling NPCI to process larger transaction windows in less than an hour and detect suspected money laundering in near real time.

To learn more about cuPyNumeric, see a live demo in the NVIDIA booth at the Supercomputing 2024 conference in Atlanta, join the theater talk in the expo hall and participate in the cuPyNumeric workshop.

Watch the NVIDIA special address at SC24.