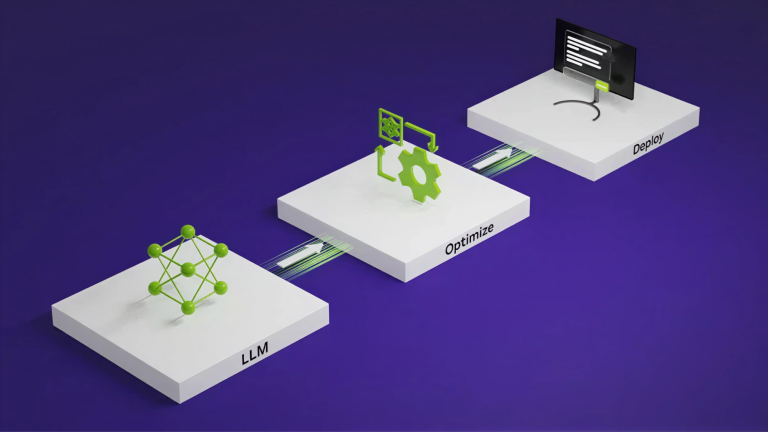

The open-source llama.cpp code base was originally released in 2023 as a lightweight but efficient framework for performing inference on Meta Llama models….

The open-source llama.cpp code base was originally released in 2023 as a lightweight but efficient framework for performing inference on Meta Llama models. Built on the GGML library released the previous year, llama.cpp quickly became attractive to many users and developers (particularly for use on personal workstations) due to its focus on C/C++ without the need for complex dependencies.